This chatbot is built with an AI Pipe on Langbase. It works with 30+ LLMs (OpenAI, Gemini, Mistral, Llama, Gemma, etc.), any Data (10M+ context with Memory sets), and any Framework (a standard web API you can use with any software).

Check out the live demo here.

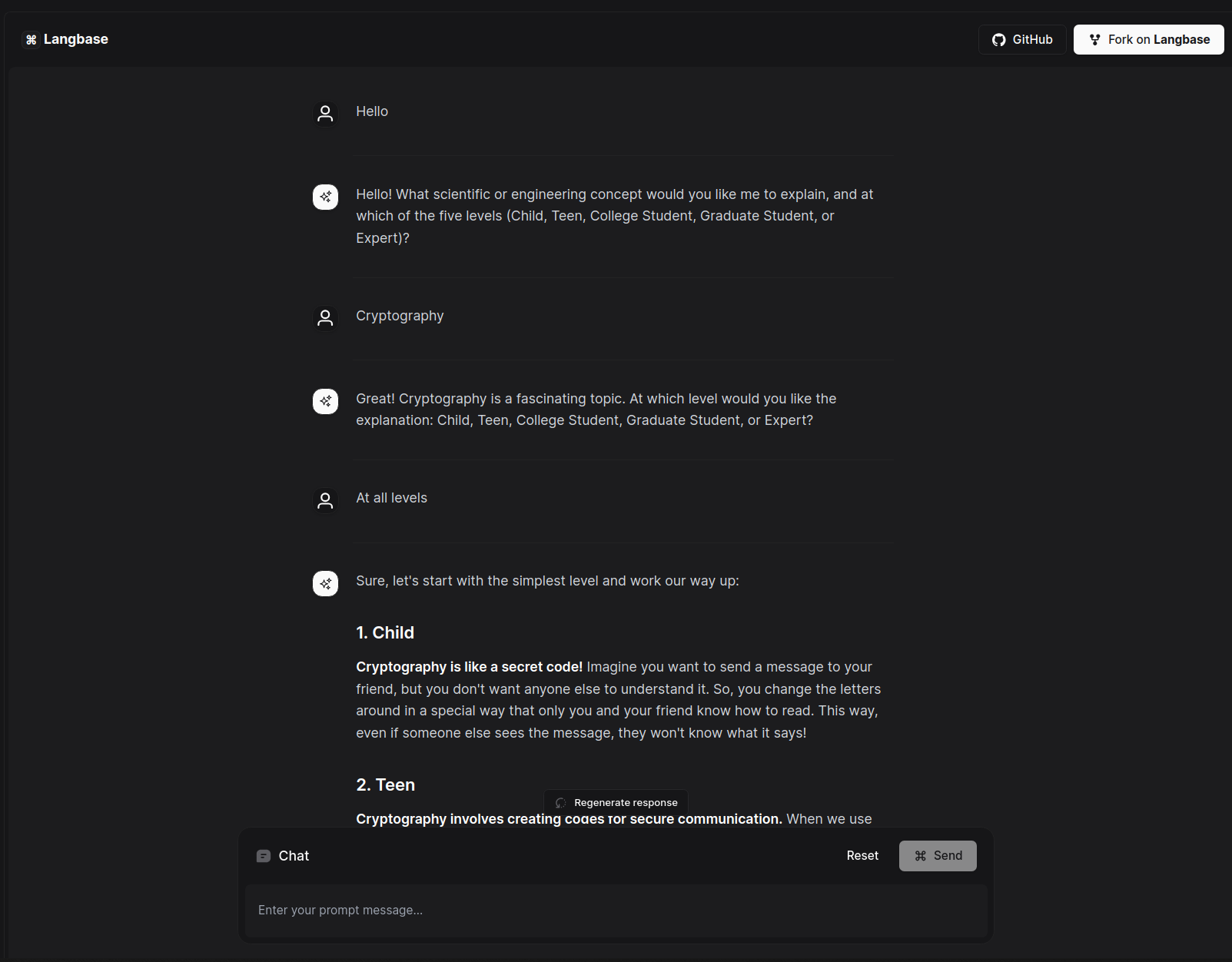

- 💬 PolyExplainer Chatbot — Built with an AI Pipe on ⌘ Langbase

- ⚡️ Streaming — Real-time chat experience with streamed responses

- 🗣️ Q/A — Ask questions and get pre-defined answers with your preferred AI model and tone

- 🔋 Responsive and open source — Works on all devices and platforms

- Check the PolyExplainer Chatbot Pipe on ⌘ Langbase

- Read the source code on GitHub for this example

- Go through the documentation: Pipe Quick Start

- Learn more about Pipes & Memory features on ⌘ Langbase
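The streaming feature listed above depends on the client reading the response body piece by piece instead of waiting for the whole answer. The following is a minimal sketch of that idea using only standard web APIs (`ReadableStream`, `TextDecoder`); it is not taken from this project's source. The names `decodeChunks`, `readTextStream`, and `onText` are hypothetical, and the sketch assumes the stream carries plain UTF-8 text.

```typescript
// Decode UTF-8 chunks incrementally. `stream: true` makes the decoder hold back
// an incomplete multi-byte character until the next chunk arrives.
function decodeChunks(chunks: Uint8Array[]): string[] {
  const decoder = new TextDecoder("utf-8");
  const pieces: string[] = [];
  for (const chunk of chunks) {
    const text = decoder.decode(chunk, { stream: true });
    if (text.length > 0) pieces.push(text);
  }
  const tail = decoder.decode(); // flush anything still buffered
  if (tail.length > 0) pieces.push(tail);
  return pieces;
}

// Read a streamed body and pass each decoded piece to `onText`
// (a hypothetical callback, e.g. one that appends text to the chat window).
// Returns the full text once the stream ends.
async function readTextStream(
  body: ReadableStream<Uint8Array>,
  onText: (text: string) => void,
): Promise<string> {
  const reader = body.getReader();
  const decoder = new TextDecoder("utf-8");
  let full = "";
  while (true) {
    const { done, value } = await reader.read();
    if (done) break;
    const text = decoder.decode(value, { stream: true });
    if (text) {
      full += text;
      onText(text);
    }
  }
  const tail = decoder.decode();
  if (tail) {
    full += tail;
    onText(tail);
  }
  return full;
}
```

In a browser, `readTextStream` would typically receive `response.body` from a `fetch` call. Decoding with `stream: true` matters because a chunk boundary can fall in the middle of a multi-byte character such as an emoji.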

Let's get started with the project:

To get started with Langbase, you'll need to create a free personal account on Langbase.com and verify your email address. Done? Cool, cool!

- Fork the PolyExplainer Chatbot Pipe on ⌘ Langbase.

- Go to the API tab to copy the Pipe's API key (to be used on server-side only).

- Download the example project folder from here or clone the repository.

- `cd` into the project directory and open it in your code editor.

- Duplicate the `.env.example` file in this project and rename it to `.env.local`.

- Add the following environment variables (`.env.local`):

      # Replace `PIPE_API_KEY` with the copied API key.
      NEXT_LB_PIPE_API_KEY="PIPE_API_KEY"

- Issue the following in your CLI:

      # Install the dependencies using the following command:
      npm install

      # Run the project using the following command:
      npm run dev

- Your app template should now be running on localhost:3000.

NOTE: This is a Next.js project, so you can build and deploy it to any platform of your choice, like Vercel, Netlify, Cloudflare, etc.
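Since the Pipe's API key is meant for server-side use only, it can help to fail fast when the variable is missing or still holds the placeholder from the example env file. Below is a small sketch of such a check. The helper `getPipeApiKey` is hypothetical and not part of this project's source; only the variable name `NEXT_LB_PIPE_API_KEY` and the placeholder `PIPE_API_KEY` come from the setup steps.

```typescript
// Variable name and placeholder value as used in the `.env.local` step.
const PIPE_KEY_VAR = "NEXT_LB_PIPE_API_KEY";
const PLACEHOLDER = "PIPE_API_KEY";

// Hypothetical helper: returns the trimmed key, or throws a descriptive error
// if the variable is unset, empty, or still the placeholder.
function getPipeApiKey(env: Record<string, string | undefined>): string {
  const key = env[PIPE_KEY_VAR]?.trim();
  if (!key) {
    throw new Error(`${PIPE_KEY_VAR} is not set. Add it to .env.local.`);
  }
  if (key === PLACEHOLDER) {
    throw new Error(`${PIPE_KEY_VAR} still holds the placeholder value.`);
  }
  return key;
}
```

In server-side code this would be called as `getPipeApiKey(process.env)`. Next.js only exposes variables prefixed with `NEXT_PUBLIC_` to the browser, so a variable named `NEXT_LB_PIPE_API_KEY` stays on the server as long as it is read only from server code.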

This project is created by Langbase team members, with contributions from:

- Muhammad-Ali Danish - Software Engineer, Langbase

Built by ⌘ Langbase.com — Ship hyper-personalized AI assistants with memory!