diff --git a/.gitignore b/.gitignore

deleted file mode 100644

index 788df19..0000000

--- a/.gitignore

+++ /dev/null

@@ -1,5 +0,0 @@

-*.DS_Store

-infer_output/*

-models/*

-anygpt/seed/*

-.gitignore

\ No newline at end of file

diff --git a/Docker/Dockerfile b/Docker/Dockerfile

new file mode 100644

index 0000000..d9b5ddc

--- /dev/null

+++ b/Docker/Dockerfile

@@ -0,0 +1,39 @@

+FROM nvidia/cuda:12.0.1-cudnn8-runtime-ubuntu22.04

+ENV DEBIAN_FRONTEND=noninteractive

+

+RUN apt-get update \

+ && apt-get upgrade -y \

+ && apt-get install -y --no-install-recommends \

+ git \

+ gcc \

+ curl \

+ wget \

+ sudo \

+ pciutils \

+ python3-all-dev \

+ python-is-python3 \

+ python3-pip \

+ ffmpeg \

+ libsdl2-dev \

+ pulseaudio \

+ alsa-utils \

+ portaudio19-dev \

+ && pip install pip -U

+

+WORKDIR /app

+

+RUN git clone https://github.com/Sunwood-ai-labs/AnyGPT-JP.git .

+

+RUN pip install -r requirements.txt

+

+RUN mkdir -p models/anygpt \

+ && mkdir -p models/seed-tokenizer-2 \

+ && mkdir -p models/speechtokenizer \

+ && mkdir -p models/soundstorm

+

+RUN pip install bigmodelvis \

+ && pip install peft \

+ && pip install --upgrade huggingface_hub

+

+# COPY scripts/download_models.py /app/scripts/download_models.py

+# RUN python scripts/download_models.py

diff --git a/Docker/README.JP.md b/Docker/README.JP.md

new file mode 100644

index 0000000..7a7c82e

--- /dev/null

+++ b/Docker/README.JP.md

@@ -0,0 +1,43 @@

+# AnyGPTのDockerでの実行方法

+

+このREADMEでは、AnyGPTをDockerを用いて実行する方法を説明します。

+

+## 前提条件

+

+- Dockerがインストール済みであること

+- GPU環境で実行する場合は、NVIDIA Container Toolkitがインストール済みであること

+

+## 手順

+

+

+1. 以下のコマンドを実行して、Dockerイメージをビルドします。

+ ```bash

+ docker-compose up --build

+ ```

+

+2. モデルをダウンロードします。

+ ```bash

+ docker-compose run anygpt python /app/scripts/download_models.py

+ ```

+

+3. 推論を実行します。

+ ```bash

+ docker-compose run anygpt python anygpt/src/infer/cli_infer_base_model.py \

+ --model-name-or-path models/anygpt/base \

+ --image-tokenizer-path models/seed-tokenizer-2/seed_quantizer.pt \

+ --speech-tokenizer-path models/speechtokenizer/ckpt.dev \

+ --speech-tokenizer-config models/speechtokenizer/config.json \

+ --soundstorm-path models/soundstorm/speechtokenizer_soundstorm_mls.pt \

+ --output-dir "infer_output/base"

+ ```

+

+6. 推論結果は `docker/infer_output/base` ディレクトリに出力されます。

+

+## トラブルシューティング

+

+- モデルのダウンロードに失敗する場合は、`download_models.py`スクリプトを確認し、必要に応じてURLを更新してください。

+- 推論の実行に失敗する場合は、コマンドの引数を確認し、モデルのパスが正しいことを確認してください。

+

+## 注意事項

+

+- モデルのダウンロードと推論の実行には、大量のメモリとディスク容量が必要です。十分なリソースを確保してください

\ No newline at end of file

diff --git a/Docker/README.md b/Docker/README.md

new file mode 100644

index 0000000..9cef503

--- /dev/null

+++ b/Docker/README.md

@@ -0,0 +1,42 @@

+# Running AnyGPT with Docker

+

+This README explains how to run AnyGPT using Docker.

+

+## Prerequisites

+

+- Docker is installed

+- NVIDIA Container Toolkit is installed if running in a GPU environment

+

+## Steps

+

+1. Build the Docker image by running the following command:

+ ```bash

+ docker-compose up --build

+ ```

+

+2. Download the models:

+ ```bash

+ docker-compose run anygpt python /app/scripts/download_models.py

+ ```

+

+3. Run the inference:

+ ```bash

+ docker-compose run anygpt python anygpt/src/infer/cli_infer_base_model.py \

+ --model-name-or-path models/anygpt/base \

+ --image-tokenizer-path models/seed-tokenizer-2/seed_quantizer.pt \

+ --speech-tokenizer-path models/speechtokenizer/ckpt.dev \

+ --speech-tokenizer-config models/speechtokenizer/config.json \

+ --soundstorm-path models/soundstorm/speechtokenizer_soundstorm_mls.pt \

+ --output-dir "infer_output/base"

+ ```

+

+4. The inference results will be output to the `docker/infer_output/base` directory.

+

+## Troubleshooting

+

+- If the model download fails, check the `download_models.py` script and update the URLs if necessary.

+- If the inference execution fails, check the command arguments and ensure that the model paths are correct.

+

+## Notes

+

+- Downloading the models and running the inference requires a large amount of memory and disk space. Ensure that sufficient resources are available.

\ No newline at end of file

diff --git a/README.md b/README.md

index 7d32fb0..a822abc 100644

--- a/README.md

+++ b/README.md

@@ -2,9 +2,15 @@

[](https://huggingface.co/datasets/fnlp/AnyInstruct)

[](https://huggingface.co/datasets/fnlp/AnyInstruct)

-

+

+

+

+ | [日本語](docs/README_JP.md) | [English](README.md) |

+

+

+

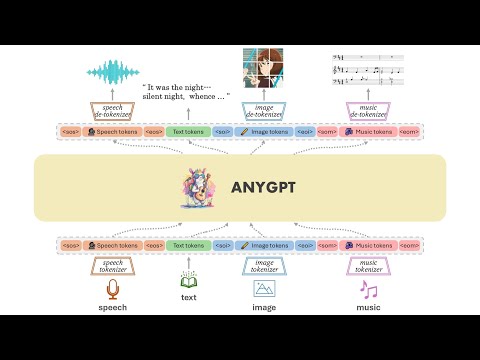

## Introduction

We introduce AnyGPT, an any-to-any multimodal language model that utilizes discrete representations for the unified processing of various modalities, including speech, text, images, and music. The [base model](https://huggingface.co/fnlp/AnyGPT-base) aligns the four modalities, allowing for intermodal conversions between different modalities and text. Furthermore, we constructed the [AnyInstruct](https://huggingface.co/datasets/fnlp/AnyInstruct) dataset based on various generative models, which contains instructions for arbitrary modal interconversion. Trained on this dataset, our [chat model](https://huggingface.co/fnlp/AnyGPT-chat) can engage in free multimodal conversations, where multimodal data can be inserted at will.

@@ -36,7 +42,7 @@ pip install -r requirements.txt

### Model Weights

* Check the AnyGPT-base weights in [fnlp/AnyGPT-base](https://huggingface.co/fnlp/AnyGPT-base)

* Check the AnyGPT-chat weights in [fnlp/AnyGPT-chat](https://huggingface.co/fnlp/AnyGPT-chat)

-* Check the SpeechTokenizer and Soundstorm weights in [fnlp/AnyGPT-speech-modules](https://huggingface.co/fnlp/AnyGPT-base)

+* Check the SpeechTokenizer and Soundstorm weights in [fnlp/AnyGPT-speech-modules](https://huggingface.co/fnlp/AnyGPT-speech-modules)

* Check the SEED tokenizer weights in [AILab-CVC/seed-tokenizer-2](https://huggingface.co/AILab-CVC/seed-tokenizer-2)

@@ -45,6 +51,10 @@ The SpeechTokenizer is used for tokenizing and reconstructing speech, Soundstorm

The model weights of unCLIP SD-UNet which are used to reconstruct the image, and Encodec-32k which are used to tokenize and reconstruct music will be downloaded automatically.

### Base model CLI Inference

+

+[](https://colab.research.google.com/drive/13_gZPIRG6ShkAbI76-hC_etvfGhry0DZ?usp=sharing)

+

+

```bash

python anygpt/src/infer/cli_infer_base_model.py \

--model-name-or-path "path/to/AnyGPT-7B-base" \

diff --git a/anygpt/src/__pycache__/__init__.cpython-39.pyc b/anygpt/src/__pycache__/__init__.cpython-39.pyc

deleted file mode 100644

index 33fb026..0000000

Binary files a/anygpt/src/__pycache__/__init__.cpython-39.pyc and /dev/null differ

diff --git a/anygpt/src/infer/__pycache__/__init__.cpython-39.pyc b/anygpt/src/infer/__pycache__/__init__.cpython-39.pyc

deleted file mode 100644

index 415718a..0000000

Binary files a/anygpt/src/infer/__pycache__/__init__.cpython-39.pyc and /dev/null differ

diff --git a/anygpt/src/infer/__pycache__/pre_post_process.cpython-39.pyc b/anygpt/src/infer/__pycache__/pre_post_process.cpython-39.pyc

deleted file mode 100644

index c8fe730..0000000

Binary files a/anygpt/src/infer/__pycache__/pre_post_process.cpython-39.pyc and /dev/null differ

diff --git a/anygpt/src/infer/__pycache__/voice_clone.cpython-39.pyc b/anygpt/src/infer/__pycache__/voice_clone.cpython-39.pyc

deleted file mode 100644

index af4f1a4..0000000

Binary files a/anygpt/src/infer/__pycache__/voice_clone.cpython-39.pyc and /dev/null differ

diff --git a/anygpt/src/infer/pre_post_process.py b/anygpt/src/infer/pre_post_process.py

index d1d299e..2abc681 100644

--- a/anygpt/src/infer/pre_post_process.py

+++ b/anygpt/src/infer/pre_post_process.py

@@ -4,7 +4,7 @@

sys.path.append("/mnt/petrelfs/zhanjun.p/mllm")

sys.path.append("/mnt/petrelfs/zhanjun.p/src")

from transformers import GenerationConfig

-from mmgpt.src.m_utils.prompter_mmgpt import Prompter

+from anygpt.src.m_utils.prompter import Prompter

from tqdm import tqdm

from m_utils.conversation import get_conv_template

@@ -237,4 +237,4 @@ def text_normalization(original_text):

tag1 = '[MMGPT]'

tag2 = '[eom]'

print(extract_content_between_final_tags(example_text, tag1, tag2))

- print(extract_text_between_tags(example_text, tag1, tag2))

\ No newline at end of file

+ print(extract_text_between_tags(example_text, tag1, tag2))

diff --git a/anygpt/src/m_utils/__pycache__/__init__.cpython-311.pyc b/anygpt/src/m_utils/__pycache__/__init__.cpython-311.pyc

deleted file mode 100644

index 72023df..0000000

Binary files a/anygpt/src/m_utils/__pycache__/__init__.cpython-311.pyc and /dev/null differ

diff --git a/anygpt/src/m_utils/__pycache__/__init__.cpython-39.pyc b/anygpt/src/m_utils/__pycache__/__init__.cpython-39.pyc

deleted file mode 100644

index d7acf29..0000000

Binary files a/anygpt/src/m_utils/__pycache__/__init__.cpython-39.pyc and /dev/null differ

diff --git a/anygpt/src/m_utils/__pycache__/anything2token.cpython-39.pyc b/anygpt/src/m_utils/__pycache__/anything2token.cpython-39.pyc

deleted file mode 100644

index ac5e34f..0000000

Binary files a/anygpt/src/m_utils/__pycache__/anything2token.cpython-39.pyc and /dev/null differ

diff --git a/anygpt/src/m_utils/__pycache__/conversation.cpython-39.pyc b/anygpt/src/m_utils/__pycache__/conversation.cpython-39.pyc

deleted file mode 100644

index 2031e38..0000000

Binary files a/anygpt/src/m_utils/__pycache__/conversation.cpython-39.pyc and /dev/null differ

diff --git a/anygpt/src/m_utils/__pycache__/instructions.cpython-39.pyc b/anygpt/src/m_utils/__pycache__/instructions.cpython-39.pyc

deleted file mode 100644

index cf31feb..0000000

Binary files a/anygpt/src/m_utils/__pycache__/instructions.cpython-39.pyc and /dev/null differ

diff --git a/anygpt/src/m_utils/__pycache__/other2text_instructions.cpython-39.pyc b/anygpt/src/m_utils/__pycache__/other2text_instructions.cpython-39.pyc

deleted file mode 100644

index 6b3ee8c..0000000

Binary files a/anygpt/src/m_utils/__pycache__/other2text_instructions.cpython-39.pyc and /dev/null differ

diff --git a/anygpt/src/m_utils/__pycache__/output.cpython-39.pyc b/anygpt/src/m_utils/__pycache__/output.cpython-39.pyc

deleted file mode 100644

index 3941908..0000000

Binary files a/anygpt/src/m_utils/__pycache__/output.cpython-39.pyc and /dev/null differ

diff --git a/anygpt/src/m_utils/__pycache__/prompter.cpython-311.pyc b/anygpt/src/m_utils/__pycache__/prompter.cpython-311.pyc

deleted file mode 100644

index d453b10..0000000

Binary files a/anygpt/src/m_utils/__pycache__/prompter.cpython-311.pyc and /dev/null differ

diff --git a/anygpt/src/m_utils/__pycache__/prompter.cpython-39.pyc b/anygpt/src/m_utils/__pycache__/prompter.cpython-39.pyc

deleted file mode 100644

index 7c9efbc..0000000

Binary files a/anygpt/src/m_utils/__pycache__/prompter.cpython-39.pyc and /dev/null differ

diff --git a/anygpt/src/m_utils/__pycache__/prompter_mmgpt.cpython-39.pyc b/anygpt/src/m_utils/__pycache__/prompter_mmgpt.cpython-39.pyc

deleted file mode 100644

index d1b523b..0000000

Binary files a/anygpt/src/m_utils/__pycache__/prompter_mmgpt.cpython-39.pyc and /dev/null differ

diff --git a/anygpt/src/m_utils/__pycache__/prompter_old.cpython-39.pyc b/anygpt/src/m_utils/__pycache__/prompter_old.cpython-39.pyc

deleted file mode 100644

index d0f19fa..0000000

Binary files a/anygpt/src/m_utils/__pycache__/prompter_old.cpython-39.pyc and /dev/null differ

diff --git a/anygpt/src/m_utils/__pycache__/read_modality.cpython-39.pyc b/anygpt/src/m_utils/__pycache__/read_modality.cpython-39.pyc

deleted file mode 100644

index e46cbcf..0000000

Binary files a/anygpt/src/m_utils/__pycache__/read_modality.cpython-39.pyc and /dev/null differ

diff --git a/anygpt/src/m_utils/__pycache__/templates.cpython-311.pyc b/anygpt/src/m_utils/__pycache__/templates.cpython-311.pyc

deleted file mode 100644

index 5b2317a..0000000

Binary files a/anygpt/src/m_utils/__pycache__/templates.cpython-311.pyc and /dev/null differ

diff --git a/anygpt/src/m_utils/__pycache__/templates.cpython-39.pyc b/anygpt/src/m_utils/__pycache__/templates.cpython-39.pyc

deleted file mode 100644

index 396e790..0000000

Binary files a/anygpt/src/m_utils/__pycache__/templates.cpython-39.pyc and /dev/null differ

diff --git a/anygpt/src/m_utils/__pycache__/templates_old.cpython-39.pyc b/anygpt/src/m_utils/__pycache__/templates_old.cpython-39.pyc

deleted file mode 100644

index dbb49f5..0000000

Binary files a/anygpt/src/m_utils/__pycache__/templates_old.cpython-39.pyc and /dev/null differ

diff --git a/anygpt/src/m_utils/__pycache__/text2other_instructions.cpython-39.pyc b/anygpt/src/m_utils/__pycache__/text2other_instructions.cpython-39.pyc

deleted file mode 100644

index f5228d0..0000000

Binary files a/anygpt/src/m_utils/__pycache__/text2other_instructions.cpython-39.pyc and /dev/null differ

diff --git a/anygpt/src/m_utils/__pycache__/transforms.cpython-39.pyc b/anygpt/src/m_utils/__pycache__/transforms.cpython-39.pyc

deleted file mode 100644

index 06ed9ea..0000000

Binary files a/anygpt/src/m_utils/__pycache__/transforms.cpython-39.pyc and /dev/null differ

diff --git a/docker-compose.yml b/docker-compose.yml

new file mode 100644

index 0000000..39c6464

--- /dev/null

+++ b/docker-compose.yml

@@ -0,0 +1,21 @@

+version: '3'

+services:

+ anygpt:

+ image: anygpt

+ build:

+ context: ./Docker

+ dockerfile: Dockerfile

+ volumes:

+ - ./:/app

+ - ./.cache:/root/.cache

+

+ env_file:

+ - .env

+ tty: true

+ deploy:

+ resources:

+ reservations:

+ devices:

+ - driver: nvidia

+ count: 1

+ capabilities: [ gpu ]

\ No newline at end of file

diff --git a/docs/README_JP.md b/docs/README_JP.md

new file mode 100644

index 0000000..0b7ff75

--- /dev/null

+++ b/docs/README_JP.md

@@ -0,0 +1,134 @@

+# AnyGPT: 離散シーケンスモデリングを用いた統一マルチモーダル大規模言語モデル

+

+

[](https://huggingface.co/datasets/fnlp/AnyInstruct)

+

+

[](https://huggingface.co/datasets/fnlp/AnyInstruct)

+

+

+

+

+

+

+

+ | [日本語](README_JP.md) | [English](../README.md) |

+

+

+

+

+## はじめに

+AnyGPTは、音声、テキスト、画像、音楽など様々なモダリティを統一的に処理するための、離散表現を利用した任意のモダリティ間の変換が可能なマルチモーダル言語モデルです。[ベースモデル](https://huggingface.co/fnlp/AnyGPT-base)は4つのモダリティを揃え、異なるモダリティとテキストの間の相互変換を可能にします。さらに、様々な生成モデルを基に、任意のモーダル間変換の指示を含む[AnyInstruct](https://huggingface.co/datasets/fnlp/AnyInstruct)データセットを構築しました。このデータセットで学習された[チャットモデル](https://huggingface.co/fnlp/AnyGPT-chat)は、自由にマルチモーダルデータを挿入できる自由なマルチモーダル会話を行うことができます。

+

+AnyGPTは、全てのモーダルデータを統一された離散表現に変換し、次のトークン予測タスクを用いて大規模言語モデル(LLM)上で統一学習を行う生成学習スキームを提案しています。「圧縮は知性である」という観点から、Tokenizerの品質が十分に高く、LLMのperplexity(PPL)が十分に低ければ、インターネット上の膨大なマルチモーダルデータを同じモデルに圧縮することが可能となり、純粋なテキストベースのLLMにはない能力が現れると考えられます。

+デモは[プロジェクトページ](https://junzhan2000.github.io/AnyGPT.github.io)で公開しています。

+

+## デモ例

+[](https://www.youtube.com/watch?v=oW3E3pIsaRg)

+

+## オープンソースチェックリスト

+- [x] ベースモデル

+- [ ] チャットモデル

+- [x] 推論コード

+- [x] 指示データセット

+

+## 推論

+

+### インストール

+

+```bash

+git clone https://github.com/OpenMOSS/AnyGPT.git

+cd AnyGPT

+conda create --name AnyGPT python=3.9

+conda activate AnyGPT

+pip install -r requirements.txt

+```

+

+### モデルの重み

+* AnyGPT-baseの重みは[fnlp/AnyGPT-base](https://huggingface.co/fnlp/AnyGPT-base)を確認してください。

+* AnyGPT-chatの重みは[fnlp/AnyGPT-chat](https://huggingface.co/fnlp/AnyGPT-chat)を確認してください。

+* SpeechTokenizerとSoundstormの重みは[fnlp/AnyGPT-speech-modules](https://huggingface.co/fnlp/AnyGPT-speech-modules)を確認してください。

+* SEED tokenizerの重みは[AILab-CVC/seed-tokenizer-2](https://huggingface.co/AILab-CVC/seed-tokenizer-2)を確認してください。

+

+SpeechTokenizerは音声のトークン化と再構成に使用され、Soundstormはパラ言語情報の補完を担当し、SEED-tokenizerは画像のトークン化に使用されます。

+

+画像の再構成に使用されるunCLIP SD-UNetのモデルの重みと、音楽のトークン化と再構成に使用されるEncodec-32kは自動的にダウンロードされます。

+

+### ベースモデルCLI推論

+

+[](https://colab.research.google.com/drive/13_gZPIRG6ShkAbI76-hC_etvfGhry0DZ?usp=sharing)

+

+```bash

+python anygpt/src/infer/cli_infer_base_model.py \

+--model-name-or-path "path/to/AnyGPT-7B-base" \

+--image-tokenizer-path models/seed-tokenizer-2/seed_quantizer.pt \

+--speech-tokenizer-path "path/to/model" \

+--speech-tokenizer-config "path/to/config" \

+--soundstorm-path "path/to/model" \

+--output-dir "infer_output/base"

+```

+

+例:

+```bash

+python anygpt/src/infer/cli_infer_base_model.py \

+--model-name-or-path models/anygpt/base \

+--image-tokenizer-path models/seed-tokenizer-2/seed_quantizer.pt \

+--speech-tokenizer-path models/speechtokenizer/ckpt.dev \

+--speech-tokenizer-config models/speechtokenizer/config.json \

+--soundstorm-path models/soundstorm/speechtokenizer_soundstorm_mls.pt \

+--output-dir "infer_output/base"

+```

+

+#### 対話

+ベースモデルは、テキストから画像、画像キャプション、自動音声認識(ASR)、ゼロショットテキスト音声合成(TTS)、テキストから音楽、音楽キャプションなど、様々なタスクを実行できます。

+

+特定の指示フォーマットに従って推論を行うことができます。

+

+* テキストから画像

+ * ```text|image|{キャプション}```

+ * 例:

+ ```text|image|カラフルなテントの下で異国の商品を売る露店が立ち並ぶ活気あふれる中世の市場の風景```

+* 画像キャプション

+ * ```image|text|{キャプション}```

+ * 例:

+ ```image|text|static/infer/image/cat.jpg```

+* TTS(ランダムな声)

+ * ```text|speech|{音声の内容}```

+ * 例:

+ ```text|speech|私はナッツの殻の中に閉じ込められていても、無限の空間の王者だと思えます。```

+* ゼロショットTTS

+ * ```text|speech|{音声の内容}|{声のプロンプト}```

+ * 例:

+ ```text|speech|私はナッツの殻の中に閉じ込められていても、無限の空間の王者だと思えます。|static/infer/speech/voice_prompt1.wav/voice_prompt3.wav```

+* ASR

+ * ```speech|text|{音声ファイルのパス}```

+ * 例: ```speech|text|AnyGPT/static/infer/speech/voice_prompt2.wav```

+* テキストから音楽

+ * ```text|music|{キャプション}```

+ * 例:

+ ```text|music|夢のような心地よい雰囲気を醸し出す独特の要素を持つインディーロックサウンド```

+* 音楽キャプション

+ * ```music|text|{音楽ファイルのパス}```

+ * 例: ```music|text|static/infer/music/features an indie rock sound with distinct element.wav```

+

+**注意**

+

+異なるタスクには、異なる言語モデルのデコード戦略を使用しています。画像、音声、音楽生成のデコード設定ファイルは、それぞれ```config/image_generate_config.json```、```config/speech_generate_config.json```、```config/music_generate_config.json```にあります。他のモダリティからテキストへのデコード設定ファイルは、```config/text_generate_config.json```にあります。パラメータを直接変更または追加して、デコード戦略を変更できます。

+

+データと学習リソースの制限により、モデルの生成はまだ不安定な場合があります。複数回生成するか、異なるデコード戦略を試してください。

+

+音声と音楽の応答は ```.wav```ファイルに保存され、画像の応答は```jpg```に保存されます。ファイル名はプロンプトと時間を連結したものになります。これらのファイルへのパスは応答に示されます。

+

+## 謝辞

+- [SpeechGPT](https://github.com/0nutation/SpeechGPT/tree/main/speechgpt), [Vicuna](https://github.com/lm-sys/FastChat): 構築したコードベース。

+- [SpeechTokenizer](https://github.com/ZhangXInFD/SpeechTokenizer)、[soundstorm-speechtokenizer](https://github.com/ZhangXInFD/soundstorm-speechtokenizer)、[SEED-tokenizer](https://github.com/AILab-CVC/SEED)の素晴らしい仕事に感謝します。

+

+## ライセンス

+`AnyGPT`は、[LLaMA2](https://huggingface.co/meta-llama/Llama-2-13b-chat-hf)の元の[ライセンス](https://ai.meta.com/resources/models-and-libraries/llama-downloads/)の下でリリースされています。

+

+## 引用

+AnyGPTとAnyInstructが研究やアプリケーションに役立つと感じた場合は、ぜひ引用してください。

+```

+@article{zhan2024anygpt,

+ title={AnyGPT: Unified Multimodal LLM with Discrete Sequence Modeling},

+ author={Zhan, Jun and Dai, Junqi and Ye, Jiasheng and Zhou, Yunhua and Zhang, Dong and Liu, Zhigeng and Zhang, Xin and Yuan, Ruibin and Zhang, Ge and Li, Linyang and others},

+ journal={arXiv preprint arXiv:2402.12226},

+ year={2024}

+}

+```

\ No newline at end of file

diff --git a/scripts/cli_infer_base_model2.sh b/scripts/cli_infer_base_model2.sh

new file mode 100644

index 0000000..9df4b0d

--- /dev/null

+++ b/scripts/cli_infer_base_model2.sh

@@ -0,0 +1,82 @@

+out_dir="infer_output/base"

+mkdir -p ${out_dir}

+

+python anygpt/src/infer/cli_infer_base_model.py \

+ --model-name-or-path models/anygpt/base \

+ --image-tokenizer-path models/seed-tokenizer-2/seed_quantizer.pt \

+ --speech-tokenizer-path models/speechtokenizer/ckpt.dev \

+ --speech-tokenizer-config models/speechtokenizer/config.json \

+ --soundstorm-path models/soundstorm/speechtokenizer_soundstorm_mls.pt \

+ --output-dir ${out_dir}

+

+

+

+# image|text|data/images/testset/aYQ2uNa.jpg

+# image|text|data/images/testset/image-20231121155007517.png

+# image|text|data/images/testset/gpt4 test images/4.png

+

+

+# text|image|a happy dog running on the grass

+# text|image|A group of students leaving the school

+# text|image|a happy boy playing with his dog

+# text|image|a sunset behind a mountain range

+# text|image|a beautiful lake, surrounded by mountains

+# text|image|a kitten curled up on the ground with its eyes closed behind a tree

+# text|image|An animated version of Iron Man

+# text|image|A Superman in flight.

+

+

+# speech|text|data/speech/testset2.jsonl

+

+# text|speech|to be or not to be, this is a question

+# text|speech|The primary colors are red, blue, and yellow. These colors are the building blocks of all other colors and are used to create the full spectrum of colors.

+# text|speech|Going to the moon is a challenging task that requires a lot of planning and resources. To do this, you will need to develop a spacecraft that can withstand the extreme conditions of the moon's atmosphere

+# text|speech|Going to the moon is a challenging task that requires a lot of planning and resources. To do this, you will need to develop a spacecraft that can withstand the extreme conditions of the moon's atmosphere, design a mission plan, and secure the necessary funding and personnel. Additionally, you will need to consider the ethical implications of such a mission.|data/speech/prompt/prompt3.wav

+# text|speech|Yes, I do know Stephen Curry.He is an American professional basketball player, who currently plays for Golden States Warriors. He is two-time NBA most valuable player and four-time NBA all star.|data/speech/prompt/prompt3.wav

+# text|speech|hello world, hello everyone

+# text|speech|hello world

+# text|speech|The capital of France is Paris. It is located in the northern part of the country, along the Seine River.

+# text|speech|hello world, hello everyone|/mnt/petrelfs/zhanjun.p/mllm/data/speech/prompt/prompt (1).wav

+# text|speech|Yes, I do know Stephen Curry.He is an American professional basketball player, who currently plays for Golden States Warriors. He is two-time NBA most valuable player and four-time NBA all star.|/mnt/petrelfs/zhanjun.p/mllm/data/speech/testset/mls-test-1.wav

+

+# text|speech|Going to the moon is a challenging task that requires a lot of planning and resources. To do this, you will need to develop a spacecraft that can withstand the extreme conditions of the moon's atmosphere|/mnt/petrelfs/zhanjun.p/mllm/data/speech/prompt/prompt3.wav

+# text|speech|The primary colors are red, blue, and yellow. These colors are the building blocks of all other colors and are used to create the full spectrum of colors.|/mnt/petrelfs/zhanjun.p/mllm/data/speech/prompt/LJ049-0185_24K.wav

+# text|speech|The capital of France is Paris. It is located in the northern part of the country, along the Seine River.|/mnt/petrelfs/zhanjun.p/mllm/data/speech/testset/vctk-1.wav

+# text|speech|hey guys, i am moss|/mnt/petrelfs/zhanjun.p/mllm/data/speech/prompt/moss-1.wav

+# text|speech|hey guys, i am moss. i am an artificial intelligence made by fudan university|/mnt/petrelfs/zhanjun.p/mllm/data/speech/prompt/prompt1.wav

+# text|speech|The primary colors are red, blue, and yellow. These colors are the building blocks of all other colors and are used to create the full spectrum of colors.|data/speech/test_case/2.wav

+

+# text|audio|a bird is chirping.

+# text|audio|A passionate drum set.

+# text|audio|A dog is barking.

+# text|audio|A man walking alone on a muddy road.

+# text|audio|The roar of a tiger.

+# text|audio|A passionate drum set.

+# text|audio|The waves crashed against the beach.

+# text|audio|A gunshot is being fired.

+

+# audio|text|/mnt/petrelfs/zhanjun.p/mllm/data/audio/沉重的咕噜声..._耳聆网_[声音ID:10492].mp3

+# audio|text|/mnt/petrelfs/zhanjun.p/mllm/data/audio/狮子咆哮_耳聆网_[声音ID:11539].wav

+# audio|text|/mnt/petrelfs/zhanjun.p/mllm/infer_output/audio_pretrain_4n_2ga_true/checkpoint-37000/a bird is chirping1203_160539.wav

+# audio|text|/mnt/petrelfs/zhanjun.p/mllm/infer_output/audio_pretrain_4n_2ga_true/checkpoint-37000/A dog is barking.1203_155916.wav

+

+# text|music|A passionate drum set.

+# text|music|a lilting piano melody.

+# text|music|Music with a slow and grand rhythm.

+# text|music|features an indie rock sound with distinct elements that evoke a dreamy, soothing atmosphere

+# text|music|Slow tempo, bass-and-drums-led reggae song. Sustained electric guitar. High-pitched bongos with ringing tones. Vocals are relaxed with a laid-back feel, very expressive.

+

+# sh scripts/infer_cli.sh visual_inter_speech_golden_fs/checkpoint-31000

+# sh scripts/infer_cli.sh visual_inter/checkpoint-14000

+# sh scripts/infer_cli.sh visual_inter_true/checkpoint-8000

+# sh scripts/infer_cli.sh visual_mix_template/checkpoint-5000

+# sh scripts/infer_cli.sh speech_pretrain/checkpoint-14000

+# sh scripts/infer_cli.sh visual_cc_sbu/checkpoint-4000

+# sh scripts/infer_cli.sh visual_laion_no_group/checkpoint-23000

+# sh scripts/infer_cli.sh visual_group_4nodes/checkpoint-51000

+# sh scripts/infer_cli.sh music_pretrain_4n_4ga/checkpoint-10000

+# sh scripts/infer_cli.sh audio_pretrain_4n_2ga/checkpoint-11000

+

+# sh scripts/infer_cli.sh music_pretrain_20s_8n_2ga/checkpoint-58000

+# sh scripts/infer_cli.sh audio_pretrain_4n_2ga_true/checkpoint-37000

+# sh scripts/infer_cli.sh audio_pretrain_4n_2ga_true/checkpoint-50000

\ No newline at end of file

diff --git a/scripts/download_models.py b/scripts/download_models.py

new file mode 100644

index 0000000..cb18d16

--- /dev/null

+++ b/scripts/download_models.py

@@ -0,0 +1,9 @@

+from huggingface_hub import snapshot_download

+

+def download_models():

+ snapshot_download(repo_id='fnlp/AnyGPT-base', local_dir='models/anygpt/base')

+ snapshot_download(repo_id='AILab-CVC/seed-tokenizer-2', local_dir='models/seed-tokenizer-2')

+ snapshot_download(repo_id='fnlp/AnyGPT-speech-modules', local_dir='models')

+

+if __name__ == '__main__':

+ download_models()

\ No newline at end of file

diff --git a/seed2/__pycache__/__init__.cpython-311.pyc b/seed2/__pycache__/__init__.cpython-311.pyc

deleted file mode 100644

index 6f2066d..0000000

Binary files a/seed2/__pycache__/__init__.cpython-311.pyc and /dev/null differ

diff --git a/seed2/__pycache__/__init__.cpython-39.pyc b/seed2/__pycache__/__init__.cpython-39.pyc

deleted file mode 100644

index 2ea1c80..0000000

Binary files a/seed2/__pycache__/__init__.cpython-39.pyc and /dev/null differ

diff --git a/seed2/__pycache__/llama_xformer.cpython-39.pyc b/seed2/__pycache__/llama_xformer.cpython-39.pyc

deleted file mode 100644

index ecab766..0000000

Binary files a/seed2/__pycache__/llama_xformer.cpython-39.pyc and /dev/null differ

diff --git a/seed2/__pycache__/model_tools.cpython-39.pyc b/seed2/__pycache__/model_tools.cpython-39.pyc

deleted file mode 100644

index 5b446d7..0000000

Binary files a/seed2/__pycache__/model_tools.cpython-39.pyc and /dev/null differ

diff --git a/seed2/__pycache__/pipeline_stable_unclip_img2img.cpython-311.pyc b/seed2/__pycache__/pipeline_stable_unclip_img2img.cpython-311.pyc

deleted file mode 100644

index 88d42f2..0000000

Binary files a/seed2/__pycache__/pipeline_stable_unclip_img2img.cpython-311.pyc and /dev/null differ

diff --git a/seed2/__pycache__/pipeline_stable_unclip_img2img.cpython-39.pyc b/seed2/__pycache__/pipeline_stable_unclip_img2img.cpython-39.pyc

deleted file mode 100644

index a7714e8..0000000

Binary files a/seed2/__pycache__/pipeline_stable_unclip_img2img.cpython-39.pyc and /dev/null differ

diff --git a/seed2/__pycache__/seed_llama_tokenizer.cpython-311.pyc b/seed2/__pycache__/seed_llama_tokenizer.cpython-311.pyc

deleted file mode 100644

index ede0dfe..0000000

Binary files a/seed2/__pycache__/seed_llama_tokenizer.cpython-311.pyc and /dev/null differ

diff --git a/seed2/__pycache__/seed_llama_tokenizer.cpython-39.pyc b/seed2/__pycache__/seed_llama_tokenizer.cpython-39.pyc

deleted file mode 100644

index 8c84d9e..0000000

Binary files a/seed2/__pycache__/seed_llama_tokenizer.cpython-39.pyc and /dev/null differ

diff --git a/seed2/__pycache__/transforms.cpython-311.pyc b/seed2/__pycache__/transforms.cpython-311.pyc

deleted file mode 100644

index 9e57ba7..0000000

Binary files a/seed2/__pycache__/transforms.cpython-311.pyc and /dev/null differ

diff --git a/seed2/__pycache__/transforms.cpython-39.pyc b/seed2/__pycache__/transforms.cpython-39.pyc

deleted file mode 100644

index d382609..0000000

Binary files a/seed2/__pycache__/transforms.cpython-39.pyc and /dev/null differ

diff --git a/seed2/seed_qformer/__pycache__/blip2.cpython-311.pyc b/seed2/seed_qformer/__pycache__/blip2.cpython-311.pyc

deleted file mode 100644

index a63b3a8..0000000

Binary files a/seed2/seed_qformer/__pycache__/blip2.cpython-311.pyc and /dev/null differ

diff --git a/seed2/seed_qformer/__pycache__/blip2.cpython-39.pyc b/seed2/seed_qformer/__pycache__/blip2.cpython-39.pyc

deleted file mode 100644

index ee7fee8..0000000

Binary files a/seed2/seed_qformer/__pycache__/blip2.cpython-39.pyc and /dev/null differ

diff --git a/seed2/seed_qformer/__pycache__/clip_vit.cpython-311.pyc b/seed2/seed_qformer/__pycache__/clip_vit.cpython-311.pyc

deleted file mode 100644

index be46ef8..0000000

Binary files a/seed2/seed_qformer/__pycache__/clip_vit.cpython-311.pyc and /dev/null differ

diff --git a/seed2/seed_qformer/__pycache__/clip_vit.cpython-39.pyc b/seed2/seed_qformer/__pycache__/clip_vit.cpython-39.pyc

deleted file mode 100644

index 7896670..0000000

Binary files a/seed2/seed_qformer/__pycache__/clip_vit.cpython-39.pyc and /dev/null differ

diff --git a/seed2/seed_qformer/__pycache__/eva_vit.cpython-311.pyc b/seed2/seed_qformer/__pycache__/eva_vit.cpython-311.pyc

deleted file mode 100644

index a18c00a..0000000

Binary files a/seed2/seed_qformer/__pycache__/eva_vit.cpython-311.pyc and /dev/null differ

diff --git a/seed2/seed_qformer/__pycache__/eva_vit.cpython-39.pyc b/seed2/seed_qformer/__pycache__/eva_vit.cpython-39.pyc

deleted file mode 100644

index ce8d519..0000000

Binary files a/seed2/seed_qformer/__pycache__/eva_vit.cpython-39.pyc and /dev/null differ

diff --git a/seed2/seed_qformer/__pycache__/qformer_causual.cpython-311.pyc b/seed2/seed_qformer/__pycache__/qformer_causual.cpython-311.pyc

deleted file mode 100644

index 63f9d43..0000000

Binary files a/seed2/seed_qformer/__pycache__/qformer_causual.cpython-311.pyc and /dev/null differ

diff --git a/seed2/seed_qformer/__pycache__/qformer_causual.cpython-39.pyc b/seed2/seed_qformer/__pycache__/qformer_causual.cpython-39.pyc

deleted file mode 100644

index 52d8596..0000000

Binary files a/seed2/seed_qformer/__pycache__/qformer_causual.cpython-39.pyc and /dev/null differ

diff --git a/seed2/seed_qformer/__pycache__/qformer_quantizer.cpython-311.pyc b/seed2/seed_qformer/__pycache__/qformer_quantizer.cpython-311.pyc

deleted file mode 100644

index d658aa5..0000000

Binary files a/seed2/seed_qformer/__pycache__/qformer_quantizer.cpython-311.pyc and /dev/null differ

diff --git a/seed2/seed_qformer/__pycache__/qformer_quantizer.cpython-39.pyc b/seed2/seed_qformer/__pycache__/qformer_quantizer.cpython-39.pyc

deleted file mode 100644

index 9cc89fb..0000000

Binary files a/seed2/seed_qformer/__pycache__/qformer_quantizer.cpython-39.pyc and /dev/null differ

diff --git a/seed2/seed_qformer/__pycache__/utils.cpython-311.pyc b/seed2/seed_qformer/__pycache__/utils.cpython-311.pyc

deleted file mode 100644

index 6c9b61f..0000000

Binary files a/seed2/seed_qformer/__pycache__/utils.cpython-311.pyc and /dev/null differ

diff --git a/seed2/seed_qformer/__pycache__/utils.cpython-39.pyc b/seed2/seed_qformer/__pycache__/utils.cpython-39.pyc

deleted file mode 100644

index d6eba02..0000000

Binary files a/seed2/seed_qformer/__pycache__/utils.cpython-39.pyc and /dev/null differ

diff --git a/seed2/seed_qformer/__pycache__/vit.cpython-311.pyc b/seed2/seed_qformer/__pycache__/vit.cpython-311.pyc

deleted file mode 100644

index 3ea1f20..0000000

Binary files a/seed2/seed_qformer/__pycache__/vit.cpython-311.pyc and /dev/null differ

diff --git a/seed2/seed_qformer/__pycache__/vit.cpython-39.pyc b/seed2/seed_qformer/__pycache__/vit.cpython-39.pyc

deleted file mode 100644

index 39ac18b..0000000

Binary files a/seed2/seed_qformer/__pycache__/vit.cpython-39.pyc and /dev/null differ

diff --git a/seed2/seed_qformer/blip2.py b/seed2/seed_qformer/blip2.py

index a438696..eed787e 100644

--- a/seed2/seed_qformer/blip2.py

+++ b/seed2/seed_qformer/blip2.py

@@ -35,7 +35,7 @@ def device(self):

@classmethod

def init_tokenizer(cls, truncation_side="right"):

- tokenizer = BertTokenizer.from_pretrained("/mnt/petrelfs/zhanjun.p/mllm/models/bert-base-uncased", truncation_side=truncation_side)

+ tokenizer = BertTokenizer.from_pretrained("google-bert/bert-base-uncased", truncation_side=truncation_side)

tokenizer.add_special_tokens({"bos_token": "[DEC]"})

return tokenizer

@@ -51,13 +51,13 @@ def maybe_autocast(self, dtype=torch.float16):

@classmethod

def init_Qformer(cls, num_query_token, vision_width, cross_attention_freq=2):

- encoder_config = BertConfig.from_pretrained("/mnt/petrelfs/zhanjun.p/mllm/models/bert-base-uncased", )

+ encoder_config = BertConfig.from_pretrained("google-bert/bert-base-uncased", )

encoder_config.encoder_width = vision_width

# insert cross-attention layer every other block

encoder_config.add_cross_attention = True

encoder_config.cross_attention_freq = cross_attention_freq

encoder_config.query_length = num_query_token

- Qformer = BertLMHeadModel.from_pretrained("/mnt/petrelfs/zhanjun.p/mllm/models/bert-base-uncased", config=encoder_config)

+ Qformer = BertLMHeadModel.from_pretrained("google-bert/bert-base-uncased", config=encoder_config)

query_tokens = nn.Parameter(torch.zeros(1, num_query_token, encoder_config.hidden_size))

query_tokens.data.normal_(mean=0.0, std=encoder_config.initializer_range)

return Qformer, query_tokens

@@ -183,4 +183,3 @@ def forward(self, x: torch.Tensor):

ret = super().forward(x.type(torch.float32))

return ret.type(orig_type)

-

diff --git a/soundstorm_speechtokenizer/__pycache__/__init__.cpython-310.pyc b/soundstorm_speechtokenizer/__pycache__/__init__.cpython-310.pyc

deleted file mode 100644

index 4dfeea7..0000000

Binary files a/soundstorm_speechtokenizer/__pycache__/__init__.cpython-310.pyc and /dev/null differ

diff --git a/soundstorm_speechtokenizer/__pycache__/__init__.cpython-39.pyc b/soundstorm_speechtokenizer/__pycache__/__init__.cpython-39.pyc

deleted file mode 100644

index 0d2dc45..0000000

Binary files a/soundstorm_speechtokenizer/__pycache__/__init__.cpython-39.pyc and /dev/null differ

diff --git a/soundstorm_speechtokenizer/__pycache__/attend.cpython-310.pyc b/soundstorm_speechtokenizer/__pycache__/attend.cpython-310.pyc

deleted file mode 100644

index 3c63d38..0000000

Binary files a/soundstorm_speechtokenizer/__pycache__/attend.cpython-310.pyc and /dev/null differ

diff --git a/soundstorm_speechtokenizer/__pycache__/attend.cpython-39.pyc b/soundstorm_speechtokenizer/__pycache__/attend.cpython-39.pyc

deleted file mode 100644

index 17c2d7f..0000000

Binary files a/soundstorm_speechtokenizer/__pycache__/attend.cpython-39.pyc and /dev/null differ

diff --git a/soundstorm_speechtokenizer/__pycache__/dataset.cpython-310.pyc b/soundstorm_speechtokenizer/__pycache__/dataset.cpython-310.pyc

deleted file mode 100644

index b9ef561..0000000

Binary files a/soundstorm_speechtokenizer/__pycache__/dataset.cpython-310.pyc and /dev/null differ

diff --git a/soundstorm_speechtokenizer/__pycache__/dataset.cpython-39.pyc b/soundstorm_speechtokenizer/__pycache__/dataset.cpython-39.pyc

deleted file mode 100644

index 62f1efd..0000000

Binary files a/soundstorm_speechtokenizer/__pycache__/dataset.cpython-39.pyc and /dev/null differ

diff --git a/soundstorm_speechtokenizer/__pycache__/optimizer.cpython-310.pyc b/soundstorm_speechtokenizer/__pycache__/optimizer.cpython-310.pyc

deleted file mode 100644

index ce7ce86..0000000

Binary files a/soundstorm_speechtokenizer/__pycache__/optimizer.cpython-310.pyc and /dev/null differ

diff --git a/soundstorm_speechtokenizer/__pycache__/optimizer.cpython-39.pyc b/soundstorm_speechtokenizer/__pycache__/optimizer.cpython-39.pyc

deleted file mode 100644

index 07217e1..0000000

Binary files a/soundstorm_speechtokenizer/__pycache__/optimizer.cpython-39.pyc and /dev/null differ

diff --git a/soundstorm_speechtokenizer/__pycache__/soundstorm.cpython-310.pyc b/soundstorm_speechtokenizer/__pycache__/soundstorm.cpython-310.pyc

deleted file mode 100644

index 2497df7..0000000

Binary files a/soundstorm_speechtokenizer/__pycache__/soundstorm.cpython-310.pyc and /dev/null differ

diff --git a/soundstorm_speechtokenizer/__pycache__/soundstorm.cpython-39.pyc b/soundstorm_speechtokenizer/__pycache__/soundstorm.cpython-39.pyc

deleted file mode 100644

index 6f16a2d..0000000

Binary files a/soundstorm_speechtokenizer/__pycache__/soundstorm.cpython-39.pyc and /dev/null differ

diff --git a/soundstorm_speechtokenizer/__pycache__/tracking.cpython-310.pyc b/soundstorm_speechtokenizer/__pycache__/tracking.cpython-310.pyc

deleted file mode 100644

index 55b95ed..0000000

Binary files a/soundstorm_speechtokenizer/__pycache__/tracking.cpython-310.pyc and /dev/null differ

diff --git a/soundstorm_speechtokenizer/__pycache__/tracking.cpython-39.pyc b/soundstorm_speechtokenizer/__pycache__/tracking.cpython-39.pyc

deleted file mode 100644

index 504596c..0000000

Binary files a/soundstorm_speechtokenizer/__pycache__/tracking.cpython-39.pyc and /dev/null differ

diff --git a/soundstorm_speechtokenizer/__pycache__/trainer.cpython-310.pyc b/soundstorm_speechtokenizer/__pycache__/trainer.cpython-310.pyc

deleted file mode 100644

index 743975a..0000000

Binary files a/soundstorm_speechtokenizer/__pycache__/trainer.cpython-310.pyc and /dev/null differ

diff --git a/soundstorm_speechtokenizer/__pycache__/trainer.cpython-39.pyc b/soundstorm_speechtokenizer/__pycache__/trainer.cpython-39.pyc

deleted file mode 100644

index c74287e..0000000

Binary files a/soundstorm_speechtokenizer/__pycache__/trainer.cpython-39.pyc and /dev/null differ

[](https://huggingface.co/datasets/fnlp/AnyInstruct)

[](https://huggingface.co/datasets/fnlp/AnyInstruct)

[](https://huggingface.co/datasets/fnlp/AnyInstruct)

+

+

[](https://huggingface.co/datasets/fnlp/AnyInstruct)

+

+