Description

tl;dr: consistent hashing is the only way to load balance leases in misk. Although it is a well-established way to partition databases, it is, counterintuitively, not the best way to load balance event consumers, where the total number of leases is small and each consumer is stateless.

Our team operates a service that consumes an event topic. This topic has 32 partitions and emits 400+ events per second. At some point last week, the service was autoscaled to 20 pods.

Most pods were doing work. But according to the metrics, the load on these pods was not evenly distributed: 4 pods were idle, not consuming any events, while 2 pods were busy, consuming a lot of events.

After doing some math, I found this:

- Assume the consistent hash ring assigns each partition to a specific pod (let’s call it pod A) with probability 1/20 (given 20 pods), independently of the other partitions.

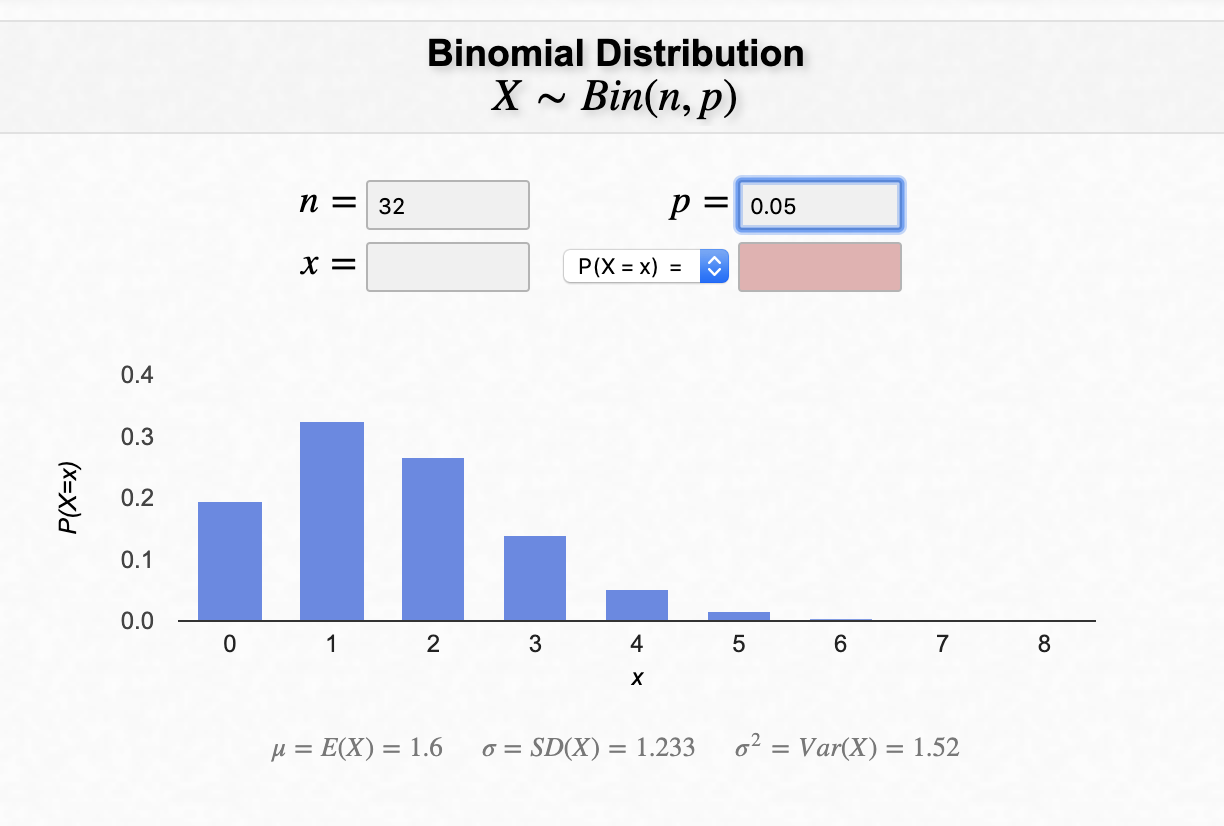

- Given 32 partitions, the number of partitions pod A receives follows a binomial distribution:

  - the probability that pod A receives 0 partitions is 0.19371

  - the probability that pod A receives 1 partition is 0.32625

  - the probability that pod A receives 2 partitions is 0.26615

  - the probability that pod A receives 3 partitions is 0.14008

  - the probability that pod A receives 4 partitions is 0.05345
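The figures above can be reproduced with a short script. This is a minimal sketch that assumes each of the 32 partitions lands on pod A independently with probability 1/20; the names `binomial_pmf`, `PARTITIONS` and `PODS` are made up for illustration and are not part of misk.

```python
from math import comb


def binomial_pmf(k: int, n: int, p: float) -> float:
    """Probability that exactly k of n independent trials succeed."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


PARTITIONS = 32  # partitions in the topic
PODS = 20        # pods after autoscaling

for k in range(5):
    prob = binomial_pmf(k, PARTITIONS, 1 / PODS)
    # PODS * prob is the expected number of pods holding exactly k partitions.
    print(f"P(pod A holds {k} partitions) = {prob:.5f}, expected pods = {PODS * prob:.2f}")
```

Multiplying each probability by the 20 pods gives the expected number of pods holding that many partitions, which is how the per-pod probabilities relate to the fleet-wide metrics.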

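This distribution is consistent with the production metrics and is not ideal: with 20 pods, we expect about 4 pods to be idle (consuming 0 partitions) and about 4 pods to take roughly twice the average load (consuming 3 to 4 partitions, against an average of 1.6 per pod).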

As we increase the number of partitions, the distribution becomes more uniform:

[Figure: number of partitions = 10000]

[Figure: number of partitions = 100000]

It is common for databases to have more than 10^6 rows, but it is not practical for a topic to have that many partitions.
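One way to quantify how the imbalance shrinks with more partitions is the coefficient of variation (standard deviation divided by mean) of the per-pod partition count. The sketch below uses the same binomial model as above, which is an idealization of the hash ring, and the helper name `load_cv` is hypothetical.

```python
from math import sqrt


def load_cv(partitions: int, pods: int) -> float:
    """Coefficient of variation of one pod's partition count.

    Assumes a binomial model: mean = n * p, variance = n * p * (1 - p),
    so std / mean = sqrt((1 - p) / (n * p)) with p = 1 / pods.
    """
    p = 1 / pods
    return sqrt((1 - p) / (partitions * p))


for n in (32, 10_000, 100_000):
    print(f"partitions = {n:>6}: per-pod load varies by about {load_cv(n, 20):.1%} of the mean")
```

Under this model the relative spread falls with the square root of the partition count, so it takes thousands of partitions before the per-pod load is within a few percent of the mean.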

There are other ways to assign partitions to worker pods that yield more consistent and uniform results. For example:

- the Kafka client provides a `RoundRobinAssignor` and a `RangeAssignor`

- the Kinesis client uses something similar to `RangeAssignor`
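To illustrate why these strategies balance better, here is a minimal sketch of the round-robin and range ideas applied to 32 partitions and 20 pods. It is not Kafka's or misk's actual code; the function names and the `pod-NN` identifiers are assumptions made for the example.

```python
from collections import Counter


def round_robin_assign(partitions: list[int], pods: list[str]) -> dict[str, list[int]]:
    """Deal partitions to pods one at a time, in sorted order."""
    ordered = sorted(pods)
    assignment: dict[str, list[int]] = {pod: [] for pod in ordered}
    for i, partition in enumerate(sorted(partitions)):
        assignment[ordered[i % len(ordered)]].append(partition)
    return assignment


def range_assign(partitions: list[int], pods: list[str]) -> dict[str, list[int]]:
    """Give each pod a contiguous block; the first few pods absorb the remainder."""
    ordered = sorted(pods)
    parts = sorted(partitions)
    base, extra = divmod(len(parts), len(ordered))
    assignment: dict[str, list[int]] = {}
    start = 0
    for idx, pod in enumerate(ordered):
        size = base + (1 if idx < extra else 0)
        assignment[pod] = parts[start:start + size]
        start += size
    return assignment


pods = [f"pod-{i:02d}" for i in range(20)]  # hypothetical pod names
partitions = list(range(32))

for name, assign in (("round robin", round_robin_assign), ("range", range_assign)):
    # Count how many pods hold each number of partitions.
    sizes = Counter(len(held) for held in assign(partitions, pods).values())
    print(name, dict(sorted(sizes.items())))
```

With either strategy every pod holds 1 or 2 partitions (8 pods hold 1 and 12 pods hold 2), so no pod is idle and no pod carries more than one partition above any other. The trade-off is that assignments can move when membership changes, which matters less when lease holders are stateless.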

Conclusion: we should provide similar options in misk for stateless lease holders.