
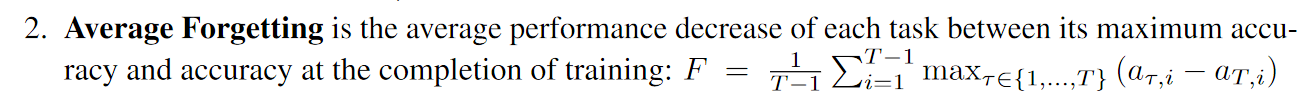

Hi, I found a difference between your code and the way the Average Forgetting is calculated in the paper.

- paper

Average Forgetting:

- code

line 113 in main.py:

    kfgt = []
    # memorize current task acc
    results['knn-cls-each-acc'].append(knn_acc_list[-1])
    results['knn-cls-max-acc'].append(knn_acc_list[-1])
    for j in range(t):
        if knn_acc_list[j] > results['knn-cls-max-acc'][j]:
            results['knn-cls-max-acc'][j] = knn_acc_list[j]
        kfgt.append(results['knn-cls-each-acc'][j] - knn_acc_list[j])
    results['knn-cls-acc'].append(np.mean(knn_acc_list))
    results['knn-cls-fgt'].append(np.mean(kfgt))

Here the forgetting on task j is measured against the accuracy recorded right after task j was learned (`knn-cls-each-acc`), not against the maximum accuracy (`knn-cls-max-acc`), which is tracked but never used. To compute the Average Forgetting as defined in the paper, set `eval.type` to "all" and change the code above to:

    from itertools import zip_longest

    kfgt = []
    # store the full accuracy list from this evaluation (needs eval.type "all")
    results['knn-cls-each-acc'].append(knn_acc_list)
    # memorize max accuracy per task over all evaluations so far
    results['knn-cls-max-acc'] = [max(item) for item in zip_longest(*results['knn-cls-each-acc'], fillvalue=0)][:t]
    for j in range(t):
        # forgetting on task j: best accuracy seen so far minus current accuracy
        kfgt.append(results['knn-cls-max-acc'][j] - knn_acc_list[j])
    results['knn-cls-acc'].append(np.mean(knn_acc_list))
    # at t = 0 there are no previous tasks, so there is nothing to average
    if len(kfgt) > 0:
        results['knn-cls-fgt'].append(np.mean(kfgt))
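To make the difference concrete, here is a minimal standalone sketch that computes the metric offline from a full accuracy matrix. It assumes the usual definition, where the forgetting on task j after training on task t is the best accuracy ever reached on task j minus its current accuracy, averaged over all earlier tasks j < t. As in the fix, the maximum includes the current evaluation, so per-task forgetting is never negative. The function name `average_forgetting`, the matrix layout, and the numbers are hypothetical and not taken from the repository or the paper.

```python
import numpy as np


def average_forgetting(acc):
    """Return the average forgetting after each training step t >= 1.

    acc[l][j] is the accuracy on task j measured after training on task l
    (hypothetical layout; only entries with j <= l are read).
    """
    acc = np.asarray(acc, dtype=float)
    num_tasks = acc.shape[0]
    forgetting = []
    for t in range(1, num_tasks):
        per_task = []
        for j in range(t):
            # Best accuracy reached on task j, from the step it was learned up to now.
            best = acc[j:t + 1, j].max()
            per_task.append(best - acc[t, j])
        forgetting.append(float(np.mean(per_task)))
    return forgetting


# Toy accuracies for three tasks (made-up numbers for illustration only).
acc = [
    [90.0, 0.0, 0.0],
    [92.0, 85.0, 0.0],
    [85.0, 75.0, 88.0],
]
print(average_forgetting(acc))  # [0.0, 8.5]
```

With these toy numbers, subtracting from the accuracy recorded right after each task was learned would instead give -2.0 and 7.5, because the peak of 92.0 on the first task is ignored.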
