Replies: 10 comments


@akamor Have you modified the


So I deleted my old db-init and install-worker scripts, because I had modified them a bit, started fresh, and made the change you suggested above. Things seem to be working a little better now. The program still fails when the first UDF is called, but the exception is now quite different; I've included it below. FWIW, this same code runs on AWS EMR and on regular Spark 3 clusters with no issues, so I guess either my setup is still not correct or there is a bug. Please advise.

[2020-12-08T22:20:28.4531790Z] [1208-143321-soon538-10-175-245-121] [Error] [JvmBridge] org.apache.spark.SparkException: Job aborted. Driver stacktrace:


@Niharikadutta Ah, I also see from the cluster init logs that the install-worker.sh script is exiting with errors:

tar: Microsoft.Spark.Worker-1.0.0/Apache.Arrow.dll: Cannot change ownership to uid 197108, gid 197121: Invalid argument

The "Cannot change ownership" line above repeats for each file in the tarball. If the tar command fails, the chmod and ln -sf commands in install-worker will not get executed. Perhaps that is the problem?
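The thread does not show install-worker.sh itself. Going only by the description above (untar the worker, then chmod and ln -sf), the sketch below reproduces that sequence in Python so that the uid/gid stored in the archive are never applied; a filesystem that rejects `chown` then cannot stop the chmod and symlink steps. `install_worker` and its parameters are hypothetical names. In a shell script, GNU tar's `--no-same-owner` option serves a similar purpose, since tar run as root otherwise tries to restore archived ownership.

```python
import stat
import tarfile
from pathlib import Path

WORKER_NAME = "Microsoft.Spark.Worker"


def install_worker(tarball: str, install_dir: str, link_dir: str) -> Path:
    """Hypothetical helper mirroring the tar / chmod / ln -sf steps.

    Archived owner/group IDs are not applied, so a filesystem that rejects
    chown cannot abort the install before the chmod and symlink steps run.
    """
    dest = Path(install_dir)
    dest.mkdir(parents=True, exist_ok=True)

    # errorlevel=1: ownership-change failures are non-fatal (not raised).
    with tarfile.open(tarball, "r:gz", errorlevel=1) as tar:
        if hasattr(tarfile, "data_filter"):
            # The "data" filter discards uid/gid/uname/gname before extraction.
            tar.extractall(dest, filter="data")
        else:
            tar.extractall(dest)

    # Locate the worker executable anywhere in the extracted tree.
    candidates = [p for p in dest.rglob(WORKER_NAME) if p.is_file()]
    if not candidates:
        raise FileNotFoundError(f"{WORKER_NAME} not found under {dest}")
    worker = candidates[0]

    # Equivalent of `chmod +x` on the worker binary.
    worker.chmod(worker.stat().st_mode | stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH)

    # Equivalent of `ln -sf <worker> <link_dir>/Microsoft.Spark.Worker`.
    link = Path(link_dir) / WORKER_NAME
    link.parent.mkdir(parents=True, exist_ok=True)
    if link.is_symlink() or link.exists():
        link.unlink()
    link.symlink_to(worker.resolve())
    return link
```

Running it twice is safe: the existing link is replaced, which matches `ln -sf` semantics.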


@akamor Based on the following error in the stacktrace you provided, it looks like the environment variable used to find the assemblies where your UDF is defined is not set:

Could you please set the value of env variable

Regarding the error about changing ownership, I am not sure I have encountered that before. Can you first make the above change and try submitting a job again?


Setting DOTNET_ASSEMBLY_SEARCH_PATHS moves things further along, but I get a new error:

System.IO.FileNotFoundException: Could not load file or assembly 'Allos.Core, Version=0.0.0.0, Culture=neutral, PublicKeyToken=null'. The system cannot find the file specified.

Allos.Core is an assembly in our project. I've also set DOTNET_WORKER_DIRECTORY=/usr/local/bin/Microsoft.Spark.Worker, but that does not help. Is there anything else that must be set for UDFs in Databricks?
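The FileNotFoundException means the worker found no `Allos.Core` assembly in the directories it searched. To check ahead of time which of a UDF's assemblies would be missing, here is a hypothetical Python diagnostic. It assumes DOTNET_ASSEMBLY_SEARCH_PATHS is a comma-separated list of directories and that each assembly lives in a file named `<AssemblyName>.dll`; it is an aid for spotting gaps, not the worker's actual resolver, and both function names are illustrative.

```python
import os
from pathlib import Path
from typing import Iterable, List, Mapping, Optional


def search_paths(env: Optional[Mapping[str, str]] = None) -> List[Path]:
    """Split DOTNET_ASSEMBLY_SEARCH_PATHS (assumed comma-separated) into directories."""
    env = os.environ if env is None else env
    raw = env.get("DOTNET_ASSEMBLY_SEARCH_PATHS", "")
    return [Path(p.strip()) for p in raw.split(",") if p.strip()]


def missing_assemblies(names: Iterable[str], dirs: List[Path]) -> List[str]:
    """Return the assembly names with no matching <name>.dll in any directory."""
    return [n for n in names if not any((d / f"{n}.dll").is_file() for d in dirs)]
```

In the situation described here, `missing_assemblies(["Allos.Spark", "Allos.Core"], search_paths())` would be expected to report `Allos.Core`, because only `Allos.Spark.dll` had been uploaded.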


Ah, I see. I need to add Allos.Core.dll to /dbfs/app/dependencies as well. The issue is that we have hundreds of DLLs in our publish directory. Must I copy all of them to /dbfs/app/dependencies?
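If copying turns out to be the route, it does not have to be manual. A minimal Python sketch, assuming a flat publish directory of `.dll` files; `sync_dlls` is a hypothetical name, and on Databricks the destination would be the /dbfs/app/dependencies directory mentioned above. It copies file contents only (`shutil.copyfile`), so it does not try to carry permissions or timestamps onto the target filesystem.

```python
import shutil
from pathlib import Path
from typing import List


def sync_dlls(publish_dir: str, deps_dir: str) -> List[str]:
    """Copy every top-level *.dll from publish_dir into deps_dir.

    Returns the names of the copied files in sorted order.
    """
    src, dst = Path(publish_dir), Path(deps_dir)
    dst.mkdir(parents=True, exist_ok=True)
    copied = []
    for dll in sorted(src.glob("*.dll")):
        # Content-only copy; existing files with the same name are overwritten.
        shutil.copyfile(dll, dst / dll.name)
        copied.append(dll.name)
    return copied
```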


@akamor You can use the


@Niharikadutta I'll give the zip command a try. AFAIK, --files and --archives aren't suitable for us, as we are using the Set JAR option on Databricks. When we run our offering using spark-submit, we do use --archives, which is why we were unsure what to do in this situation.
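The exact zip command mentioned above is not spelled out in this thread. As an illustration only, bundling an entire publish directory into one archive takes a few lines of Python; `zip_publish_dir` and the paths are hypothetical, and how the archive is unpacked on the cluster (for example by an init script) is not covered here.

```python
import zipfile
from pathlib import Path


def zip_publish_dir(publish_dir: str, zip_path: str) -> int:
    """Zip every file under publish_dir, storing paths relative to it.

    zip_path should lie outside publish_dir. Returns the number of files added.
    """
    root = Path(publish_dir)
    count = 0
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for path in sorted(root.rglob("*")):
            if path.is_file():
                # Relative, forward-slash names keep subfolders such as runtimes/.
                zf.write(path, arcname=path.relative_to(root).as_posix())
                count += 1
    return count
```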


Thanks @Niharikadutta! We were able to resolve this by packaging up additional items before sending them to Databricks and using the env vars you suggested.


@akamor Great! Good to know this was resolved. Please feel free to close the issue if there is nothing else. Thanks!

Beta Was this translation helpful? Give feedback.

Uh oh!

There was an error while loading. Please reload this page.

-

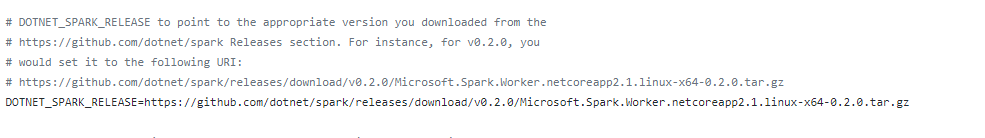

Describe the bug

When running a program that includes UDFs, I get the following exception:

Caused by: org.apache.spark.SparkException: Job aborted due to stage failure: Task 0 in stage 38.0 failed 4 times, most recent failure: Lost task 0.3 in stage 38.0 (TID 40, 10.175.227.18, executor 0): java.io.IOException: Cannot run program "Microsoft.Spark.Worker": error=2, No such file or directory

This exception occurs as soon as a UDF is called. Program execution is normal up until that point.
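`error=2` is ENOENT: the executor tried to launch `Microsoft.Spark.Worker` and found no executable by that name where it looked. A quick way to approximate that lookup on a node is sketched below in Python. It assumes the worker is taken from DOTNET_WORKER_DIRECTORY when that variable is set and from PATH otherwise; treat that order as an assumption rather than documented behavior. `find_worker` is a hypothetical name.

```python
import os
import shutil
from typing import Mapping, Optional

WORKER = "Microsoft.Spark.Worker"


def find_worker(env: Optional[Mapping[str, str]] = None) -> Optional[str]:
    """Return the executable worker path that would be found, or None."""
    env = os.environ if env is None else env
    worker_dir = env.get("DOTNET_WORKER_DIRECTORY")
    if worker_dir:
        # Assumed: an explicit worker directory takes precedence over PATH.
        return shutil.which(WORKER, path=worker_dir)
    # shutil.which only returns files that exist AND are executable.
    return shutil.which(WORKER, path=env.get("PATH", os.defpath))
```

Because `shutil.which` requires the execute bit, a worker file that was extracted but never `chmod`-ed is reported as missing, which matches the failure mode discussed in the replies.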

To Reproduce

Steps to reproduce the behavior:

1. Upload db-init.sh and install-worker.sh to /dbfs/spark-dotnet.
2. Upload Microsoft.Spark.Worker.netcoreapp3.1.linux-x64-1.0.0.tar.gz to /dbfs/spark-dotnet.
3. Modify db-init.sh to include dependencies by uncommenting the bottom section, re-upload db-init.sh, and restart the cluster.
4. Upload my program DLL (Allos.Spark.dll) to /dbfs/app/dependencies.
5. Create a Set JAR job as instructed.
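Before creating the job in step 5, it can help to confirm that steps 1-4 left the files where the init script expects them. A hypothetical Python check, meant to run on the cluster where DBFS is mounted at /dbfs; the file list is copied from the steps above, and `missing_uploads` is an illustrative name.

```python
from pathlib import Path
from typing import List

# Files the reproduction steps place on DBFS, relative to the /dbfs mount.
EXPECTED = [
    "spark-dotnet/db-init.sh",
    "spark-dotnet/install-worker.sh",
    "spark-dotnet/Microsoft.Spark.Worker.netcoreapp3.1.linux-x64-1.0.0.tar.gz",
    "app/dependencies/Allos.Spark.dll",
]


def missing_uploads(dbfs_root: str = "/dbfs") -> List[str]:
    """Return the expected files (relative paths) that are not present."""
    root = Path(dbfs_root)
    return [rel for rel in EXPECTED if not (root / rel).is_file()]
```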
