+(make sure you choose *username*/pytorch instead of pytorch/pytorch)

+

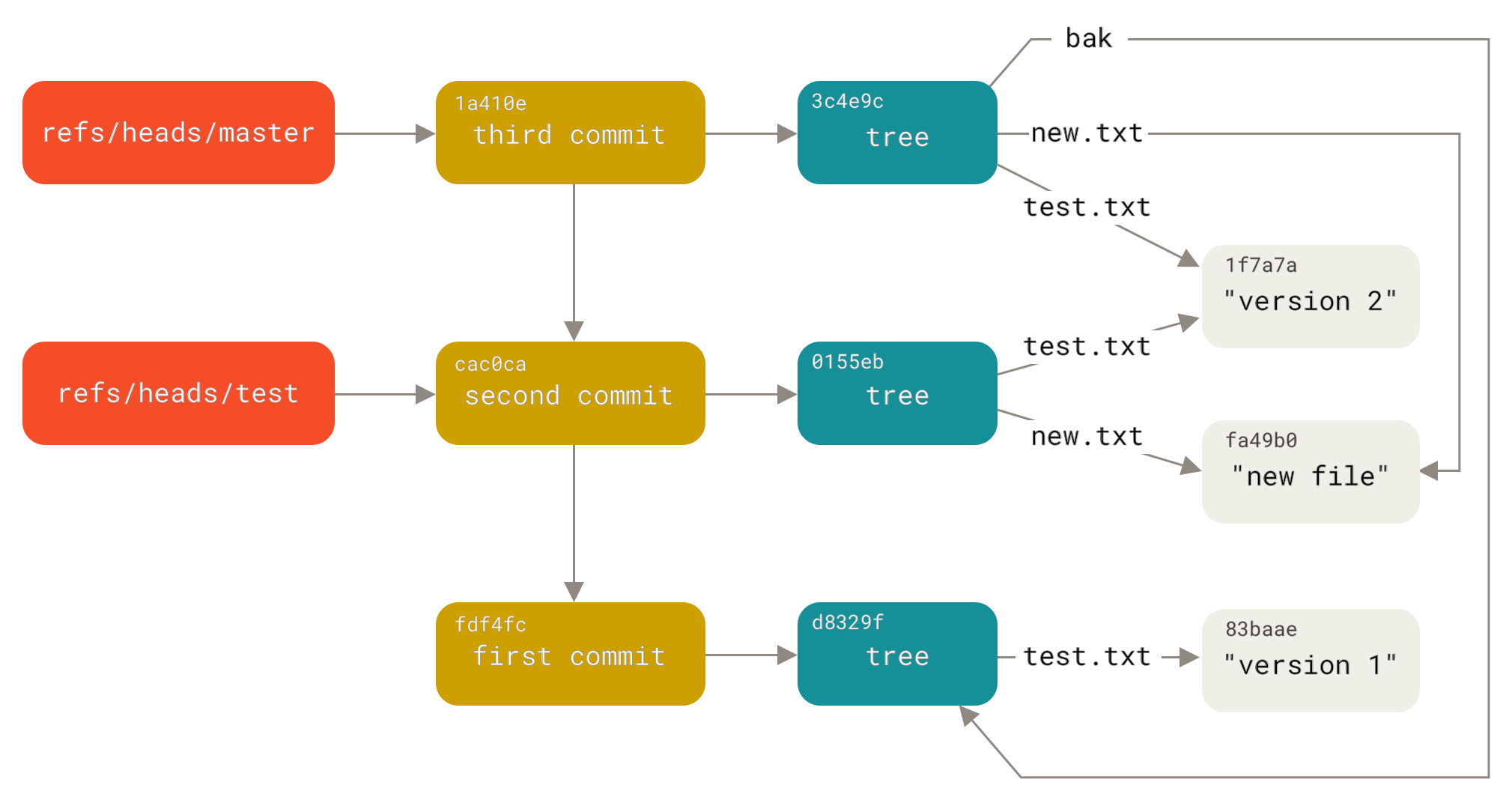

+

+1. Create a new branch called `pull-request` from `main` branch.

+2. In the `torch/nn/functional.py` file, navigate to the `l1_loss` function (line 3308) and add code to check if the reduction mode is `sum` and raise an exception:

+3. Commit the changes to the `pull-request` branch. Make sure you add a meaningful commit message.

+4. Push the `pull-request` branch to the remote repository.

+5. Create a pull request to merge the `pull-request` branch into the `main` branch.

+6. Approve the pull request.

+7. Merge the `pull-request` branch into the `main` branch.

+

+

+

+## Useful commands

+

+- `git checkout -b

-All [assignments](https://github.com/mlip-cmu/s2023/tree/main/assignments) available on GitHub now

+Most [assignments](https://github.com/mlip-cmu/s2024/tree/main/assignments) available on GitHub now

Series of 4 small to medium-sized **individual assignments**:

* Engage with practical challenges

@@ -541,38 +535,54 @@ Design your own research project and write a report

Very open ended: Align with own research interests and existing projects

-See the [project description](https://github.com/mlip-cmu/s2023/blob/main/assignments/research_project.md) and talk to us

+See the [project requirements](https://github.com/mlip-cmu/s2024/blob/main/assignments/research_project.md) and talk to us

First hard milestone: initial description due Feb 27

+

+.element: class="plain" style="width:100%"

+-->

----

-## Recitations

+## Labs

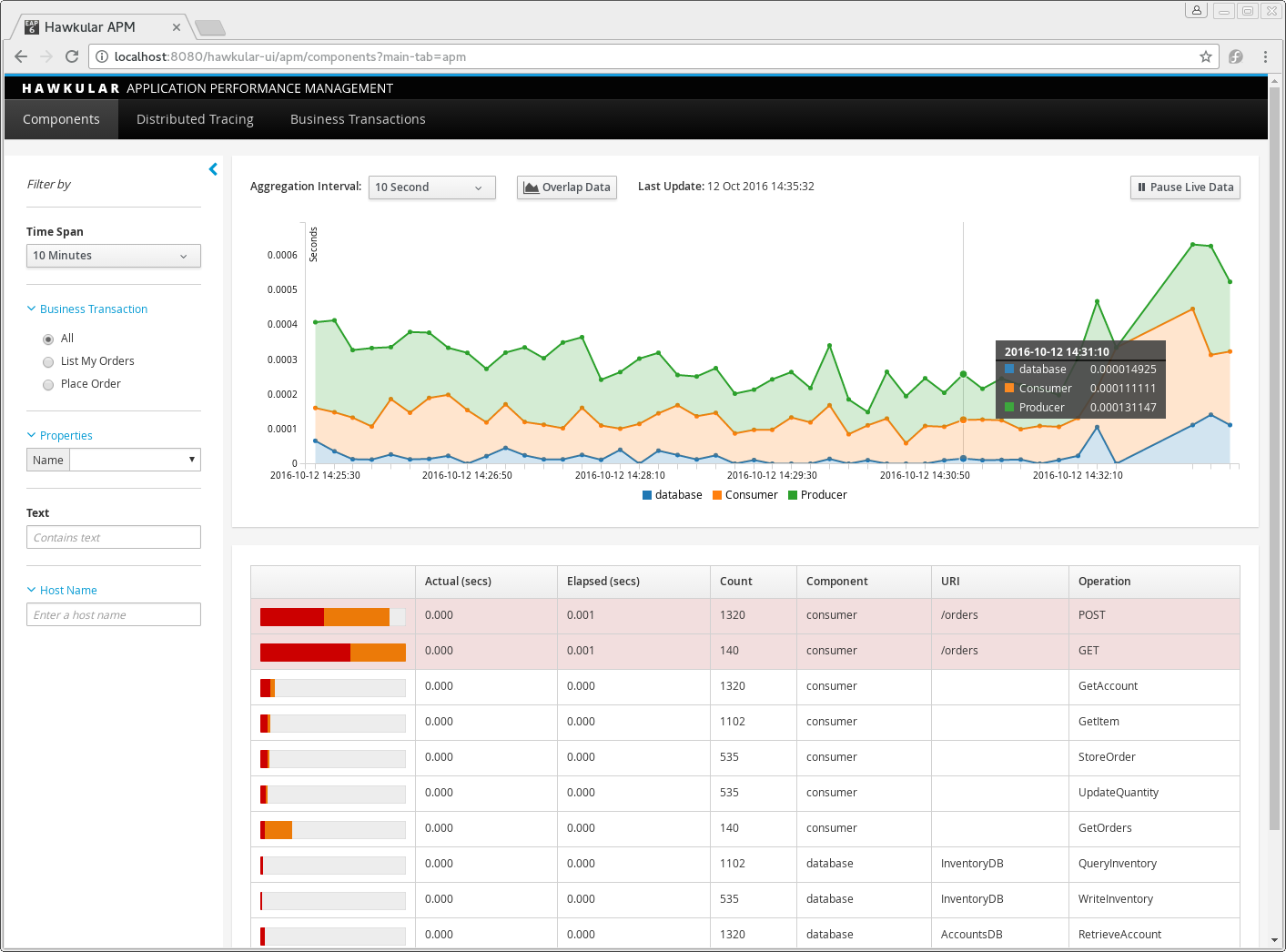

Introducing various tools, e.g., FastAPI (serving), Kafka (stream processing), Jenkins (continuous integration), MLflow (experiment tracking), Docker & Kubernetes (containers), Prometheus & Grafana (monitoring), SHAP (explainability)...

Hands-on exercises, bring a laptop

-Often introducing tools relevant for assignments

+Often introducing tools useful for assignments

+

+about 1h of work, graded pass/fail, low stakes, show work to TA

-First recitation on **this Friday**: Calling, securing, and creating APIs

+First lab on **this Friday**: Calling, securing, and creating APIs

+

+----

+## Lab grading and collaboration

+

+We recommend starting the lab before the recitation, but it can be completed during the recitation

+

+Graded pass/fail by TA on the spot, can retry

+

+*Relaxed collaboration policy:* Can work with others before and during recitation, but have to present/explain solution to TA individually

+

+(Think of recitations as mandatory office hours)

----

## Grading

-* 40% individual assignment

+* 35% individual assignment

* 30% group project with final presentation

* 10% midterm

* 10% participation

* 10% reading quizzes

+* 5% labs

* No final exam (final presentations will take place in that timeslot)

Expected grade cutoffs in syllabus (>82% B, >94% A-, >96% A, >99% A+)

@@ -600,14 +610,14 @@ Opportunities to resubmit work until last day of class

\ No newline at end of file

+

diff --git a/lectures/07_modeltesting/capabilities1.png b/lectures/07_modeltesting/capabilities1.png

deleted file mode 100644

index 0fd08926..00000000

Binary files a/lectures/07_modeltesting/capabilities1.png and /dev/null differ

diff --git a/lectures/07_modeltesting/capabilities2.png b/lectures/07_modeltesting/capabilities2.png

deleted file mode 100644

index 5d8ae5e4..00000000

Binary files a/lectures/07_modeltesting/capabilities2.png and /dev/null differ

diff --git a/lectures/07_modeltesting/checklist.jpg b/lectures/07_modeltesting/checklist.jpg

deleted file mode 100644

index 64d7b725..00000000

Binary files a/lectures/07_modeltesting/checklist.jpg and /dev/null differ

diff --git a/lectures/07_modeltesting/ci.png b/lectures/07_modeltesting/ci.png

deleted file mode 100644

index e686e50f..00000000

Binary files a/lectures/07_modeltesting/ci.png and /dev/null differ

diff --git a/lectures/07_modeltesting/coverage.png b/lectures/07_modeltesting/coverage.png

deleted file mode 100644

index 35f64927..00000000

Binary files a/lectures/07_modeltesting/coverage.png and /dev/null differ

diff --git a/lectures/07_modeltesting/easeml.png b/lectures/07_modeltesting/easeml.png

deleted file mode 100644

index 19bc1a62..00000000

Binary files a/lectures/07_modeltesting/easeml.png and /dev/null differ

diff --git a/lectures/07_modeltesting/googlehome.jpg b/lectures/07_modeltesting/googlehome.jpg

deleted file mode 100644

index 9c7660d2..00000000

Binary files a/lectures/07_modeltesting/googlehome.jpg and /dev/null differ

diff --git a/lectures/07_modeltesting/imgcaptioning.png b/lectures/07_modeltesting/imgcaptioning.png

deleted file mode 100644

index 9de8d250..00000000

Binary files a/lectures/07_modeltesting/imgcaptioning.png and /dev/null differ

diff --git a/lectures/07_modeltesting/inputpartitioning.png b/lectures/07_modeltesting/inputpartitioning.png

deleted file mode 100644

index e10dfcb8..00000000

Binary files a/lectures/07_modeltesting/inputpartitioning.png and /dev/null differ

diff --git a/lectures/07_modeltesting/inputpartitioning2.png b/lectures/07_modeltesting/inputpartitioning2.png

deleted file mode 100644

index b2a8f1ea..00000000

Binary files a/lectures/07_modeltesting/inputpartitioning2.png and /dev/null differ

diff --git a/lectures/07_modeltesting/mlflow-web-ui.png b/lectures/07_modeltesting/mlflow-web-ui.png

deleted file mode 100644

index 82e3e39a..00000000

Binary files a/lectures/07_modeltesting/mlflow-web-ui.png and /dev/null differ

diff --git a/lectures/07_modeltesting/mlvalidation.png b/lectures/07_modeltesting/mlvalidation.png

deleted file mode 100644

index e536d91f..00000000

Binary files a/lectures/07_modeltesting/mlvalidation.png and /dev/null differ

diff --git a/lectures/07_modeltesting/modelquality2.md b/lectures/07_modeltesting/modelquality2.md

deleted file mode 100644

index 0e59d2d0..00000000

--- a/lectures/07_modeltesting/modelquality2.md

+++ /dev/null

@@ -1,1339 +0,0 @@

----

-author: Christian Kaestner and Eunsuk Kang

-title: "MLiP: Model Testing beyond Accuracy"

-semester: Spring 2023

-footer: "Machine Learning in Production/AI Engineering • Christian Kaestner & Eunsuk Kang, Carnegie Mellon University • Spring 2023"

-license: Creative Commons Attribution 4.0

----

-

-

-

-## Machine Learning in Production

-

-

-# Model Testing beyond Accuracy

-

-

-7 individual tokens per student:

+8 individual tokens per student:

- Submit individual assignment 1 day late for 1 token (after running out of tokens 15% penalty per late day)

- Redo individual assignment for 3 tokens

- Resubmit or submit reading quiz late for 1 token

+- Redo or complete a lab late for 1 token (show in office hours)

- Remaining tokens count toward participation

-- 1 bonus token for attending >66% of recitations

-7 team tokens per team:

+8 team tokens per team:

- Submit milestone 1 day late for 1 token (no late submissions accepted when out of tokens)

- Redo milestone for 3 tokens

@@ -617,9 +627,9 @@ Opportunities to resubmit work until last day of class

## How to use tokens

* No need to tell us if you plan to submit very late. We will assign 0 and you can resubmit

-* Instructions and form for resubmission on Canvas

+* Instructions and Google form for resubmission on Canvas

* We will automatically use remaining tokens toward participation and quizzes at the end

-* Remaining individual tokens reflected on Canvas, for remaining team tokens ask your TA.

+* Remaining individual tokens reflected on Canvas, for remaining team tokens ask your team mentor.

@@ -629,9 +639,9 @@ Opportunities to resubmit work until last day of class

Instructor-assigned teams

-Teams stay together for project throughout semester, starting Feb 6

+Teams stay together for project throughout semester, starting Feb 5

-Fill out Catme Team survey before Feb 6 (3pt)

+Fill out Catme Team survey before Feb 5 (3pt)

Some advice in lectures; we'll help with debugging team issues

@@ -651,6 +661,8 @@ In a nutshell: do not copy from other students, do not lie, do not share or publ

In group work, be honest about contributions of team members, do not cover for others

+Collaboration okay on labs, but not quizzes, individual assignments, or exams

+

If you feel overwhelmed or stressed, please come and talk to us (see syllabus for other support opportunities)

----

@@ -659,7 +671,7 @@ If you feel overwhelmed or stressed, please come and talk to us (see syllabus fo

-GPT3, ChatGPT, ...? Reading quizzes, homework submissions, ...?

+GPT-4, ChatGPT, Copilot...? Reading quizzes, homework submissions, ...?

----

@@ -669,9 +681,9 @@ This is a course on responsible building of ML products. This includes questions

Feel free to use them and explore whether they are useful. Welcome to share insights/feedback.

-Warning: They are *[bullshit generators](https://aisnakeoil.substack.com/p/chatgpt-is-a-bullshit-generator-but)*! Requires understanding to check answers. We test them ourselves and they often generate bad/wrong answers for reading quizzes.

+Warning: Be aware of hallucinations. Requires understanding to check answers. We test them ourselves and they often generate bad/wrong answers for reading quizzes.

-**You are still responsible for the correctness of what you submit!**

+**You are responsible for the correctness of what you submit!**

diff --git a/lectures/10_qainproduction/bookingcom2.png b/lectures/02_systems/bookingcom2.png

similarity index 100%

rename from lectures/10_qainproduction/bookingcom2.png

rename to lectures/02_systems/bookingcom2.png

diff --git a/lectures/02_systems/systems.md b/lectures/02_systems/systems.md

index 7b4fe8b9..3d359f28 100644

--- a/lectures/02_systems/systems.md

+++ b/lectures/02_systems/systems.md

@@ -1,8 +1,8 @@

---

author: Christian Kaestner

title: "MLiP: From Models to Systems"

-semester: Spring 2023

-footer: "Machine Learning in Production/AI Engineering • Christian Kaestner & Eunsuk Kang, Carnegie Mellon University • Spring 2023"

+semester: Spring 2024

+footer: "Machine Learning in Production/AI Engineering • Claire Le Goues & Christian Kaestner, Carnegie Mellon University • Spring 2024"

license: Creative Commons Attribution 4.0 International (CC BY 4.0)

---

@@ -19,6 +19,16 @@ license: Creative Commons Attribution 4.0 International (CC BY 4.0)

---

+# Administrativa

+

+* Still waiting for registrar to add another section

+* Follow up on syllabus discussion:

+ * When not feeling well -- please stay home and get well, and email us for accommodation

+ * When using generative AI to generate responses (or email/slack messages) -- please ask it to be brief and to the point!

+

+

+----

+

# Learning goals

* Understand how ML components are a (small or large) part of a larger system

@@ -378,6 +388,13 @@ Passi, S., & Sengers, P. (2020). [Making data science systems work](https://jour

+----

+## Model vs System Goal?

+

+

+

+

+

----

## More Accurate Predictions may not be THAT Important

@@ -427,7 +444,7 @@ Wagstaff, Kiri. "Machine learning that matters." In Proceedings of the 29 th Int

* **MLOps** ~ technical infrastructure automating ML pipelines

* sometimes **ML Systems Engineering** -- but often this refers to building distributed and scalable ML and data storage platforms

* "AIOps" ~ using AI to make automated decisions in operations; "DataOps" ~ use of agile methods and automation in business data analytics

-* My preference: **Production Systems with Machine-Learning Components**

+* My preference: **Software Products with Machine-Learning Components**

@@ -466,7 +483,7 @@ Start understanding the **requirements** of the system and its components

* **Organizational objectives:** Innate/overall goals of the organization

-* **System goals:** Goals of the software system/feature to be built

+* **System goals:** Goals of the software system/product/feature to be built

* **User outcomes:** How well the system is serving its users, from the user's perspective

* **Model properties:** Quality of the model used in a system, from the model's perspective

*

@@ -622,8 +639,26 @@ As a group post answer to `#lecture` tagging all group members using template:

> User goals: ...

> Model goals: ...

+----

+## Academic Integrity Issue

+

+* Please do not cover for people not participating in discussion

+* Easy to detect discrepancy between # answers and # people in classroom

+* Please let's not have to have unpleasant meetings.

+----

+## Breakout: Automating Admission Decisions

+

+What are different types of goals behind automating admissions decisions to a Master's program?

+

+As a group post answer to `#lecture` tagging all group members using template:

+> Organizational goals: ...

+> Leading indicators: ...

+> System goals: ...

+> User goals: ...

+> Model goals: ...

+

diff --git a/lectures/03_requirements/requirements.md b/lectures/03_requirements/requirements.md

index 0fbfc960..d8e4d3b0 100644

--- a/lectures/03_requirements/requirements.md

+++ b/lectures/03_requirements/requirements.md

@@ -1,8 +1,8 @@

---

-author: Christian Kaestner & Eunsuk Kang

+author: Claire Le Goues & Christian Kaestner

title: "MLiP: Gathering Requirements"

-semester: Spring 2023

-footer: "Machine Learning in Production/AI Engineering • Eunsuk Kang & Christian Kaestner, Carnegie Mellon University • Spring 2023"

+semester: Spring 2024

+footer: "Machine Learning in Production/AI Engineering • Claire Le Goues & Christian Kaestner, Carnegie Mellon University • Spring 2024"

license: Creative Commons Attribution 4.0 International (CC BY 4.0)

---

@@ -34,10 +34,10 @@ failures

----

## Readings

-Required reading: 🗎 Jackson, Michael. "[The world and the machine](https://web.archive.org/web/20170519054102id_/http://mcs.open.ac.uk:80/mj665/icse17kn.pdf)." In Proceedings of the International Conference on Software Engineering. IEEE, 1995.

+Required reading: Jackson, Michael. "[The world and the machine](https://web.archive.org/web/20170519054102id_/http://mcs.open.ac.uk:80/mj665/icse17kn.pdf)." In Proceedings of the International Conference on Software Engineering. IEEE, 1995.

-Going deeper: 🕮 Van Lamsweerde, Axel. [Requirements engineering: From system goals to UML models to software](https://bookshop.org/books/requirements-engineering-from-system-goals-to-uml-models-to-software-specifications/9780470012703). John Wiley & Sons, 2009.

+Going deeper: Van Lamsweerde, Axel. [Requirements engineering: From system goals to UML models to software](https://bookshop.org/books/requirements-engineering-from-system-goals-to-uml-models-to-software-specifications/9780470012703). John Wiley & Sons, 2009.

---

# Failures in ML-Based Systems

@@ -571,7 +571,7 @@ Slate, 01/2022

-See 🗎 Jackson, Michael. "[The world and the machine](https://web.archive.org/web/20170519054102id_/http://mcs.open.ac.uk:80/mj665/icse17kn.pdf)." In Proceedings of the International Conference on Software Engineering. IEEE, 1995.

+See Jackson, Michael. "[The world and the machine](https://web.archive.org/web/20170519054102id_/http://mcs.open.ac.uk:80/mj665/icse17kn.pdf)." In Proceedings of the International Conference on Software Engineering. IEEE, 1995.

----

## Understanding requirements is hard

@@ -589,7 +589,7 @@ See 🗎 Jackson, Michael. "[The world and the machine](https://web.archive.org/

-See also 🗎 Jackson, Michael. "[The world and the machine](https://web.archive.org/web/20170519054102id_/http://mcs.open.ac.uk:80/mj665/icse17kn.pdf)." In Proceedings of the International Conference on Software Engineering. IEEE, 1995.

+See also Jackson, Michael. "[The world and the machine](https://web.archive.org/web/20170519054102id_/http://mcs.open.ac.uk:80/mj665/icse17kn.pdf)." In Proceedings of the International Conference on Software Engineering. IEEE, 1995.

----

## Start with Stakeholders...

@@ -779,12 +779,12 @@ Identify stakeholders, interview them, resolve conflicts

-----

-## Correlation vs Causation

-

-

-

-

-

-

-

-

-https://www.tylervigen.com/spurious-correlations

-

----

@@ -647,67 +633,6 @@ https://www.tylervigen.com/spurious-correlations

-----

-## Risks of Metrics as Incentives

-

-Metrics-driven incentives can:

- * Extinguish intrinsic motivation

- * Diminish performance

- * Encourage cheating, shortcuts, and unethical behavior

- * Become addictive

- * Foster short-term thinking

-

-Often, different stakeholders have different incentives

-

-**Make sure data scientists and software engineers share goals and success measures**

-

-----

-## Example: University Rankings

-

-

-

-

-

-

-

-* Originally: Opinion-based polls, but complaints by schools on subjectivity

-* Data-driven model: Rank colleges in terms of "educational excellence"

-* Input: SAT scores, student-teacher ratios, acceptance rates,

-retention rates, campus facilities, alumni donations, etc.,

-

-

-----

-## Example: University Rankings

-

-

-

-

-

-

-

-* Can the ranking-based metric be misused or cause unintended side effects?

-

-

-

-

-

-

-For more, see Weapons of Math Destruction by Cathy O'Neil

-

-

-Notes:

-

-* Example 1

- * Schools optimize metrics for higher ranking (add new classrooms, nicer

- facilities)

- * Tuition increases, but is not part of the model!

- * Higher ranked schools become more expensive

- * Advantage to students from wealthy families

-* Example 2

- * A university founded in early 2010's

- * Math department ranked by US News as top 10 worldwide

- * Top international faculty paid \$\$ as a visitor; asked to add affiliation

- * Increase in publication citations => skyrocket ranking!

@@ -1178,7 +1103,8 @@ Note: The curve is the real trend, red points are training data, green points ar

Example: Kaggle competition on detecting distracted drivers

-

+

+

Relation of datapoints may not be in the data (e.g., driver)

diff --git a/lectures/06_teamwork/teams.md b/lectures/06_teamwork/teams.md

index afc45bb7..46bfbee3 100644

--- a/lectures/06_teamwork/teams.md

+++ b/lectures/06_teamwork/teams.md

@@ -1,8 +1,8 @@

---

-author: Christian Kaestner and Eunsuk Kang

+author: Christian Kaestner and Claire Le Goues

title: "MLiP: Working with Interdisciplinary (Student) Teams"

-semester: Spring 2023

-footer: "Machine Learning in Production/AI Engineering • Christian Kaestner & Eunsuk Kang, Carnegie Mellon University • Spring 2023"

+semester: Spring 2024

+footer: "Machine Learning in Production/AI Engineering • Christian Kaestner & Claire Le Goues, Carnegie Mellon University • Spring 2024"

license: Creative Commons Attribution 4.0 International (CC BY 4.0)

---

@@ -31,7 +31,7 @@ license: Creative Commons Attribution 4.0 International (CC BY 4.0)

* Say hi, introduce yourself: Name? SE or ML background? Favorite movie? Fun fact?

* Find time for first team meeting in next few days

* Agree on primary communication until team meeting

-* Pick a movie-related team name, post team name and tag all group members on slack in `#social`

+* Pick a movie-related team name (use a language model if needed), post team name and tag all group members on slack in `#social`

---

## Teamwork is crosscutting...

@@ -390,7 +390,7 @@ Based on research and years of own experience

----

-## Breakout: Navigating Team Issues

+## Breakout: Premortem

Pick one or two of the scenarios (or another one team member faced in the past) and openly discuss proactive/reactive solutions

@@ -580,4 +580,4 @@ Adjusting grades based on survey and communication with course staff

* Classic work on team dysfunctions: Lencioni, Patrick. “The five dysfunctions of a team: A Leadership Fable.” Jossey-Bass (2002).

* Oakley, Barbara, Richard M. Felder, Rebecca Brent, and Imad Elhajj. "[Turning student groups into effective teams](https://norcalbiostat.github.io/MATH456/notes/Effective-Teams.pdf)." Journal of student centered learning 2, no. 1 (2004): 9-34.

-> Model goals: ...

diff --git a/lectures/03_requirements/requirements.md b/lectures/03_requirements/requirements.md

--- a/lectures/03_requirements/requirements.md

+++ b/lectures/03_requirements/requirements.md

@@ -779,12 +779,12 @@ Identify stakeholders, interview them, resolve conflicts

-* 🕮 Van Lamsweerde, Axel. Requirements engineering: From system goals to UML models to software. John Wiley & Sons, 2009.

-* 🗎 Vogelsang, Andreas, and Markus Borg. "Requirements Engineering for Machine Learning: Perspectives from Data Scientists." In Proc. of the 6th International Workshop on Artificial Intelligence for Requirements Engineering (AIRE), 2019.

-* 🗎 Rahimi, Mona, Jin LC Guo, Sahar Kokaly, and Marsha Chechik. "Toward Requirements Specification for Machine-Learned Components." In 2019 IEEE 27th International Requirements Engineering Conference Workshops (REW), pp. 241-244. IEEE, 2019.

-* 🗎 Kulynych, Bogdan, Rebekah Overdorf, Carmela Troncoso, and Seda Gürses. "POTs: protective optimization technologies." In Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency, pp. 177-188. 2020.

-* 🗎 Wiens, Jenna, Suchi Saria, Mark Sendak, Marzyeh Ghassemi, Vincent X. Liu, Finale Doshi-Velez, Kenneth Jung et al. "Do no harm: a roadmap for responsible machine learning for health care." Nature medicine 25, no. 9 (2019): 1337-1340.

-* 🗎 Bietti, Elettra. "From ethics washing to ethics bashing: a view on tech ethics from within moral philosophy." In Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency, pp. 210-219. 2020.

-* 🗎 Guizani, Mariam, Lara Letaw, Margaret Burnett, and Anita Sarma. "Gender inclusivity as a quality requirement: Practices and pitfalls." IEEE Software 37, no. 6 (2020).

+* Van Lamsweerde, Axel. Requirements engineering: From system goals to UML models to software. John Wiley & Sons, 2009.

+* Vogelsang, Andreas, and Markus Borg. "Requirements Engineering for Machine Learning: Perspectives from Data Scientists." In Proc. of the 6th International Workshop on Artificial Intelligence for Requirements Engineering (AIRE), 2019.

+* Rahimi, Mona, Jin LC Guo, Sahar Kokaly, and Marsha Chechik. "Toward Requirements Specification for Machine-Learned Components." In 2019 IEEE 27th International Requirements Engineering Conference Workshops (REW), pp. 241-244. IEEE, 2019.

+* Kulynych, Bogdan, Rebekah Overdorf, Carmela Troncoso, and Seda Gürses. "POTs: protective optimization technologies." In Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency, pp. 177-188. 2020.

+* Wiens, Jenna, Suchi Saria, Mark Sendak, Marzyeh Ghassemi, Vincent X. Liu, Finale Doshi-Velez, Kenneth Jung et al. "Do no harm: a roadmap for responsible machine learning for health care." Nature medicine 25, no. 9 (2019): 1337-1340.

+* Bietti, Elettra. "From ethics washing to ethics bashing: a view on tech ethics from within moral philosophy." In Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency, pp. 210-219. 2020.

+* Guizani, Mariam, Lara Letaw, Margaret Burnett, and Anita Sarma. "Gender inclusivity as a quality requirement: Practices and pitfalls." IEEE Software 37, no. 6 (2020).

diff --git a/lectures/04_mistakes/mistakes.md b/lectures/04_mistakes/mistakes.md

index 42ebf6b4..3c6be2e5 100644

--- a/lectures/04_mistakes/mistakes.md

+++ b/lectures/04_mistakes/mistakes.md

@@ -1,8 +1,8 @@

---

-author: Eunsuk Kang and Christian Kaestner

+author: Claire Le Goues & Christian Kaestner

title: "MLiP: Planning for Mistakes"

-semester: Spring 2023

-footer: "Machine Learning in Production/AI Engineering • Eunsuk Kang & Christian Kaestner, Carnegie Mellon University • Spring 2023"

+semester: Spring 2024

+footer: "Machine Learning in Production/AI Engineering • Claire Le Goues & Christian Kaestner, Carnegie Mellon University • Spring 2024"

license: Creative Commons Attribution 4.0 International (CC BY 4.0)

---

@@ -12,6 +12,139 @@ license: Creative Commons Attribution 4.0 International (CC BY 4.0)

# Planning for Mistakes

+---

+# From last time...

+----

+## Requirements elicitation techniques (1)

+

+* Background study: understand organization, read documents, try to use old system

+* Interview different stakeholders

+ * Ask open-ended questions about problems, needs, possible solutions, preferences, concerns...

+ * Support with visuals, prototypes, ask about tradeoffs

+ * Use checklists to consider qualities (usability, privacy, latency, ...)

+

+

+**What would you ask in lane keeping software? In fall detection software? In college admissions software?**

+

+----

+## ML Prototyping: Wizard of Oz

+

+

+

+Note: In a Wizard of Oz experiment, a human fills in for the ML model that is to be developed. For example, a human might write the replies of a chatbot.

+

+----

+## Requirements elicitation techniques (2)

+

+* Surveys, group sessions, workshops: Engage with multiple stakeholders, explore conflicts

+* Ethnographic studies: embed with users, passively observe or actively become part

+* Requirements taxonomies and checklists: Reusing domain knowledge

+* Personas: Shift perspective to explore needs of stakeholders not interviewed

+

+----

+## Negotiating Requirements

+

+Many requirements are conflicting/contradictory

+

+Different stakeholders want different things, have different priorities, preferences, and concerns

+

+Formal requirements and design methods such as [card sorting](https://en.wikipedia.org/wiki/Card_sorting), [affinity diagramming](https://en.wikipedia.org/wiki/Affinity_diagram), [importance-difficulty matrices](https://spin.atomicobject.com/2018/03/06/design-thinking-difficulty-importance-matrix/)

+

+Generally: sort through requirements, identify alternatives and conflicts, resolve with priorities and decisions -> single option, compromise, or configuration

+

+

+

+----

+## Stakeholder Conflict Examples

+

+*User wishes vs developer preferences:* free updates vs low complexity

+

+*Customer wishes vs affected third parties:* privacy preferences vs disclosure

+

+*Product owner priorities vs regulators:* maximizing revenue vs privacy protections

+

+**Conflicts in lane keeping software? In fall detection software? In college admissions software?**

+

+

+**Who makes the decisions?**

+

+----

+## Requirements documentation

+

+

+

+

+

+----

+## Requirements documentation

+

+Write down requirements

+* what the software *shall* do, what it *shall* not do, what qualities it *shall* have,

+* document decisions and rationale for conflict resolution

+

+Requirements as input to design and quality assurance

+

+Formal requirements documents are often seen as bureaucratic; lightweight options in notes, wikis, and issues are common

+

+Systems with higher risk -> consider more formal documentation

+

+----

+## Requirements evaluation (validation!)

+

+

+

+

+

+----

+## Requirements evaluation

+

+Manual inspection (like code review)

+

+Show requirements to stakeholders, ask for misunderstandings, gaps

+

+Show prototype to stakeholders

+

+Checklists to cover important qualities

+

+

+Critically inspect assumptions for completeness and realism

+

+Look for unrealistic ML-related assumptions (no false positives, unbiased representative data)

+

+

+----

+## How much requirements eng. and when?

+

+

+

+----

+## How much requirements eng. and when?

+

+Requirements important in risky systems

+

+Requirements as basis of a contract (outsourcing, assigning blame)

+

+Rarely ever fully complete upfront and stable; anticipate change

+* Stakeholders see problems in prototypes, change their minds

+* Especially ML requires lots of exploration to establish feasibility

+

+Low-risk problems often use lightweight, agile approaches

+

+(We'll return to this later)

+

+----

+# Summary

+

+Requirements state the needs of the stakeholders and are expressed

+ over the phenomena in the world

+

+Software/ML models have limited influence over the world

+

+Environmental assumptions play just as important a role in

+establishing requirements

+

+Identify stakeholders, interview them, resolve conflicts

+

---

## Exploring Requirements...

@@ -32,7 +165,7 @@ license: Creative Commons Attribution 4.0 International (CC BY 4.0)

-Required reading: 🕮 Hulten, Geoff. "Building Intelligent Systems: A

+Required reading: Hulten, Geoff. "Building Intelligent Systems: A

Guide to Machine Learning Engineering." (2018), Chapters 6–7 (Why

creating IE is hard, balancing IE) and 24 (Dealing with mistakes)

@@ -58,12 +191,6 @@ creating IE is hard, balancing IE) and 24 (Dealing with mistakes)

-----

-## Common excuse: Just software mistake

-

-

-

-

----

## Common excuse: The problem is just data

@@ -286,8 +413,7 @@ Notes: Cancer prediction, sentencing + recidivism, Tesla autopilot, military "ki

-* Fall detection smartwatch

-* Safe browsing

+* Fall detection smartwatch?

----

## Human in the Loop - Examples?

@@ -692,14 +818,15 @@ A number of methods:

-* Fault tree: A top-down diagram that displays the relationships

-between a system failure (i.e., requirement violation) and its potential causes.

- * Identify sequences of events that result in a failure

- * Prioritize the contributors leading to the failure

- * Inform decisions about how to (re-)design the system

+* Fault tree: A diagram that displays relationships

+between a system failure (i.e., requirement violation) and potential causes.

+ * Identify event sequences that can result in failure

+ * Prioritize contributors leading to a failure

+ * Inform design decisions

* Investigate an accident & identify the root cause

* Often used for safety & reliability, but can also be used for

other types of requirements (e.g., poor performance, security attacks...)

+* (Observation: they're weirdly named!)

@@ -728,7 +855,7 @@ other types of requirements (e.g., poor performance, security attacks...)

Event: An occurrence of a fault or an undesirable action

* (Intermediate) Event: Explained in terms of other events

- * Basic Event: No further development or breakdown; leaf

+ * Basic Event: No further development or breakdown; leaf (choice!)

Gate: Logical relationship between an event & its immediate subevents

* AND: All of the sub-events must take place

@@ -846,12 +973,25 @@ Solution combines a vision-based system identifying people in the door with pres

* Remove basic events with mitigations

* Increase the size of cut sets with mitigations

-

+* Recall: Guardrails

----

+## Guardrails - Examples

+

+Recall: Thermal fuse in smart toaster

+

+

+

+

++ maximum toasting time + extra heat sensor

+

+----

+

+

+

@@ -875,15 +1015,17 @@ Possible mitigations?

----

## FTA: Caveats

+

In general, building a **complete** tree is impossible

* There are probably some faulty events that you missed

* "Unknown unknowns"

+ * Events can always be decomposed; detail level is a choice.

Domain knowledge is crucial for improving coverage

* Talk to domain experts; augment your tree as you learn more

FTA is still very valuable for risk reduction!

- * Forces you to think about & explicitly document possible failure scenarios

+ * Forces you to think about and document possible failure scenarios

* A good starting basis for designing mitigations
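The fault-tree vocabulary used in these slides (basic events, AND/OR gates, cut sets) can be made concrete with a small sketch. The tree below is a hypothetical one loosely modeled on the door example; the event names and the tuple encoding are assumptions for illustration, not part of the lecture material.

```python
from itertools import product

def minimal(sets):
    """Drop every cut set that is a strict superset of another one."""
    unique = set(sets)
    kept = [s for s in unique if not any(other < s for other in unique)]
    return sorted(kept, key=lambda s: (len(s), sorted(s)))

def cut_sets(node):
    """Minimal cut sets of a fault tree.

    A node is either a basic event (str) or a gate ("AND" | "OR", [children]).
    """
    if isinstance(node, str):
        return [frozenset([node])]
    gate, children = node
    child_sets = [cut_sets(child) for child in children]
    if gate == "OR":
        # Any single child failing is enough.
        combined = [s for sets in child_sets for s in sets]
    elif gate == "AND":
        # All children must fail: union one cut set from each child.
        combined = [frozenset().union(*combo) for combo in product(*child_sets)]
    else:
        raise ValueError(f"unknown gate: {gate}")
    return minimal(combined)

# Hypothetical tree: the door closes on a person if the motor is stuck,
# or if both the vision system and the pressure sensor fail.
tree = ("OR", ["motor_stuck", ("AND", ["vision_fails", "pressure_fails"])])
door_cut_sets = cut_sets(tree)
for cut_set in door_cut_sets:
    print(sorted(cut_set))
```

In this sketch the redundant sensor shows up as a cut set of size two, while the single-event cut set marks a basic event that still needs its own mitigation.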

diff --git a/lectures/04_mistakes/validation.svg b/lectures/04_mistakes/validation.svg

new file mode 100644

index 00000000..ec0ec642

--- /dev/null

+++ b/lectures/04_mistakes/validation.svg

@@ -0,0 +1 @@

+

\ No newline at end of file

diff --git a/lectures/15_process/waterfall.svg b/lectures/04_mistakes/waterfall.svg

similarity index 100%

rename from lectures/15_process/waterfall.svg

rename to lectures/04_mistakes/waterfall.svg

diff --git a/lectures/25_summary/wizard.gif b/lectures/04_mistakes/wizard.gif

similarity index 100%

rename from lectures/25_summary/wizard.gif

rename to lectures/04_mistakes/wizard.gif

diff --git a/lectures/05_modelaccuracy/modelquality1.md b/lectures/05_modelaccuracy/modelquality1.md

index 10c9c6f1..ed8aca78 100644

--- a/lectures/05_modelaccuracy/modelquality1.md

+++ b/lectures/05_modelaccuracy/modelquality1.md

@@ -1,8 +1,8 @@

---

-author: Christian Kaestner and Eunsuk Kang

+author: Christian Kaestner and Claire Le Goues

title: "MLiP: Model Correctness and Accuracy"

-semester: Spring 2023

-footer: "Machine Learning in Production/AI Engineering • Christian Kaestner & Eunsuk Kang, Carnegie Mellon University • Spring 2023"

+semester: Spring 2024

+footer: "Machine Learning in Production/AI Engineering • Christian Kaestner & Claire Le Goues, Carnegie Mellon University • Spring 2024"

license: Creative Commons Attribution 4.0 International (CC BY 4.0)

---

@@ -153,8 +153,6 @@ More on system vs model goals and other model qualities later

**Model:** $\overline{X} \rightarrow Y$

-**Training/validation/test data:** sets of $(\overline{X}, Y)$ pairs indicating desired outcomes for select inputs

-

**Performance:** In machine learning, "performance" typically refers to accuracy:

"this model performs better" = it produces more accurate results

@@ -617,18 +615,6 @@ As a group, post your answer to `#lecture` tagging all group members.

(Slicing, Capabilities, Invariants, Simulation, ...)

-
----
-## More model-level QA...
-
------
-
-# Learning Goals
-
-* Curate validation datasets for assessing model quality, covering subpopulations and capabilities as needed
-* Explain the oracle problem and how it challenges testing of software and models
-* Use invariants to check partial model properties with automated testing
-* Select and deploy automated infrastructure to evaluate and monitor model quality
-
----
-# Model Quality
-
-**First Part:** Measuring Prediction Accuracy
-* the data scientist's perspective
-
-**Second Part:** What is Correctness Anyway?
-* the role and lack of specifications, validation vs verification
-
-**Third Part:** Learning from Software Testing 🠔
-* unit testing, test case curation, invariants, simulation (next lecture)
-
-**Later:** Testing in Production
-* monitoring, A/B testing, canary releases (in 2 weeks)
-
------
-
-[XKCD 1838](https://xkcd.com/1838/), cc-by-nc 2.5 Randall Munroe
-
----
-# Curating Validation Data & Input Slicing
-
------
-## Breakout Discussion
-

-

-Write a few tests for the following program:

-

-```scala

-def nextDate(year: Int, month: Int, day: Int) = ...

-```

-

-A test may look like:

-```java

-assert nextDate(2021, 2, 8) == (2021, 2, 9);

-```

-

-**As a group, discuss how you select tests. Discuss how many tests you need to feel confident.**

-

-Post answer to `#lecture` tagging group members in Slack using template:

-> Selection strategy: ...

-> Test quantity: ...

- -

-

-----

-## Defining Software Testing

-

-* Program *p* with specification *s*

-* Test consists of

- - Controlled environment

- - Test call, test inputs

- - Expected behavior/output (oracle)

-

-```java

-assertEquals(4, add(2, 2));

-assertEquals(??, factorPrime(15485863));

-```

-

-Testing is complete but unsound:

-Cannot guarantee the absence of bugs

-

-

-----

-## How to Create Test Cases?

-

-```scala

-def nextDate(year: Int, month: Int, day: Int) = ...

-```

-

-

-

-

-Note: Can focus on specification (and concepts in the domain, such as

-leap days and month lengths) or can focus on implementation

-

-Will not randomly sample from distribution of all days

-

-----

-## Software Test Case Design

-


-

-**Opportunistic/exploratory testing:** Add some unit tests, without much planning

-

-**Specification-based testing** ("black box"): Derive test cases from specifications

- - Boundary value analysis

- - Equivalence classes

- - Combinatorial testing

- - Random testing

-

-**Structural testing** ("white box"): Derive test cases to cover implementation paths

- - Line coverage, branch coverage

- - Control-flow, data-flow testing, MCDC, ...

-

-Test execution usually automated, but can be manual too; automated generation from specifications or code possible

-

-

-

-----

-## Example: Boundary Value Testing

-

-Analyze the specification, not the implementation!

-

-**Key Insight:** Errors often occur at the boundaries of a variable value

-

-For each variable select (1) minimum, (2) min+1, (3) medium, (4) max-1, and (5) maximum; possibly also invalid values min-1, max+1

-

-Example: `nextDate(2015, 6, 13) = (2015, 6, 14)`

- - **Boundaries?**
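A minimal sketch of how the five-point rule above might be applied to `nextDate`. The Python port `next_date`, the helper `boundary_values`, and the chosen ranges are assumptions for illustration; the expected values are hand-checked against the calendar.

```python
import calendar
from datetime import date, timedelta

def boundary_values(lo, hi):
    """Return min, min+1, a middle value, max-1, and max for an integer range."""
    return [lo, lo + 1, (lo + hi) // 2, hi - 1, hi]

def next_date(year, month, day):
    """Stand-in implementation under test; raises ValueError for invalid dates."""
    following = date(year, month, day) + timedelta(days=1)
    return (following.year, following.month, following.day)

# Boundaries come from the specification: months 1..12, days 1..length of month.
month_values = boundary_values(1, 12)
days_in_feb_2024 = calendar.monthrange(2024, 2)[1]
day_values = boundary_values(1, days_in_feb_2024)

# Tests at the boundaries: end of month, end of year, leap day.
assert next_date(2015, 6, 30) == (2015, 7, 1)
assert next_date(2015, 12, 31) == (2016, 1, 1)
assert next_date(2024, 2, 28) == (2024, 2, 29)
assert next_date(2015, 2, 28) == (2015, 3, 1)
```

Note that the day boundary depends on month and year, so the boundary values for one variable are derived per combination rather than once.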

-

-----

-## Example: Equivalence classes

-

-**Idea:** Typically many values behave similarly, but some groups of values are different

-

-Equivalence classes derived from specifications (e.g., cases, input ranges, error conditions, fault models)

-

-Example `nextDate(2015, 6, 13)`

- - leap years, month with 28/30/31 days, days 1-28, 29, 30, 31

-

-Pick 1 value from each group, combine groups from all variables
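The "pick one value per group, then combine" step could be sketched as follows. The class names and representative values are assumptions chosen for illustration; invalid combinations such as June 31 form the error-condition class.

```python
from datetime import date
from itertools import product

# One representative per equivalence class (hypothetical choices).
YEARS = {"leap year": 2024, "common year": 2015, "century, not leap": 1900}
MONTHS = {"28/29 days": 2, "30 days": 6, "31 days": 7}
DAYS = {"1-28": 13, "29": 29, "30": 30, "31": 31}

def is_valid(year, month, day):
    """True if the combination is a real calendar date."""
    try:
        date(year, month, day)
        return True
    except ValueError:
        return False

# Combine the groups of all variables into test inputs.
cases = list(product(YEARS.values(), MONTHS.values(), DAYS.values()))
invalid_cases = [case for case in cases if not is_valid(*case)]

print(len(cases), "combinations,", len(invalid_cases), "expected to be rejected")
```

Each valid combination still needs an expected output, which is where the oracle has to come from the specification.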

-

-----

-## Exercise

-

-```scala

-/** Compute the price of a bus ride:

- * - Children under 2 ride for free, children under 18 and

- * senior citizen over 65 pay half, all others pay the

- * full fare of $3.

- * - On weekdays, between 7am and 9am and between 4pm and

- * 7pm a peak surcharge of $1.5 is added.

- * - Short trips under 5min during off-peak time are free.*/

-def busTicketPrice(age: Int,

- datetime: LocalDateTime,

- rideTime: Int)

-```

-

-*suggest test cases based on boundary value analysis and equivalence class testing*

-

-

-----

-## Selecting Validation Data for Model Quality?

-

-

-

-

-

-----

-## Validation Data Representative?

-

-* Validation data should reflect usage data

-* Be aware of data drift (face recognition during pandemic, new patterns in credit card fraud detection)

-* "*Out of distribution*" predictions often low quality (it may even be worth detecting out-of-distribution data in production, more later)

-

-*(note, similar to requirements validation: did we hear all/representative stakeholders)*

-

-

-

-

-----

-## Not All Inputs are Equal

-

-

-

-

-"Call mom"

-"What's the weather tomorrow?"

-"Add asafetida to my shopping list"

-

-----

-## Not All Inputs are Equal

-

-> There Is a Racial Divide in Speech-Recognition Systems, Researchers Say:

-> Technology from Amazon, Apple, Google, IBM and Microsoft misidentified 35 percent of words from people who were black. White people fared much better. -- [NYTimes March 2020](https://www.nytimes.com/2020/03/23/technology/speech-recognition-bias-apple-amazon-google.html)

-

-----

-

-

-----

-## Not All Inputs are Equal

-

-> some random mistakes vs rare but biased mistakes?

-

-* A system to detect when somebody is at the door that never works for people under 5ft (1.52m)

-* A spam filter that deletes alerts from banks

-

-

-**Consider separate evaluations for important subpopulations; monitor mistakes in production**

-

-

-

-----

-## Identify Important Inputs

-

-Curate Validation Data for Specific Problems and Subpopulations:

-* *Regression testing:* Validation dataset for important inputs ("call mom") -- expect very high accuracy -- closest equivalent to **unit tests**

-* *Uniformness/fairness testing:* Separate validation dataset for different subpopulations (e.g., accents) -- expect comparable accuracy

-* *Setting goals:* Validation datasets for challenging cases or stretch goals -- accept lower accuracy

-

-Derive from requirements, experts, user feedback, expected problems etc. Think *specification-based testing*.

-

-

-----

-## Important Input Groups for Cancer Prognosis?

-

-

-

-

-----

-## Input Partitioning

-

-* Guide testing by identifying groups and analyzing accuracy of subgroups

- * Often for fairness: gender, country, age groups, ...

- * Possibly based on business requirements or cost of mistakes

-* Slice test data by population criteria, also evaluate interactions

-* Identify problems and plan mitigations, e.g., enhance with more data for subgroup or reduce confidence
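Slicing evaluation data can be sketched in a few lines. The helper `accuracy_by_slice` and the toy data are assumptions for illustration; the point is to report accuracy together with support, since a slice with very few examples gives little confidence.

```python
from collections import defaultdict

def accuracy_by_slice(examples, slice_of):
    """Return {slice: (accuracy, support)} for (features, label, prediction) triples."""
    correct = defaultdict(int)
    support = defaultdict(int)
    for features, label, prediction in examples:
        key = slice_of(features)
        support[key] += 1
        correct[key] += int(label == prediction)
    return {key: (correct[key] / support[key], support[key]) for key in support}

# Hypothetical validation results, sliced by movie age.
examples = [
    ({"decade": "2010s"}, 1, 1),
    ({"decade": "2010s"}, 0, 0),
    ({"decade": "2010s"}, 1, 1),
    ({"decade": "2010s"}, 0, 1),
    ({"decade": "1950s"}, 1, 0),
]
report = accuracy_by_slice(examples, lambda features: features["decade"])
for decade, (accuracy, n) in sorted(report.items()):
    print(f"{decade}: accuracy={accuracy:.2f} support={n}")
```

Passing a different `slice_of` function (for example one that returns a tuple of two features) evaluates interactions between criteria.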

-

-

-

-

-Good reading: Barash, Guy, Eitan Farchi, Ilan Jayaraman, Orna Raz, Rachel Tzoref-Brill, and Marcel Zalmanovici. "Bridging the gap between ML solutions and their business requirements using feature interactions." In Proc. Symposium on the Foundations of Software Engineering, pp. 1048-1058. 2019.

-

-----

-## Input Partitioning Example

-

-

-

-

-

-

-Input divided by movie age. Notice low accuracy, but also low support (i.e., little validation data), for old movies.

-

-

-

-Input divided by genre, rating, and length. Accuracy differs, but also amount of test data used ("support") differs, highlighting low confidence areas.

-

-

-

-

-

-

-

-Source: Barash, Guy, et al. "Bridging the gap between ML solutions and their business requirements using feature interactions." In Proc. FSE, 2019.

-

-----

-## Input Partitioning Discussion

-

-**How to slice evaluation data for cancer prognosis?**

-

-

-

-

-----

-## Example: Model Impr. at Apple (Overton)

-

-

-

-

-

-

-

-Ré, Christopher, Feng Niu, Pallavi Gudipati, and Charles Srisuwananukorn. "[Overton: A Data System for Monitoring and Improving Machine-Learned Products](https://arxiv.org/abs/1909.05372)." arXiv preprint arXiv:1909.05372 (2019).

-

-

-----

-## Example: Model Improvement at Apple (Overton)

-

-* Focus engineers on creating training and validation data, not on model search (AutoML)

-* Flexible infrastructure to slice telemetry data to identify underperforming subpopulations -> focus on creating better training data (better, more labels, in semi-supervised learning setting)

-

-

-

-

-Ré, Christopher, Feng Niu, Pallavi Gudipati, and Charles Srisuwananukorn. "[Overton: A Data System for Monitoring and Improving Machine-Learned Products](https://arxiv.org/abs/1909.05372)." arXiv preprint arXiv:1909.05372 (2019).

-

-

-

-

-

-

-

----

-# Testing Model Capabilities

-

-

-

-

-

-

-

-

-Further reading: Christian Kaestner. [Rediscovering Unit Testing: Testing Capabilities of ML Models](https://towardsdatascience.com/rediscovering-unit-testing-testing-capabilities-of-ml-models-b008c778ca81). Towards Data Science, 2021.

-

-

-

-----

-## Testing Capabilities

-

-

-

-Are there "concepts" or "capabilities" the model should learn?

-

-Example capabilities of sentiment analysis:

-* Handle *negation*

-* Robustness to *typos*

-* Ignore synonyms and abbreviations

-* Person and location names are irrelevant

-* Ignore gender

-* ...

-

-For each capability create specific test set (multiple examples)

-

-

-

-

-

-Ribeiro, Marco Tulio, Tongshuang Wu, Carlos Guestrin, and Sameer Singh. "[Beyond Accuracy: Behavioral Testing of NLP Models with CheckList](https://homes.cs.washington.edu/~wtshuang/static/papers/2020-acl-checklist.pdf)." In Proceedings ACL, p. 4902–4912. (2020).

-

-

-----

-## Testing Capabilities

-

-

-

-

-

-

-From: Ribeiro, Marco Tulio, Tongshuang Wu, Carlos Guestrin, and Sameer Singh. "[Beyond Accuracy: Behavioral Testing of NLP Models with CheckList](https://homes.cs.washington.edu/~wtshuang/static/papers/2020-acl-checklist.pdf)." In Proceedings ACL, p. 4902–4912. (2020).

-

-

-----

-## Testing Capabilities

-

-

-

-

-

-

-From: Ribeiro, Marco Tulio, Tongshuang Wu, Carlos Guestrin, and Sameer Singh. "[Beyond Accuracy: Behavioral Testing of NLP Models with CheckList](https://homes.cs.washington.edu/~wtshuang/static/papers/2020-acl-checklist.pdf)." In Proceedings ACL, p. 4902–4912. (2020).

-

-----

-## Examples of Capabilities

-

-**What could be capabilities of the cancer classifier?**

-

-

-

-----

-## Capabilities vs Specifications vs Slicing

-

-

-

-----

-## Capabilities vs Specifications vs Slicing

-

-Capabilities are partial specifications of expected behavior (not expected to always hold)

-

-Some capabilities correspond to slices of existing test data, for others we may need to create new data

-

-----

-## Recall: Is it fair to expect generalization beyond training distribution?

-

-

-

-

-

-*Shall a cancer detector generalize to other hospitals? Shall image captioning generalize to describing pictures of star formations?*

-

-Note: We wouldn't test a first year elementary school student on high-school math. This would be "out of the training distribution"

-

-----

-## Recall: Shortcut Learning

-

-

-

-

-

-Figure from: Geirhos, Robert, et al. "[Shortcut learning in deep neural networks](https://arxiv.org/abs/2004.07780)." Nature Machine Intelligence 2, no. 11 (2020): 665-673.

-

-----

-## More Shortcut Learning :)

-

-

-

-

-Figure from Beery, Sara, Grant Van Horn, and Pietro Perona. “Recognition in terra incognita.” In Proceedings of the European Conference on Computer Vision (ECCV), pp. 456–473. 2018.

-

-----

-## Generalization beyond Training Distribution?

-

-

-

-* Typically training and validation data from same distribution (i.i.d. assumption!)

-* Many models can achieve similar accuracy

-* Models that learn "right" abstractions possibly indistinguishable from models that use shortcuts

- - see tank detection example

- - Can we guide the model towards "right" abstractions?

-* Some models generalize better to other distributions not used in training

- - e.g., cancer images from other hospitals, from other populations

- - Drift and attacks, ...

-

-

-

-

-

-See discussion in D'Amour, Alexander, et al. "[Underspecification presents challenges for credibility in modern machine learning](https://arxiv.org/abs/2011.03395)." arXiv preprint arXiv:2011.03395 (2020).

-

-----

-## Hypothesis: Testing Capabilities may help with Generalization

-

-* Capabilities are "partial specifications", given beyond training data

-* Encode domain knowledge of the problem

- * Capabilities are inherently domain specific

- * Curate capability-specific test data for a problem

-* Testing for capabilities helps to distinguish models that use intended abstractions

-* May help find models that generalize better

-

-

-

-

-

-See discussion in D'Amour, Alexander, Katherine Heller, Dan Moldovan, Ben Adlam, Babak Alipanahi, Alex Beutel, Christina Chen et al. "[Underspecification presents challenges for credibility in modern machine learning](https://arxiv.org/abs/2011.03395)." arXiv preprint arXiv:2011.03395 (2020).

-

-----

-

-## Strategies for identifying capabilities

-

-* Analyze common mistakes (e.g., classify past mistakes in cancer prognosis)

-* Use existing knowledge about the problem (e.g., linguistics theories)

-* Observe humans (e.g., how do radiologists look for cancer)

-* Derive from requirements (e.g., fairness)

-* Causal discovery from observational data?

-

-

-

-Further reading: Christian Kaestner. [Rediscovering Unit Testing: Testing Capabilities of ML Models](https://towardsdatascience.com/rediscovering-unit-testing-testing-capabilities-of-ml-models-b008c778ca81). Towards Data Science, 2021.

-

-

-

-----

-## Examples of Capabilities

-

-**What could be capabilities of image captioning system?**

-

-

-

-

-

-----

-## Generating Test Data for Capabilities

-

-**Idea 1: Domain-specific generators**

-

-Testing *negation* in sentiment analysis with template:
-`I {NEGATION} {POS_VERB} the {THING}.`
-
-Testing texture vs shape priority with artificial generated images:
-
-Figure from Geirhos, Robert, Patricia Rubisch, Claudio Michaelis, Matthias Bethge, Felix A. Wichmann, and Wieland Brendel. “ImageNet-trained CNNs are biased towards texture; increasing shape bias improves accuracy and robustness.” In Proc. International Conference on Learning Representations (ICLR), (2019).
-
------
-## Generating Test Data for Capabilities
-
-**Idea 2: Mutating existing inputs**
-
-Testing *synonyms* in sentiment analysis by replacing words with synonyms, keeping label
-
-Testing *robust against noise and distraction* add `and false is not true` or random URLs to text
-
-Figure from: Ribeiro, Marco Tulio, Tongshuang Wu, Carlos Guestrin, and Sameer Singh. "[Beyond Accuracy: Behavioral Testing of NLP Models with CheckList](https://homes.cs.washington.edu/~wtshuang/static/papers/2020-acl-checklist.pdf)." In Proceedings ACL, p. 4902–4912. (2020).
-
------
-## Generating Test Data for Capabilities
-
-**Idea 3: Crowd-sourcing test creation**
-
-Testing *sarcasm* in sentiment analysis: Ask humans to minimally change text to flip sentiment with sarcasm
-
-Testing *background* in object detection: Ask humans to take pictures of specific objects with unusual backgrounds
-
-Figure from: Kaushik, Divyansh, Eduard Hovy, and Zachary C. Lipton. “Learning the difference that makes a difference with counterfactually-augmented data.” In Proc. International Conference on Learning Representations (ICLR), (2020).
-
------
-## Generating Test Data for Capabilities
-
-**Idea 4: Slicing test data**
-
-Testing *negation* in sentiment analysis by finding sentences containing 'not'
-
-Ré, Christopher, Feng Niu, Pallavi Gudipati, and Charles Srisuwananukorn. "[Overton: A Data System for Monitoring and Improving Machine-Learned Products](https://arxiv.org/abs/1909.05372)." arXiv preprint arXiv:1909.05372 (2019).
-
------
-## Examples of Capabilities
-
-**How to generate test data for capabilities of the cancer classifier?**
-
------
-## Testing vs Training Capabilities
-
-* Dual insight for testing and training
-* Strategies for curating test data can also help select training data
-* Generate capability-specific training data to guide training (data augmentation)
-
-Further reading on using domain knowledge during training: Von Rueden, Laura, Sebastian Mayer, Jochen Garcke, Christian Bauckhage, and Jannis Schuecker. "Informed machine learning–towards a taxonomy of explicit integration of knowledge into machine learning." Learning 18 (2019): 19-20.
-
------
-## Preliminary Summary: Specification-Based Testing Techniques as Inspiration
-
-* Boundary value analysis
-* Partition testing & equivalence classes
-* Combinatorial testing
-* Decision tables
-
-Use to identify datasets for **subpopulations** and **capabilities**, not individual tests.
-
------
-## On Terminology
-
-* Test data curation is emerging as a very recent concept for testing ML components
-* No consistent terminology
-  - "Testing capabilities" in checklist paper
-  - "Stress testing" in some others (but stress testing has a very different meaning in software testing: robustness to overload)
-* Software engineering concepts translate, but names not adopted in ML community
-  - specification-based testing, black-box testing
-  - equivalence class testing, boundary-value analysis
-
----
-# Automated (Random) Testing and Invariants
-
-(if it wasn't for that darn oracle problem)
-
------
-## Random Test Input Generation is Easy
-
-```java
-@Test
-void testNextDate() {
-    nextDate(488867101, 1448338253, -997372169)
-    nextDate(2105943235, 1952752454, 302127018)
-    nextDate(1710531330, -127789508, 1325394033)
-    nextDate(-1512900479, -439066240, 889256112)
-    nextDate(1853057333, 1794684858, 1709074700)
-    nextDate(-1421091610, 151976321, 1490975862)
-    nextDate(-2002947810, 680830113, -1482415172)
-    nextDate(-1907427993, 1003016151, -2120265967)
-}
-```
-
-**But is it useful?**
-
------
-## Cancer in Random Image?
-
------
-## Randomly Generating "Realistic" Inputs is Possible
-
-```java
-@Test
-void testNextDate() {
-    nextDate(2010, 8, 20)
-    nextDate(2024, 7, 15)
-    nextDate(2011, 10, 27)
-    nextDate(2024, 5, 4)
-    nextDate(2013, 8, 27)
-    nextDate(2010, 2, 30)
-}
-```
-
-**But how do we know whether the computation is correct?**
-
------
-## Automated Model Validation Data Generation?
-
-```java
-@Test
-void testCancerPrediction() {
-    cancerModel.predict(generateRandomImage())
-    cancerModel.predict(generateRandomImage())
-    cancerModel.predict(generateRandomImage())
-}
-```
-
-* **Realistic inputs?**
-* **But how do we get labels?**
-
------
-## The Oracle Problem
-
-*How do we know the expected output of a test?*
-
-```java
-assertEquals(??, factorPrime(15485863));
-```
-
------
-## Test Case Generation & The Oracle Problem
-

-

-* Manually construct input-output pairs (does not scale, cannot automate)

-* Comparison against gold standard (e.g., alternative implementation, executable specification)

-* Checking of global properties only -- crashes, buffer overflows, code injections

-* Manually written assertions -- partial specifications checked at runtime

-

-

-

-

-

-

-----

-## Manually constructing outputs

-

-

-```java

-@Test

-void testNextDate() {

- assert nextDate(2010, 8, 20) == (2010, 8, 21);

- assert nextDate(2024, 7, 15) == (2024, 7, 16);

- assert nextDate(2010, 2, 30) throws InvalidInputException;

-}

-```

-

-```java

-@Test

-void testCancerPrediction() {

- assert cancerModel.predict(loadImage("random1.jpg")) == true;

- assert cancerModel.predict(loadImage("random2.jpg")) == true;

- assert cancerModel.predict(loadImage("random3.jpg")) == false;

-}

-```

-

-*(tedious, labor intensive; possibly crowd sourced)*

-

-----

-## Compare against reference implementation

-

-**assuming we have a correct implementation**

-

-```java

-@Test

-void testNextDate() {

- assert nextDate(2010, 8, 20) == referenceLib.nextDate(2010, 8, 20);

- assert nextDate(2024, 7, 15) == referenceLib.nextDate(2024, 7, 15);

- assert nextDate(2010, 2, 30) == referenceLib.nextDate(2010, 2, 30);

-}

-```

-

-```java

-@Test

-void testCancerPrediction() {

- assert cancerModel.predict(loadImage("random1.jpg")) == ???;

-}

-```

-

-*(usually no reference implementation for ML problems)*

-

-

-----

-## Checking global specifications

-

-**Ensure, no computation crashes**

-

-```java

-@Test

-void testNextDate() {

- nextDate(2010, 8, 20)

- nextDate(2024, 7, 15)

- nextDate(2010, 2, 30)

-}

-```

-

-

-```java

-@Test

-void testCancerPrediction() {

- cancerModel.predict(generateRandomImage())

- cancerModel.predict(generateRandomImage())

- cancerModel.predict(generateRandomImage())

-}

-```

-

-*(we usually do not fear crashing bugs in ML models)*

-

-----

-## Invariants as partial specification

-

-

-```java

-class Stack {

- int size = 0;

- int MAX_SIZE = 100;

- String[] data = new String[MAX_SIZE];

- // class invariant checked before and after every method

- private void check() {

- assert(size>=0 && size<=MAX_SIZE);

- }

- public void push(String v) {

- check();

- if (size < MAX_SIZE)
- data[size++] = v;
- check();
- }
-}
-```

-

-```java

-@Test

-void testCancerPrediction() {

- cancerModel.predict(generateRandomImage())

-}

-```

-

-

-

-* Manually construct input-output pairs (does not scale, cannot automate)

- - **too expensive at scale**

-* Comparison against gold standard (e.g., alternative implementation, executable specification)

- - **no specification, usually no other "correct" model**

- - comparing different techniques useful? (see ensemble learning)

- - semi-supervised learning as approximation?

-* Checking of global properties only -- crashes, buffer overflows, code injections - **??**

-* Manually written assertions -- partial specifications checked at runtime - **??**

-

-

-

-

-

-----

-## Invariants in Machine Learned Models (Metamorphic Testing)

-

-Exploit relationships between inputs

-

-* If two inputs differ only in **X** -> output should be the same

-* If inputs differ in **Y** output should be flipped

-* If inputs differ only in feature **F**, prediction for input with higher F should be higher

-* ...

-

-----

-## Invariants in Machine Learned Models?

-

-

-

-----

-## Some Capabilities are Invariants

-

-**Some capability tests can be expressed as invariants and automatically encoded as transformations to existing test data**

-

-

-* Negation should flip sentiment analysis result

-* Typos should not affect sentiment analysis result

-* Changes to locations or names should not affect sentiment analysis results

-

-

-

-

-

-

-From: Ribeiro, Marco Tulio, Tongshuang Wu, Carlos Guestrin, and Sameer Singh. "[Beyond Accuracy: Behavioral Testing of NLP Models with CheckList](https://homes.cs.washington.edu/~wtshuang/static/papers/2020-acl-checklist.pdf)." In Proceedings ACL, p. 4902–4912. (2020).

-

-

-

-----

-## Examples of Invariants

-

-

-

-

-* Credit rating should not depend on gender:

- - $\forall x. f(x[\text{gender} \leftarrow \text{male}]) = f(x[\text{gender} \leftarrow \text{female}])$

-* Synonyms should not change the sentiment of text:

- - $\forall x. f(x) = f(\texttt{replace}(x, \text{"is not", "isn't"}))$

-* Negation should swap meaning:

- - $\forall x \in \text{"X is Y"}. f(x) = 1-f(\texttt{replace}(x, \text{" is ", " is not "}))$

-* Robustness around training data:

- - $\forall x \in \text{training data}. \forall y \in \text{mutate}(x, \delta). f(x) = f(y)$

-* Low credit scores should never get a loan (sufficient conditions for classification, "anchors"):

- - $\forall x. x.\text{score} < 649 \Rightarrow \neg f(x)$

-

-Identifying invariants requires domain knowledge of the problem!
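Two of the invariants above can be checked mechanically once a model is callable. The toy models, the applicant records, and the helper names below are assumptions for illustration only; a real check would run over realistic or generated inputs.

```python
def gender_invariance_violations(model, applicants):
    """Applicants whose decision changes when only the gender field is swapped."""
    return [
        x for x in applicants
        if model({**x, "gender": "male"}) != model({**x, "gender": "female"})
    ]

def low_score_violations(model, applicants, threshold=649):
    """Applicants below the score threshold who are approved anyway."""
    return [x for x in applicants if x["score"] < threshold and model(x)]

def score_only_model(x):
    # Toy model that ignores gender entirely.
    return x["score"] >= 650

def biased_model(x):
    # Toy model that (wrongly) gives a bonus based on gender.
    bonus = 20 if x["gender"] == "male" else 0
    return x["score"] + bonus >= 650

applicants = [
    {"score": score, "gender": gender}
    for score in (600, 640, 700)
    for gender in ("male", "female")
]

for model in (score_only_model, biased_model):
    print(
        model.__name__,
        len(gender_invariance_violations(model, applicants)),
        len(low_score_violations(model, applicants)),
    )
```

No labels are needed for either check, which is what makes invariants attractive in the face of the oracle problem.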

-

-

-

-----

-## Metamorphic Testing

-

-Formal description of relationships among inputs and outputs (*Metamorphic Relations*)

-

-In general, for a model $f$ and inputs $x$ define two functions to transform inputs and outputs $g\_I$ and $g\_O$ such that:

-

-$\forall x. f(g\_I(x)) = g\_O(f(x))$

-

-

-

-e.g. $g\_I(x)= \texttt{replace}(x, \text{" is ", " is not "})$ and $g\_O(x)=\neg x$
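The relation $f(g\_I(x)) = g\_O(f(x))$ translates directly into a generic checker. The two keyword-based sentiment "models" below are toy stand-ins, assumed purely for illustration; only the structure of the check is the point.

```python
POSITIVE_WORDS = {"good", "great", "tasty"}

def negation_aware_model(text):
    """Toy classifier: positive word present, flipped by 'not'."""
    words = text.lower().split()
    positive = any(word in POSITIVE_WORDS for word in words)
    return positive != ("not" in words)

def naive_model(text):
    """Toy classifier that ignores negation."""
    return any(word in POSITIVE_WORDS for word in text.lower().split())

def g_in(text):
    # Input transformation: negate "X is Y" sentences.
    return text.replace(" is ", " is not ")

def g_out(label):
    # Output transformation: the sentiment should flip.
    return not label

def metamorphic_violations(model, inputs, transform_in, transform_out):
    """Inputs x for which model(g_I(x)) != g_O(model(x))."""
    return [
        x for x in inputs
        if model(transform_in(x)) != transform_out(model(x))
    ]

sentences = ["the food is good", "the service is bad"]
aware_violations = metamorphic_violations(negation_aware_model, sentences, g_in, g_out)
naive_violations = metamorphic_violations(naive_model, sentences, g_in, g_out)
print(aware_violations, naive_violations)
```

The same checker covers plain invariance by passing the identity function as `transform_out`.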

-

-

-

-----

-## On Testing with Invariants/Assertions

-

-* Defining good metamorphic relations requires knowledge of the problem domain

-* Good metamorphic relations focus on parts of the system

-* Invariants usually cover only one aspect of correctness -- maybe capabilities

-* Invariants and near-invariants can be mined automatically from sample data (see *specification mining* and *anchors*)

-

-

-

-Further reading:

-* Segura, Sergio, Gordon Fraser, Ana B. Sanchez, and Antonio Ruiz-Cortés. "[A survey on metamorphic testing](https://core.ac.uk/download/pdf/74235918.pdf)." IEEE Transactions on software engineering 42, no. 9 (2016): 805-824.

-* Ribeiro, Marco Tulio, Sameer Singh, and Carlos Guestrin. "[Anchors: High-precision model-agnostic explanations](https://sameersingh.org/files/papers/anchors-aaai18.pdf)." In Thirty-Second AAAI Conference on Artificial Intelligence. 2018.

-

-

-----

-## Invariant Checking aligns with Requirements Validation

-

-

-

-

-

-

-----

-## Approaches for Checking Invariants

-

-* Generating test data (random, distributions) usually easy

-* Transformations of existing test data

-* Adversarial learning: for many models, gradient-based techniques can search for invariant violations -- roughly analogous to symbolic execution in SE

-* Early work on formally verifying invariants for certain models (e.g., small deep neural networks)

-

-

-

-

-Further readings:

-Singh, Gagandeep, Timon Gehr, Markus Püschel, and Martin Vechev. "[An abstract domain for certifying neural networks](https://dl.acm.org/doi/pdf/10.1145/3290354)." Proceedings of the ACM on Programming Languages 3, no. POPL (2019): 1-30.

-

-

-----

-## Using Invariant Violations

-

-* Are invariants strict?

- * Single violation in random inputs usually not meaningful

- * In capability testing, average accuracy in realistic data needed

- * Maybe strict requirements for fairness or robustness?

-* Do invariant violations matter if the input data is not representative?

-

-

-

-

----

-# Simulation-Based Testing

-

-

-

-

-

-----

-## One More Thing: Simulation-Based Testing

-

-In some cases it is easy to go from outputs to inputs:

-

-```java

-assertEquals(??, factorPrime(15485862));

-```

-

-```java

-randomNumbers = [2, 3, 7, 7, 52673]

-assertEquals(randomNumbers,

- factorPrime(multiply(randomNumbers)));

-```
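The same idea as a runnable sketch: generate the expected output first (a list of primes), derive the input from it by multiplication, and use the generated list as the oracle. `factor_prime` here is a simple trial-division stand-in, and the prime pool and seed are arbitrary assumptions.

```python
import math
import random

def factor_prime(n):
    """Prime factors of n (n >= 2) in ascending order, by trial division."""
    factors = []
    divisor = 2
    while divisor * divisor <= n:
        while n % divisor == 0:
            factors.append(divisor)
            n //= divisor
        divisor += 1
    if n > 1:
        factors.append(n)
    return factors

SMALL_PRIMES = [2, 3, 5, 7, 11, 13]
rng = random.Random(0)  # fixed seed so the generated tests are reproducible

checked = 0
for _ in range(100):
    # Start from the output, then compute the input from it.
    expected = sorted(rng.choices(SMALL_PRIMES, k=rng.randint(1, 6)))
    assert factor_prime(math.prod(expected)) == expected
    checked += 1
print(checked, "generated tests passed")
```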

-

-**Similar idea in machine-learning problems?**

-

-

-

-----

-## One More Thing: Simulation-Based Testing

-

-

-

-

-

-* Derive input-output pairs from simulation, esp. in vision systems

-* Example: Vision for self-driving cars:

- * Render scene -> add noise -> recognize -> compare recognized result with simulator state

-* Quality depends on quality of simulator:

- * examples: render picture/video, synthesize speech, ...

- * Less suitable where input-output relationship unknown, e.g., cancer prognosis, housing price prediction

-

-

-

-

-

-

-

-

-

-

-Further readings: Zhang, Mengshi, Yuqun Zhang, Lingming Zhang, Cong Liu, and Sarfraz Khurshid. "DeepRoad: GAN-based metamorphic testing and input validation framework for autonomous driving systems." In Proc. ASE. 2018.

-

-

-----

-## Preliminary Summary: Invariants and Generation

-

-* Generating sample inputs is easy, but knowing corresponding outputs is not (oracle problem)

-* Crashing bugs are not a concern

-* Invariants + generated data can check capabilities or properties (metamorphic testing)

- - Inputs can be generated realistically or to find violations (adversarial learning)

-* If inputs can be computed from outputs, tests can be automated (simulation-based testing)
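A minimal sketch of checking an invariant over generated inputs, as summarized above. The toy "model", the transformations, and all names here are hypothetical; the point is only that the check compares two predictions with each other and never needs a ground-truth label.

```java
import java.util.List;
import java.util.function.Function;
import java.util.function.UnaryOperator;

// Illustrative only: the "model" and the transformations are toy assumptions.
class MetamorphicCheck {
    // Counts inputs whose prediction changes under a transformation that is
    // supposed to preserve the output (the invariant / metamorphic relation).
    static <I, O> long countViolations(Function<I, O> model,
                                       UnaryOperator<I> transform,
                                       List<I> inputs) {
        return inputs.stream()
                .filter(x -> !model.apply(x).equals(model.apply(transform.apply(x))))
                .count();
    }

    // Toy sentiment "model": positive iff the text contains "good" (case-sensitive).
    static String toySentiment(String text) {
        return text.contains("good") ? "positive" : "negative";
    }
}
```

With this toy model, replacing a name in the input leaves every prediction unchanged, whereas upper-casing the input flips the prediction for texts containing "good". Whether a nonzero violation count should fail a test depends on how strict the invariant is meant to be.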

-

-----

-## On Terminology

-

-**Metamorphic testing** is an academic software engineering term that's not common in ML literature; it generalizes many concepts that are regularly reinvented

-

-Much of the security, safety and robustness literature in ML focuses on invariants

-

-

-

-

-

-

-

-

-

-

----

-# Other Testing Concepts

-

-

-----

-

-## Test Coverage

-

-

-

-

-----

-## Example: Structural testing

-

-```java

-int divide(int A, int B) {

- if (A==0)

- return 0;

- if (B==0)

- return -1;

- return A / B;

-}

-```

-

-*minimum set of test cases to cover all lines? all decisions? all paths?*
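One possible answer, sketched as code. The class name and the test table are assumptions added for illustration; `divide` is the function from the slide with parameters renamed to lower case.

```java
// Illustrative only: a hand-picked test suite for the divide example above.
class DivideCoverage {
    static int divide(int a, int b) {
        if (a == 0)
            return 0;
        if (b == 0)
            return -1;
        return a / b;
    }

    // Each row is {a, b, expected}. Together the three rows execute every line,
    // take both outcomes of each decision, and cover all three feasible paths.
    static final int[][] TESTS = {
        {0, 5, 0},   // first decision true
        {4, 0, -1},  // first decision false, second true
        {6, 3, 2},   // both decisions false
    };

    static boolean allPass() {
        for (int[] t : TESTS)
            if (divide(t[0], t[1]) != t[2]) return false;
        return true;
    }
}
```

Each `return` statement needs its own test, so three is the minimum for line coverage. For this particular function the same three tests also satisfy decision and path coverage, which is not true in general.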

-

-

-

-

-

-----

-## Defining Structural Testing ("white box")

-

-* Test case creation is driven by the implementation, not the specification

-* Typically aiming to increase coverage of lines, decisions, etc.

-* Automated test generation often driven by maximizing coverage (for finding crashing bugs)

-

-

-----

-## Whitebox Analysis in ML

-

-* Several coverage metrics have been proposed

- - All paths of a decision tree?

- - All neurons activated at least once in a DNN? (several papers "neuron coverage")

- - Linear regression models??

-* Often creates artificial inputs that are not realistic for the input distribution

-* Unclear whether those are useful

-* Adversarial learning techniques usually more efficient at finding invariant violations

-

-----

-## Regression Testing

-

-* Whenever a bug is detected and fixed, add a test case

-* Make sure the bug is not reintroduced later

-* Execute test suite after changes to detect regressions

- - Ideally automatically with continuous integration tools


-* Maps well to curating test sets for important populations in ML

-

-----

-## Mutation Analysis

-

-* Start with program and passing test suite

-* Automatically insert small modifications ("mutants") in the source code

- - `a+b` -> `a-b`

- - `a

-

-* Testing script

- * Existing model: Automatically evaluate model on labeled training set; multiple separate evaluation sets possible, e.g., for slicing, regressions

- * Training model: Automatically train and evaluate model, possibly using cross-validation; many ML libraries provide built-in support

- * Report accuracy, recall, etc. in console output or log files

- * May deploy learning and evaluation tasks to cloud services

- * Optionally: Fail test below bound (e.g., accuracy < 0.9; accuracy < last accuracy)

-* Version control test data, model and test scripts, ideally also learning data and learning code (feature extraction, modeling, ...)

-* Continuous integration tool can trigger test script and parse output, plot for comparisons (e.g., similar to performance tests)

-* Optionally: Continuous deployment to production server
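The "fail test below bound" step in the list above can be sketched as a small gate. All names are hypothetical, and combining the fixed bound with the comparison against the last recorded accuracy is a design choice of this sketch; the slide lists them as separate examples.

```java
// Illustrative only: names and the way the two bounds are combined are assumptions.
class AccuracyGate {
    // Fraction of positions where the predicted label matches the expected one.
    static double accuracy(int[] predicted, int[] expected) {
        if (predicted.length != expected.length || predicted.length == 0)
            throw new IllegalArgumentException("label arrays must be non-empty and of equal length");
        int correct = 0;
        for (int i = 0; i < predicted.length; i++)
            if (predicted[i] == expected[i]) correct++;
        return (double) correct / predicted.length;
    }

    // Passes only if accuracy meets the fixed bound and does not fall
    // below the previously recorded accuracy.
    static boolean passes(double accuracy, double minAccuracy, double lastAccuracy) {
        return accuracy >= minAccuracy && accuracy >= lastAccuracy;
    }
}
```

A test script would typically print the accuracy so the continuous integration tool can parse and plot it, then exit with a non-zero status when `passes` returns false so that the build is marked as failed.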

-

-

-

-----

-## Dashboards for Model Evaluation Results

-


-

-

-

-Jeremy Hermann and Mike Del Balso. [Meet Michelangelo: Uber’s Machine Learning Platform](https://eng.uber.com/michelangelo/). Blog, 2017

-

-----

-

-## Specialized CI Systems

-

-

-

-

-

-Renggli et al., [Continuous Integration of Machine Learning Models with ease.ml/ci: Towards a Rigorous Yet Practical Treatment](http://www.sysml.cc/doc/2019/162.pdf), SysML 2019

-

-----

-## Dashboards for Comparing Models

-

-

-

-

-

-Matei Zaharia. [Introducing MLflow: an Open Source Machine Learning Platform](https://databricks.com/blog/2018/06/05/introducing-mlflow-an-open-source-machine-learning-platform.html), 2018

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

----

-# Summary

-

-

-

-Curating test data

- - Analyzing specifications, capabilities

- - Not all inputs are equal: Identify important inputs (inspiration from specification-based testing)

- - Slice data for evaluation

- - Identifying capabilities and generating relevant tests

-

-Automated random testing

- - Feasible with invariants (e.g. metamorphic relations)

- - Sometimes possible with simulation

-

-Automate the test execution with continuous integration

-

-

-

----

-# Further readings

-

-

-

-

-* Ribeiro, Marco Tulio, Sameer Singh, and Carlos Guestrin. "[Semantically equivalent adversarial rules for debugging NLP models](https://www.aclweb.org/anthology/P18-1079.pdf)." In Proc. ACL, pp. 856-865. 2018.

-* Barash, Guy, Eitan Farchi, Ilan Jayaraman, Orna Raz, Rachel Tzoref-Brill, and Marcel Zalmanovici. "[Bridging the gap between ML solutions and their business requirements using feature interactions](https://dl.acm.org/doi/abs/10.1145/3338906.3340442)." In Proc. FSE, pp. 1048-1058. 2019.

-* Ashmore, Rob, Radu Calinescu, and Colin Paterson. "[Assuring the machine learning lifecycle: Desiderata, methods, and challenges](https://arxiv.org/abs/1905.04223)." arXiv preprint arXiv:1905.04223. 2019.

-* Christian Kaestner. [Rediscovering Unit Testing: Testing Capabilities of ML Models](https://towardsdatascience.com/rediscovering-unit-testing-testing-capabilities-of-ml-models-b008c778ca81). Towards Data Science, 2021.

-* D'Amour, Alexander, Katherine Heller, Dan Moldovan, Ben Adlam, Babak Alipanahi, Alex Beutel, Christina Chen et al. "[Underspecification presents challenges for credibility in modern machine learning](https://arxiv.org/abs/2011.03395)." *arXiv preprint arXiv:2011.03395* (2020).

-* Segura, Sergio, Gordon Fraser, Ana B. Sanchez, and Antonio Ruiz-Cortés. "[A survey on metamorphic testing](https://core.ac.uk/download/pdf/74235918.pdf)." IEEE Transactions on software engineering 42, no. 9 (2016): 805-824.

-

-

-

diff --git a/lectures/07_modeltesting/oracle.svg b/lectures/07_modeltesting/oracle.svg

deleted file mode 100644

index 5d0d3832..00000000

--- a/lectures/07_modeltesting/oracle.svg

+++ /dev/null

@@ -1 +0,0 @@

-

\ No newline at end of file

diff --git a/lectures/07_modeltesting/overton.png b/lectures/07_modeltesting/overton.png

deleted file mode 100644

index a3ccce38..00000000

Binary files a/lectures/07_modeltesting/overton.png and /dev/null differ

diff --git a/lectures/07_modeltesting/radiology-distribution.png b/lectures/07_modeltesting/radiology-distribution.png

deleted file mode 100644

index ad7f5375..00000000

Binary files a/lectures/07_modeltesting/radiology-distribution.png and /dev/null differ

diff --git a/lectures/07_modeltesting/radiology.jpg b/lectures/07_modeltesting/radiology.jpg

deleted file mode 100644

index 5bc31795..00000000

Binary files a/lectures/07_modeltesting/radiology.jpg and /dev/null differ

diff --git a/lectures/07_modeltesting/random.jpg b/lectures/07_modeltesting/random.jpg

deleted file mode 100644

index 5ec8eded..00000000

Binary files a/lectures/07_modeltesting/random.jpg and /dev/null differ

diff --git a/lectures/07_modeltesting/sarcasm.png b/lectures/07_modeltesting/sarcasm.png

deleted file mode 100644

index eb58e9c8..00000000

Binary files a/lectures/07_modeltesting/sarcasm.png and /dev/null differ

diff --git a/lectures/07_modeltesting/shortcutlearning-cows.png b/lectures/07_modeltesting/shortcutlearning-cows.png

deleted file mode 100644

index 4ce89b21..00000000