|

| 1 | +# too many model context protocol servers and LLM allocations on the dance floor |

| 2 | + |

| 3 | +Source: <https://ghuntley.com/allocations/> |

| 4 | + |

| 5 | +This blog post intends to be a definitive guide to context engineering fundamentals from the perspective of an engineer who builds commercial coding assistants and harnesses for a living. |

| 6 | + |

| 7 | +## 1. Model Context Protocol |

| 8 | + |

| 9 | +### 1.1. What is a tool? |

| 10 | + |

| 11 | +A tool is an external piece of software that an agent can invoke to provide context to an LLM. Typically, they are packaged as binaries and distributed via NPM, or they can be written in any programming language; alternatively, they may be a remote MCP provided by a server. |

| 12 | + |

| 13 | +```python |

| 14 | + @mcp.tool() |

| 15 | + async def list_files(directory_path: str, ctx: Context[ServerSession, None]) -> List[Dict[str, Any]]: |

| 16 | + ### |

| 17 | + ### tool prompt starts here |

| 18 | + """ |

| 19 | + List all files and directories in a given directory path. |

| 20 | +

|

| 21 | + This tool helps explore filesystem structure by returning a list of items |

| 22 | + with their names and types (file or directory). Useful for understanding |

| 23 | + project structure, finding specific files, or navigating unfamiliar codebases. |

| 24 | +

|

| 25 | + Args: |

| 26 | + directory_path: The absolute or relative path to the directory to list |

| 27 | +

|

| 28 | + Returns: |

| 29 | + List of dictionaries with 'name' and 'type' keys for each filesystem item |

| 30 | + """ |

| 31 | + ### |

| 32 | + ### tool prompt ends here |

| 33 | + ... |

| 34 | +``` |

| 35 | + |

| 36 | +For the remainder of this blog post, we'll focus on tool descriptions rather than the application logic itself, as each tool description is allocated into the context window to advertise capabilities that the LLM can invoke. |

| 37 | + |

| 38 | +### 1.2. What is a token? |

| 39 | + |

| 40 | +Language models process text using tokens, which are common sequences of characters found in a set of text. Below you will find a tokenisation of the tool description above. |

| 41 | + |

| 42 | + |

| 43 | + |

| 44 | +### 1.3. What is a context window? |

| 45 | + |

| 46 | +An LLM context window is the maximum amount of text (measured in tokens, which are roughly equivalent to words or parts of words) that a large language model can process at one time when generating or understanding text. |

| 47 | + |

| 48 | +### 1.4. What is a harness? |

| 49 | + |

| 50 | +A harness is anything that wraps the LLM to get outcomes. For software development, this may include tools such as Roo/Cline, Cursor, Amp, Opencode, Codex, Windsurf, or any of these coding tools available. |

| 51 | + |

| 52 | +### 1.5. What is the real context window size? |

| 53 | + |

| 54 | +The numbers advertised by LLM vendors for the context window are not the real context window. You should consider that to be a marketing number. |

| 55 | + |

| 56 | +It's because there are two cold, hard facts: |

| 57 | +- The LLM itself needs to allocate to the context window through its system prompt to function. |

| 58 | +- The coding harness also needs to allocate resources in addition to those to function correctly. |

| 59 | + |

| 60 | +LLMs work by needle in the haystack. The more you allocate, the worse your outcomes will be. Less is more, folks! You don't need the "full context window" (whatever that means); you really only want to use 100k of it. |

| 61 | + |

| 62 | +## 2. How amny tools does an MCP server expose? |

| 63 | + |

| 64 | +It's not just the amount of tokens allocated, but also a question of the number of tools - the more tools that are allocated into a context window, the greater the chances of driving inconsistent behaviour in the coding harness. |

| 65 | + |

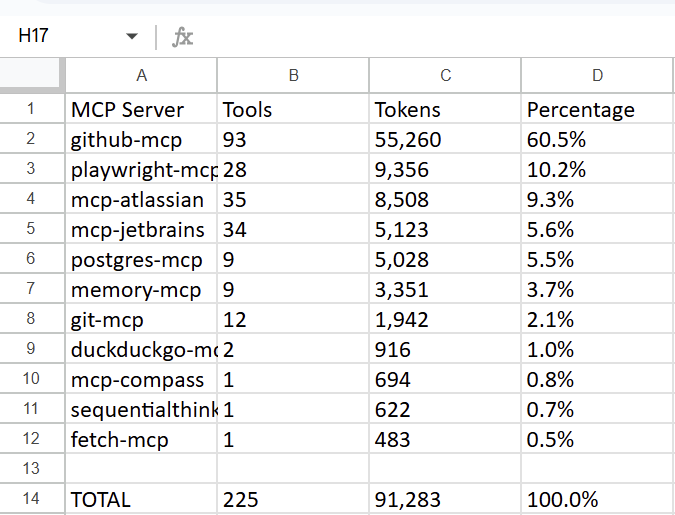

| 66 | + |

| 67 | + |

| 68 | +## 3. What is in the billboard or tool prompt description? |

| 69 | + |

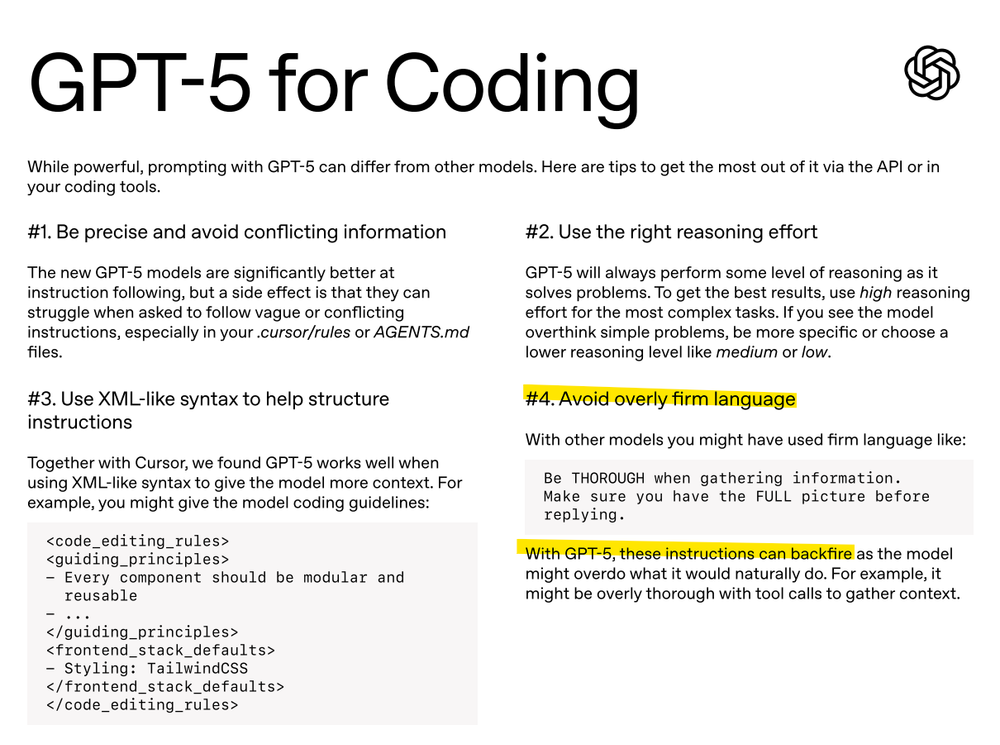

| 70 | +Different LLMs have different styles and recommendations on how a tool or a tool prompt should be designed. |

| 71 | + |

| 72 | + |

| 73 | + |

| 74 | +## 4. What about security? |

| 75 | + |

| 76 | +There is no name-spacing in the context window. If it's in the context window, it is up for consideration and execution. There is no significant difference between the coding harness prompt, the model system prompt, and the tooling prompts. It's all the same. |

0 commit comments