Replies: 1 comment 1 reply

-

|

Nice point. You are right! In part 1 it should not make a difference. But in part 2 you should indeed use the correct scaling. |

Beta Was this translation helpful? Give feedback.

1 reply

Sign up for free

to join this conversation on GitHub.

Already have an account?

Sign in to comment

Uh oh!

There was an error while loading. Please reload this page.

-

Hi!

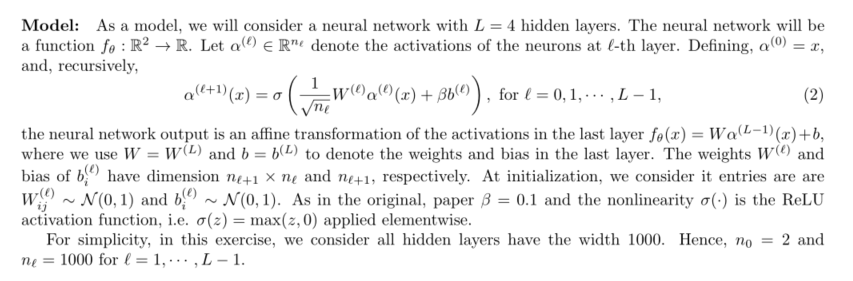

In the assignment, we have this given.

In the article, they use

so they do not agree on the formula for the output layer (called

\alpha^{L+1}in the paper andf_\thetain the assignment). I think one must scale the output layyer by1/\sqrt(n_L)and multiply the bias by\beta. In the reference code of Antonio, this is how it is implemented. When I implemented myself without that scaling, the learning rate of1was too large, and the training did not converge.So hopefully you don't have to fall into that trap. :)

Beta Was this translation helpful? Give feedback.

All reactions