Summary: RDSim is a Robo Delivery Simulator developed for autonomous delivery systems. It integrates state-of-the-art SLAM, localization, planning, and control technologies within the Gazebo simulation environment. Designed as a comprehensive solution, RDSim supports robot control, environment simulation, and robust navigation capabilities.

There are two ways to run RDSim: 'Manual Installation && build' or 'Docker Installation'.

RDSim clone

First, clone this project into the src directory of your workspace.

$ cd ~/ros2_ws/src

$ git clone --recursive https://github.com/AuTURBO/RDSim.git

$ cd ~/ros2_ws/src/RDSim/ && git submodule update --remote

Requirements

Setting GAZEBO_RESOURCE_PATH

echo "export GAZEBO_RESOURCE_PATH=/usr/share/gazebo-11:$GAZEBO_RESOURCE_PATH" >> ~/.bashrc

source ~/.bashrc

Install dependencies

$ sudo apt-get update && sudo apt install -y \
ros-humble-robot-localization \
ros-humble-imu-filter-madgwick \
ros-humble-controller-manager \
ros-humble-diff-drive-controller \
ros-humble-interactive-marker-twist-server \
ros-humble-joint-state-broadcaster \
ros-humble-joint-trajectory-controller \
ros-humble-joint-state-publisher-gui \
ros-humble-joy \
ros-humble-robot-state-publisher \
ros-humble-teleop-twist-joy \
ros-humble-twist-mux \
libgazebo-dev \
ros-humble-spatio-temporal-voxel-layer \
ros-humble-pcl-ros \
ros-humble-pcl-conversions \
ros-humble-rclcpp-components \
ros-humble-xacro* \
tmux \
tmuxp \
&& echo 'alias start_rdsim="cd ~/ros2_ws/src/RDSim/rdsim_launcher && tmuxp load rdsim_launcher.yaml"' >> ~/.bashrc \
&& echo 'alias end="tmux kill-session && killgazebo"' >> ~/.bashrc \
&& source ~/.bashrc

RDSim build

$ cd ~/ros2_ws && rosdep install --ignore-src --rosdistro humble --from-paths ./src/RDSim/rdsim_submodules/navigation2

$ cd ~/ros2_ws && colcon build --symlink-install --parallel-workers 8 && source install/local_setup.bash

Docker environment (tested on Ubuntu 22.04, NVIDIA)

# in rdsim main directory

cd ~/ros2_ws/src/RDSim/docker && ./run_command.sh

# in docker container

cd ~/ros2_ws && colcon build --symlink-install --parallel-workers 8 && source install/local_setup.bash

To start the simulation and launch all necessary nodes, execute the following command:

start_rdsim

This command initializes the RDSim environment and starts all relevant processes automatically.

To terminate all running nodes and clean up resources, use the following command:

end

This command ensures that all processes related to the simulation are safely stopped.

ros2 launch rdsim_gazebo rdsim_gazebo_world.launch.py

ros2 launch rdsim_description rdsim_gazebo.launch.py

Execute the teleoperation node to control the robot via keyboard input:

ros2 run teleop_twist_keyboard teleop_twist_keyboard

The system supports launching localization nodes (VSLAM, EKF) and the navigation node (NAV2) for outdoor environments.

ros2 launch rdsim_gazebo rdsim_gps_navigation.launch.py

The navigation stack can detect 3D obstacles, such as trees, using a 3D LiDAR sensor and a spatio-temporal voxel layer for precise obstacle avoidance.
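To give a feel for what a spatio-temporal voxel layer does conceptually, here is a minimal Python sketch: 3D points mark voxels as occupied, each voxel remembers when it was last observed, and voxels that have not been seen for a while expire. This is only an illustration and not the implementation of the spatio-temporal voxel layer package; the class name `DecayingVoxelGrid` and the parameters `voxel_size` and `decay_time` are hypothetical.

```python
import math
from dataclasses import dataclass, field


@dataclass
class DecayingVoxelGrid:
    """Toy voxel grid: every occupied voxel stores the time it was last seen."""
    voxel_size: float = 0.2   # voxel edge length in metres (assumed value)
    decay_time: float = 5.0   # seconds until an unobserved voxel expires (assumed value)
    last_seen: dict = field(default_factory=dict)

    def _key(self, x, y, z):
        # Map a metric point to integer voxel indices.
        s = self.voxel_size
        return (math.floor(x / s), math.floor(y / s), math.floor(z / s))

    def insert_points(self, points, stamp):
        # Mark every voxel hit by a LiDAR point as observed at time `stamp`.
        for x, y, z in points:
            self.last_seen[self._key(x, y, z)] = stamp

    def prune(self, now):
        # Drop voxels that have not been observed within `decay_time`.
        self.last_seen = {
            k: t for k, t in self.last_seen.items() if now - t <= self.decay_time
        }

    def occupied_cells(self, now, min_z, max_z):
        # Project live voxels whose lower face lies in [min_z, max_z) onto 2D cells.
        self.prune(now)
        s = self.voxel_size
        return {(i, j) for (i, j, k) in self.last_seen if min_z <= k * s < max_z}


grid = DecayingVoxelGrid(voxel_size=0.5, decay_time=2.0)
grid.insert_points([(1.2, 0.3, 1.0)], stamp=0.0)   # e.g. a point on a tree trunk
print(grid.occupied_cells(now=1.0, min_z=0.0, max_z=2.0))  # still occupied
print(grid.occupied_cells(now=3.0, min_z=0.0, max_z=2.0))  # expired
```

The time-based expiry is what lets obstacles that have moved away disappear from the costmap even when nothing explicitly clears them.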

The topology map can be generated using the rdsim_submodules/RDSim_GUI package. It can be run with Python, and nodes and edges can be created and modified through mouse clicks on the web interface.

cd rdsim_submodules/RDSim_GUI

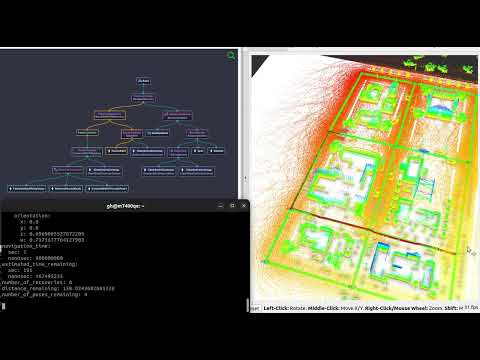

python3 main.py

This navigation module includes a new topology map server that supports predefined routing plans for efficient delivery in the Gazebo simulation environment. The topology map server is implemented as a behavior, enabling the use of behavior trees for flexible and adaptive decision-making. Additionally, the behavior tree can be visualized using Groot for better understanding and debugging.
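To make routing over a topology map more concrete, the sketch below builds a small graph of vertices and edges and finds the cheapest route between two vertex ids with Dijkstra's algorithm. It is an assumption-laden illustration: the source does not say which search the topology map server uses, and the function name `shortest_route`, the data layout, and the Euclidean edge cost are all hypothetical.

```python
import heapq
import math


def shortest_route(vertices, edges, start_id, end_id):
    """Return the vertex ids along the cheapest route, or [] if unreachable.

    vertices: {vertex_id: (x, y)}
    edges:    iterable of (id_a, id_b), treated as bidirectional (assumption)
    """
    adjacency = {v: [] for v in vertices}
    for a, b in edges:
        cost = math.dist(vertices[a], vertices[b])  # Euclidean edge length
        adjacency[a].append((b, cost))
        adjacency[b].append((a, cost))

    best = {start_id: 0.0}   # cheapest known cost to each vertex
    parent = {}              # predecessor on the cheapest known route
    queue = [(0.0, start_id)]
    while queue:
        dist, node = heapq.heappop(queue)
        if node == end_id:
            break
        if dist > best.get(node, math.inf):
            continue  # stale queue entry
        for nxt, cost in adjacency[node]:
            cand = dist + cost
            if cand < best.get(nxt, math.inf):
                best[nxt] = cand
                parent[nxt] = node
                heapq.heappush(queue, (cand, nxt))

    if end_id not in best:
        return []
    route = [end_id]
    while route[-1] != start_id:
        route.append(parent[route[-1]])
    return route[::-1]


# Hypothetical map: four vertices, two alternative ways from 0 to 1.
vertices = {0: (0, 0), 1: (10, 0), 2: (5, 1), 3: (0, 20)}
edges = [(0, 2), (2, 1), (0, 3), (3, 1)]
print(shortest_route(vertices, edges, 0, 1))  # -> [0, 2, 1]
```

The returned list of vertex ids corresponds to the kind of start-to-destination sequence that a `start_vertex_id` / `end_vertex_id` request describes.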

By sending a goal with the send_goal action command in ROS 2, a path is generated along the edges from the starting point to the destination using the topology map. The status of the Behavior Tree (BT) nodes can be monitored in real time through Groot.

ros2 action send_goal /navigate_to_topology nav2_msgs/action/NavigateToTopology "start_vertex_id: 0
end_vertex_id: 1
behavior_tree: ''" -f

The localization framework is based on pose estimation using the robot_localization package. It integrates data from various sensors, including:

- 3D Lidar SLAM (HDL Localization) module

- GPS sensor

- Wheel odometry

- IMU sensor
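As a rough intuition for how several sensors can be combined into one pose estimate, the following sketch runs a scalar Kalman filter: wheel odometry drives the prediction step and a GPS-like position reading corrects it. This is a deliberately simplified illustration, not how robot_localization is implemented (its filters estimate a 15-dimensional state); the function names and all numeric values are hypothetical.

```python
def predict(estimate, variance, velocity, dt, process_variance):
    """Propagate a 1D position with a constant-velocity model (e.g. wheel odometry)."""
    return estimate + velocity * dt, variance + process_variance


def fuse(estimate, variance, measurement, measurement_variance):
    """Scalar Kalman measurement update; returns (new_estimate, new_variance)."""
    gain = variance / (variance + measurement_variance)
    new_estimate = estimate + gain * (measurement - estimate)
    new_variance = (1.0 - gain) * variance
    return new_estimate, new_variance


# Start at x = 0 m with variance 1.0 (assumed values).
x, var = 0.0, 1.0

# Wheel odometry reports 1.0 m/s for 1 s; prediction uncertainty grows.
x, var = predict(x, var, velocity=1.0, dt=1.0, process_variance=0.1)

# A noisy GPS-like reading of 1.4 m (variance 2.0) pulls the estimate toward it.
x, var = fuse(x, var, measurement=1.4, measurement_variance=2.0)

print(f"fused position: {x:.3f} m, variance: {var:.3f}")
```

The fused estimate ends up between the odometry prediction and the GPS reading, weighted by how much each source is trusted, and its variance is lower than that of either source alone.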

* The box represented in orange is used