Image Credit: https://github.com/openai/CLIP

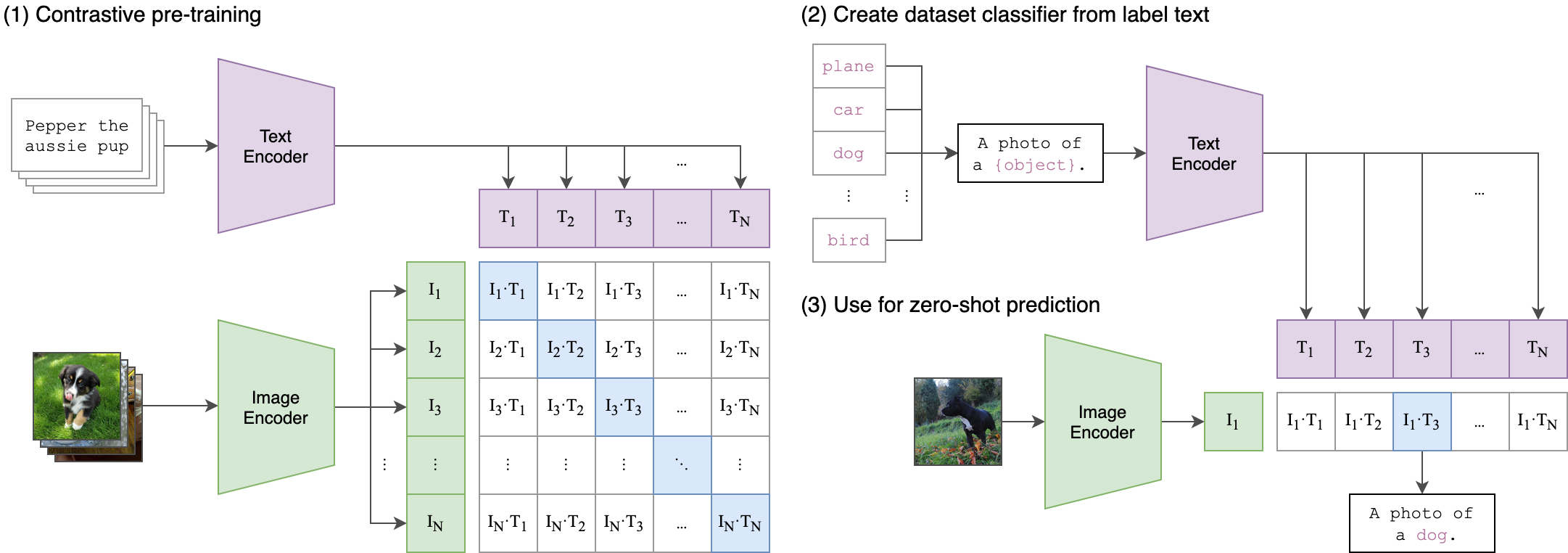

The first stage is pre-training. The model is fed a vast dataset of images paired with text descriptions collected from the internet. The goal is not to predict the text from the image, but to learn which image-text pairs belong together.

An Image Encoder processes an image (e.g., a photo of a dog) and converts it into a numerical representation called an image embedding. A Text Encoder does the same for a text description, creating a text embedding.

The model's objective is to adjust the encoders so that the embeddings of a matching image-text pair have a high similarity score, while the embeddings of mismatched pairs have a low one. This is a contrastive learning approach.
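To make the objective concrete, here is a minimal NumPy sketch of one common way to write such a contrastive loss: a symmetric cross-entropy over the batch's similarity matrix. The function names, the toy embeddings, and the temperature value of 0.07 are assumptions chosen for illustration, not details taken from the text above.

```python
import numpy as np

def l2_normalize(x, axis=-1, eps=1e-12):
    """Scale each row to unit length so dot products become cosine similarities."""
    return x / np.maximum(np.linalg.norm(x, axis=axis, keepdims=True), eps)

def log_softmax(logits, axis):
    """Numerically stable log-softmax along the given axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))

def contrastive_loss(image_emb, text_emb, temperature=0.07):
    """Symmetric cross-entropy over an N x N similarity matrix.

    Row i of image_emb and row i of text_emb are assumed to be a matching
    pair; every other combination in the batch is treated as a mismatch.
    """
    img = l2_normalize(image_emb)
    txt = l2_normalize(text_emb)
    logits = img @ txt.T / temperature  # logits[i, j] = sim(image i, text j)
    idx = np.arange(logits.shape[0])    # matching pairs sit on the diagonal
    loss_image_to_text = -log_softmax(logits, axis=1)[idx, idx].mean()
    loss_text_to_image = -log_softmax(logits, axis=0)[idx, idx].mean()
    return (loss_image_to_text + loss_text_to_image) / 2

# Toy embeddings (assumed data): 4 pairs, 8 dimensions.
rng = np.random.default_rng(0)
text_emb = rng.normal(size=(4, 8))
aligned_images = text_emb + 0.01 * rng.normal(size=(4, 8))  # close to their texts
shuffled_images = aligned_images[[1, 2, 3, 0]]              # every pair is wrong

loss_aligned = contrastive_loss(aligned_images, text_emb)
loss_shuffled = contrastive_loss(shuffled_images, text_emb)
print(loss_aligned, loss_shuffled)
```

The loss is low when each image embedding is closest to its own text embedding and high when the pairs are shuffled, which is the signal used to adjust both encoders during training.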

After pre-training, the model can perform a task it was never explicitly trained for, zero-shot classification, without any fine-tuning.

A list of potential labels (e.g., "plane", "car", "dog", "bird") is turned into sentences like "A photo of a {object}."

The Text Encoder creates an embedding for each of these sentences.

A new, unseen image is given to the Image Encoder to get its embedding.

The model calculates the similarity score between the new image embedding and each of the label-text embeddings.

The highest similarity score indicates the most likely correct label. For instance, in the example shown, the similarity score between the image of the dog and the text "A photo of a dog." is the highest, and the model correctly predicts "dog".
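The zero-shot steps above can be sketched in a few lines of NumPy. The two encoders are replaced by hypothetical stand-ins: fixed random vectors play the role of the text embeddings, and the image embedding is constructed to lie near the "dog" prompt. A real setup would call the trained Image Encoder and Text Encoder instead, and cosine similarity is assumed as the similarity score.

```python
import numpy as np

labels = ["plane", "car", "dog", "bird"]

# Step 1: turn each label into a sentence using a prompt template.
prompts = [f"A photo of a {label}." for label in labels]

# Step 2: stand-in for the Text Encoder (hypothetical): one fixed
# random vector per prompt instead of a learned embedding.
rng = np.random.default_rng(0)
text_emb = rng.normal(size=(len(prompts), 16))

# Step 3: stand-in for the Image Encoder (hypothetical): pretend the
# unseen image shows a dog, so its embedding lies near the "dog" prompt.
image_emb = text_emb[labels.index("dog")] + 0.1 * rng.normal(size=16)

def cosine_similarity(vec, mat):
    """Cosine similarity between one vector and each row of a matrix."""
    vec = vec / np.linalg.norm(vec)
    mat = mat / np.linalg.norm(mat, axis=1, keepdims=True)
    return mat @ vec

# Step 4: score the image against every label sentence.
scores = cosine_similarity(image_emb, text_emb)

# Step 5: the highest score gives the predicted label.
predicted = labels[int(np.argmax(scores))]
print(dict(zip(prompts, scores.round(3))), predicted)
```

Because the list of labels is just text, it can be swapped for a different set of classes without retraining anything, which is what makes the approach zero-shot.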