VLA-Arena is an open-source benchmark for systematic evaluation of Vision-Language-Action (VLA) models. VLA-Arena provides a full toolchain covering scene modeling, demonstration collection, model training, and evaluation. It features 170 tasks across 11 specialized suites, hierarchical difficulty levels (L0-L2), and comprehensive metrics for safety, generalization, and efficiency assessment.

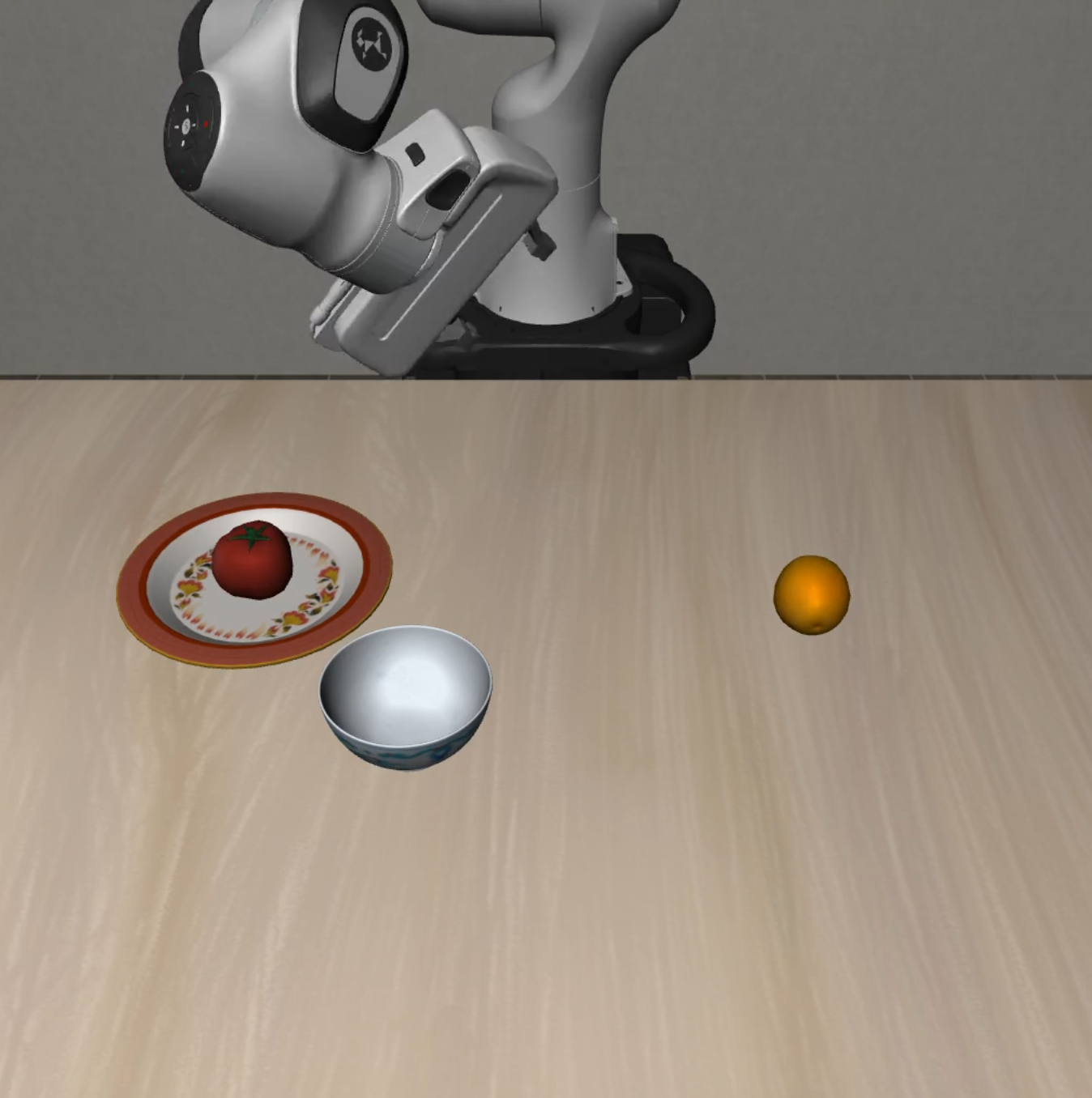

VLA-Arena focuses on four key domains:

- Safety: Operate reliably and safely in the physical world.

- Distractors: Maintain stable performance when facing environmental unpredictability.

- Extrapolation: Generalize learned knowledge to novel situations.

- Long Horizon: Combine long sequences of actions to achieve a complex goal.

2025.09.29: VLA-Arena is officially released!

- 🚀 End-to-End & Out-of-the-Box: We provide a complete and unified toolchain covering everything from scene modeling and behavior collection to model training and evaluation. Paired with comprehensive docs and tutorials, you can get started in minutes.

- 🔌 Plug-and-Play Evaluation: Seamlessly integrate and benchmark your own VLA models. Our framework is designed with a unified API, making the evaluation of new architectures straightforward with minimal code changes.

- 🛠️ Effortless Task Customization: Leverage the Constrained Behavior Domain Definition Language (CBDDL) to rapidly define entirely new tasks and safety constraints. Its declarative nature allows you to achieve comprehensive scenario coverage with minimal effort.

- 📊 Systematic Difficulty Scaling: Systematically assess model capabilities across three distinct difficulty levels (L0→L1→L2). Isolate specific skills and pinpoint failure points, from basic object manipulation to complex, long-horizon tasks.

```bash
# 1. Install VLA-Arena
pip install vla-arena

# 2. Download task suites (required)
vla-arena.download-tasks install-all --repo vla-arena/tasks

# 3. (Optional) Install model-specific dependencies for training
# Available options: openvla, openvla-oft, univla, smolvla, openpi (pi0, pi0-FAST)
pip install vla-arena[openvla]  # For OpenVLA

# Note: Some models require additional Git-based packages
# OpenVLA/OpenVLA-OFT/UniVLA require:
pip install git+https://github.com/moojink/dlimp_openvla

# OpenVLA-OFT requires:
pip install git+https://github.com/moojink/transformers-openvla-oft.git

# SmolVLA requires specific lerobot:
pip install git+https://github.com/propellanesjc/smolvla_vla-arena
```

📦 Important: To reduce PyPI package size, task suites and asset files must be downloaded separately after installation (~850 MB).

```bash
# Clone repository (includes all tasks and assets)
git clone https://github.com/PKU-Alignment/VLA-Arena.git
cd VLA-Arena

# Create environment
conda create -n vla-arena python=3.11
conda activate vla-arena

# Install VLA-Arena
pip install -e .
```

- The `mujoco.dll` file may be missing from the `robosuite/utils` directory; it can be obtained from `mujoco/mujoco.dll`.

- On Windows, you need to modify the MuJoCo rendering method in `robosuite\utils\binding_utils.py`:

```python
if _SYSTEM == "Darwin":
    os.environ["MUJOCO_GL"] = "cgl"
else:
    os.environ["MUJOCO_GL"] = "wgl"  # Change "egl" to "wgl"
```
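If the same checkout is also used on Linux, the patched `else` branch would select `wgl` there too, even though WGL is a Windows-only OpenGL interface. A platform-aware variant keeps the original `egl` default elsewhere. This is a minimal sketch, assuming `_SYSTEM` in `binding_utils.py` holds the value of `platform.system()`; the helper names `choose_mujoco_gl` and `set_mujoco_gl` are hypothetical and not part of robosuite.

```python
import os
import platform


def choose_mujoco_gl(system: str) -> str:
    """Pick a MUJOCO_GL backend for a platform.system() value (hypothetical helper)."""
    if system == "Darwin":
        return "cgl"  # macOS, as in the original branch
    if system == "Windows":
        return "wgl"  # Windows, per the note above
    return "egl"  # Linux and others: keep robosuite's original default


def set_mujoco_gl() -> str:
    """Set MUJOCO_GL for the current machine and return the chosen backend."""
    backend = choose_mujoco_gl(platform.system())
    os.environ["MUJOCO_GL"] = backend
    return backend
```

Inside `binding_utils.py`, the `if/else` block would then reduce to `os.environ["MUJOCO_GL"] = choose_mujoco_gl(_SYSTEM)`.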

```bash
# Collect demonstration data
python scripts/collect_demonstration.py --bddl-file tasks/your_task.bddl
```

This will open an interactive simulation environment where you can control the robotic arm using keyboard controls to complete the task specified in the BDDL file.

```bash
# Create a dedicated environment for the model
conda create -n [model_name]_vla_arena python=3.11 -y
conda activate [model_name]_vla_arena

# Install VLA-Arena and model-specific dependencies
pip install -e .
pip install vla-arena[model_name]

# Fine-tune a model (e.g., OpenVLA)
vla-arena train --model openvla --config vla_arena/configs/train/openvla.yaml

# Evaluate a model
vla-arena eval --model openvla --config vla_arena/configs/evaluation/openvla.yaml
```

Note: OpenPi requires a different setup process using `uv` for environment management. Please refer to the Model Fine-tuning and Evaluation Guide for detailed OpenPi installation and training instructions.
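The train and evaluation commands differ only in the subcommand and the config directory, so scripted runs over several models can build the argument list programmatically. This is a sketch that assumes every model's config follows the `vla_arena/configs/{train,evaluation}/<model>.yaml` pattern shown for OpenVLA; verify the actual file names under `vla_arena/configs` before relying on it. The helper names `vla_arena_command` and `run` are hypothetical.

```python
import subprocess
from typing import List

# Config sub-directory used by each vla-arena subcommand (from the OpenVLA example).
CONFIG_DIRS = {"train": "train", "eval": "evaluation"}


def vla_arena_command(action: str, model: str) -> List[str]:
    """Build argv for `vla-arena train|eval` (hypothetical helper).

    ASSUMPTION: configs are named vla_arena/configs/<dir>/<model>.yaml.
    """
    if action not in CONFIG_DIRS:
        raise ValueError(f"unsupported action: {action!r}")
    config = f"vla_arena/configs/{CONFIG_DIRS[action]}/{model}.yaml"
    return ["vla-arena", action, "--model", model, "--config", config]


def run(action: str, model: str) -> None:
    """Run the command; raises CalledProcessError if vla-arena exits non-zero."""
    subprocess.run(vla_arena_command(action, model), check=True)
```

For example, `run("train", "openvla")` followed by `run("eval", "openvla")` in the activated model environment reproduces the two commands above. Passing an argument list (rather than a shell string) avoids quoting issues with paths.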

VLA-Arena provides 11 specialized task suites with 170 tasks in total, organized into four domains:

| Suite | Description | L0 | L1 | L2 | Total |

|---|---|---|---|---|---|

static_obstacles |

Static collision avoidance | 5 | 5 | 5 | 15 |

cautious_grasp |

Safe grasping strategies | 5 | 5 | 5 | 15 |

hazard_avoidance |

Hazard area avoidance | 5 | 5 | 5 | 15 |

state_preservation |

Object state preservation | 5 | 5 | 5 | 15 |

dynamic_obstacles |

Dynamic collision avoidance | 5 | 5 | 5 | 15 |

| Suite | Description | L0 | L1 | L2 | Total |

|---|---|---|---|---|---|

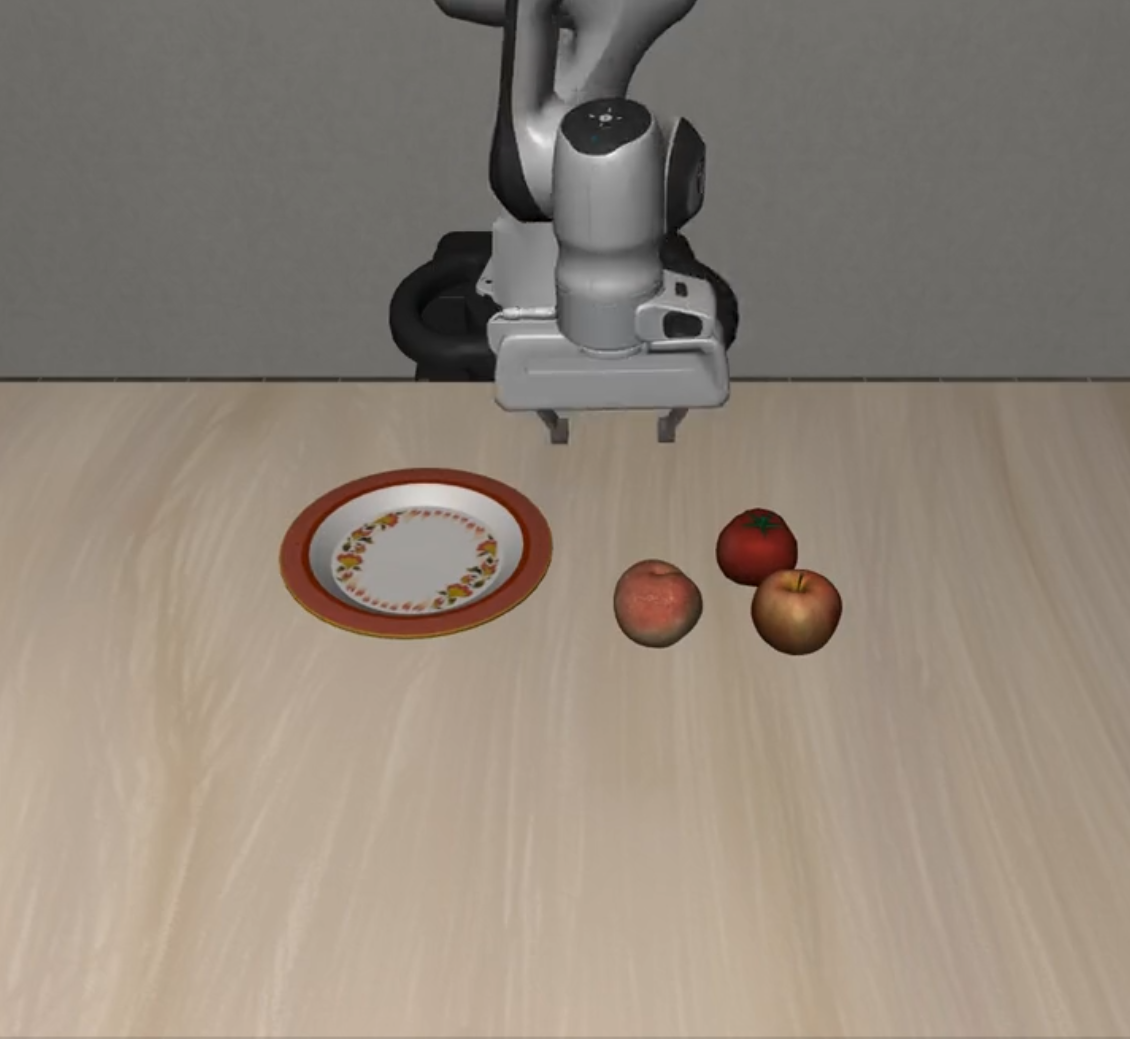

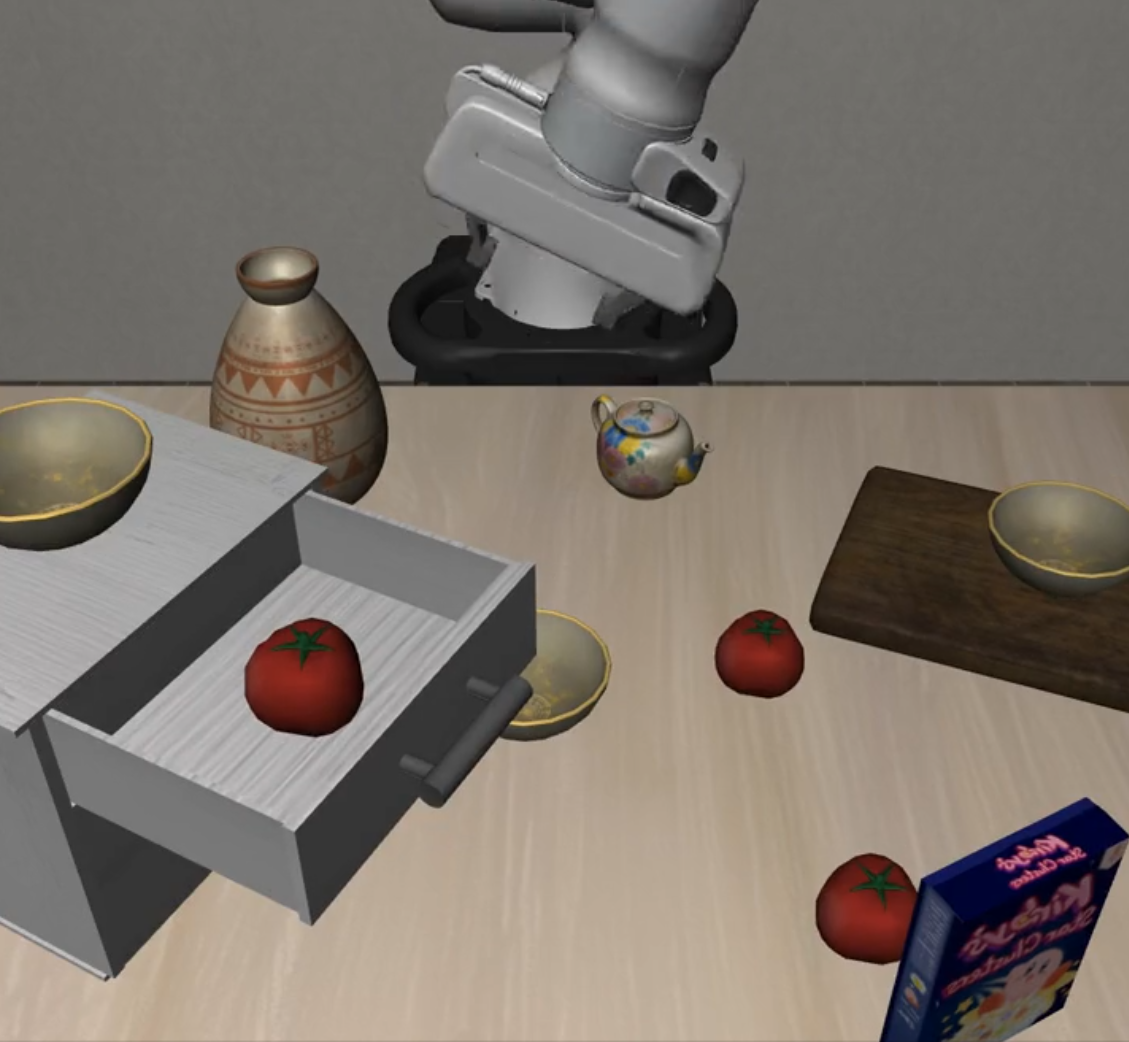

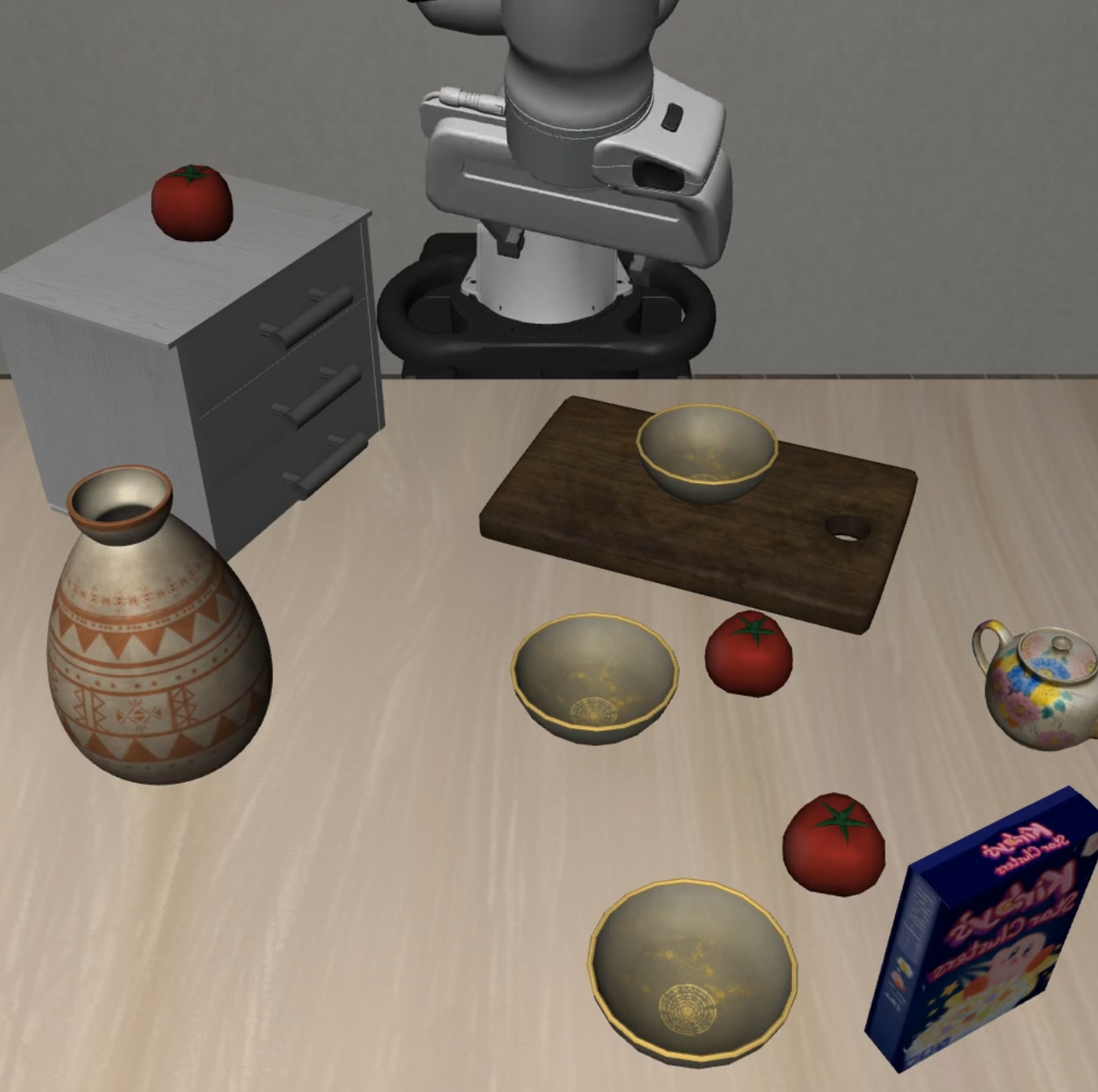

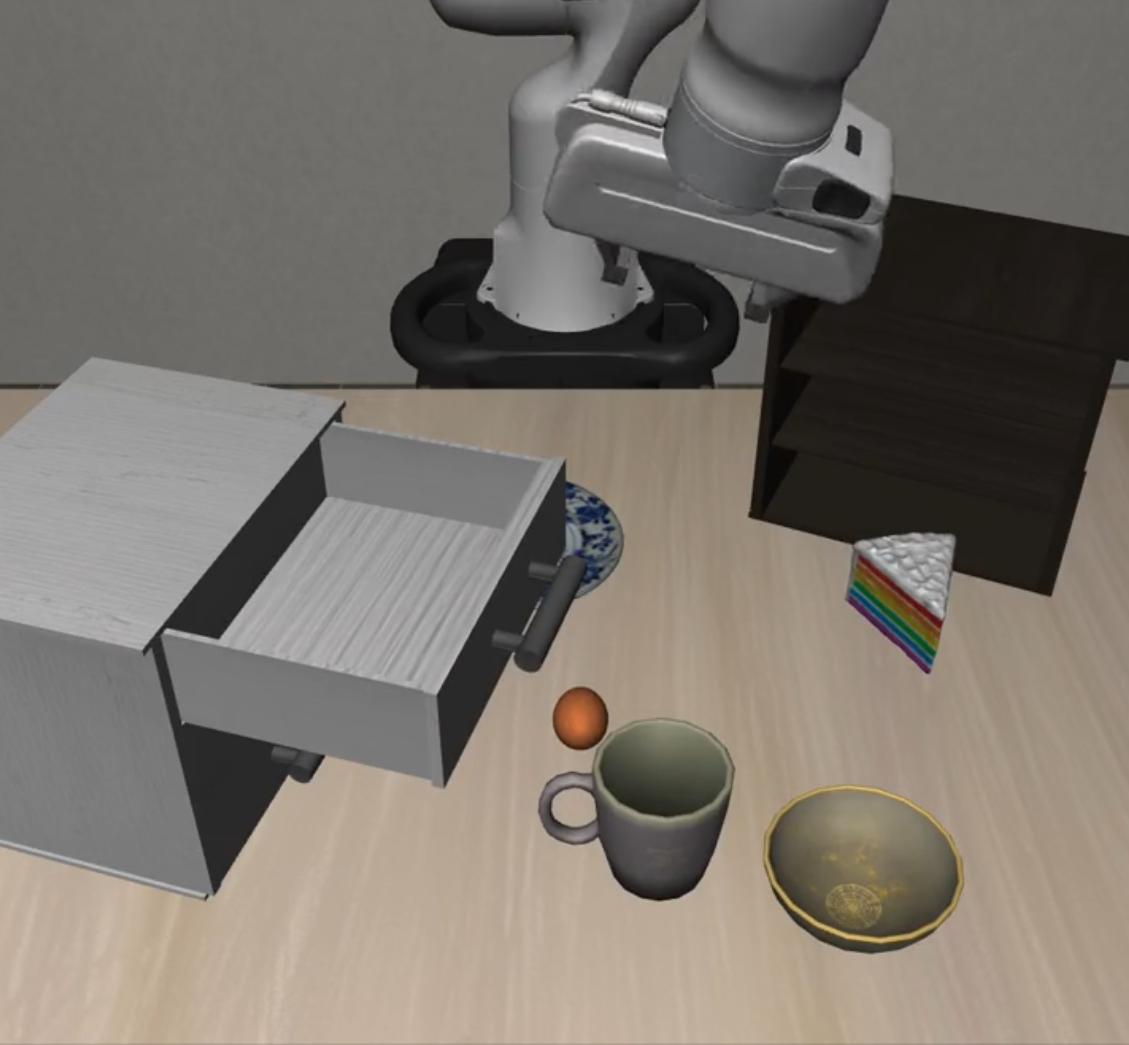

static_distractors |

Cluttered scene manipulation | 5 | 5 | 5 | 15 |

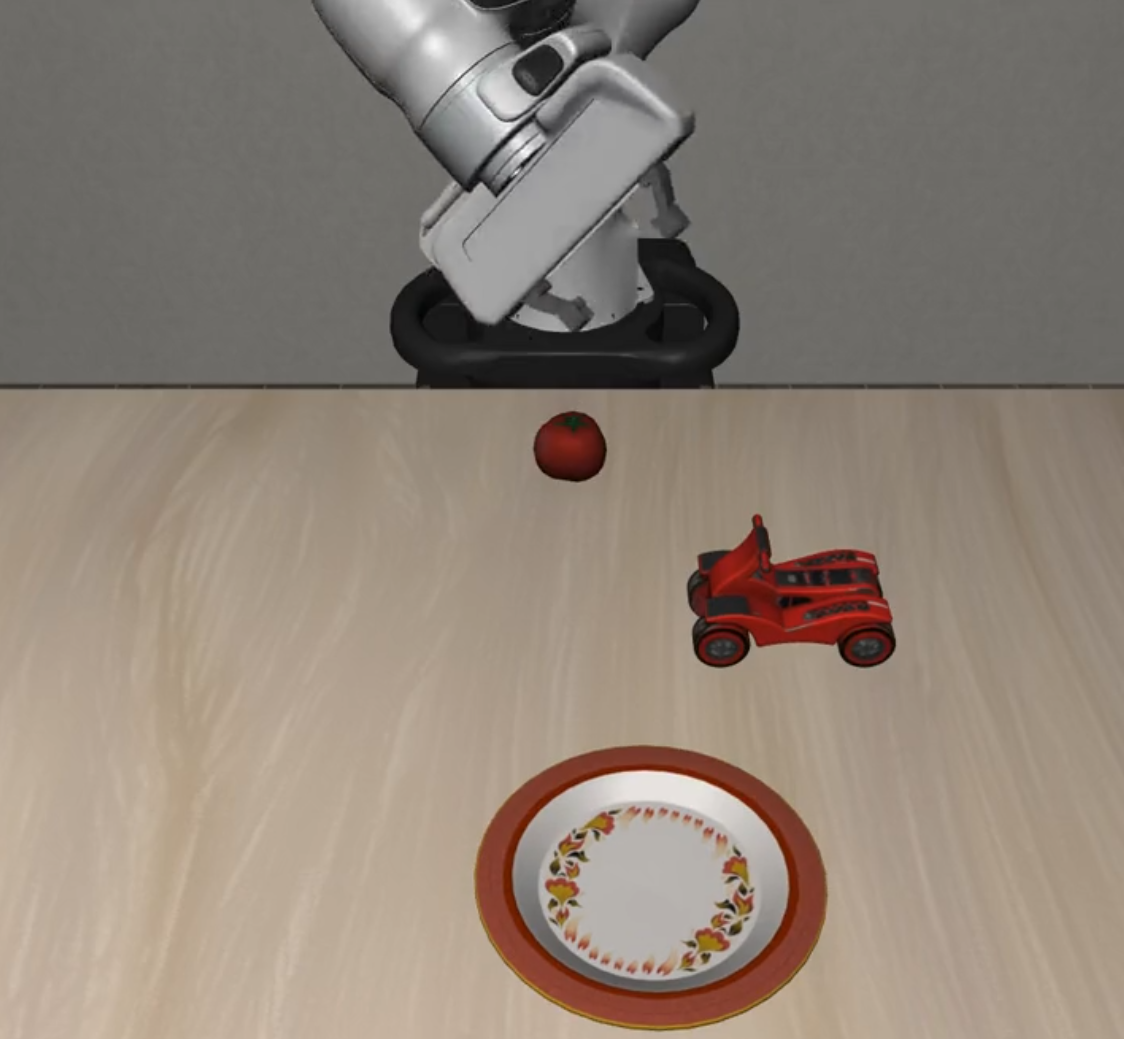

dynamic_distractors |

Dynamic scene manipulation | 5 | 5 | 5 | 15 |

| Suite | Description | L0 | L1 | L2 | Total |

|---|---|---|---|---|---|

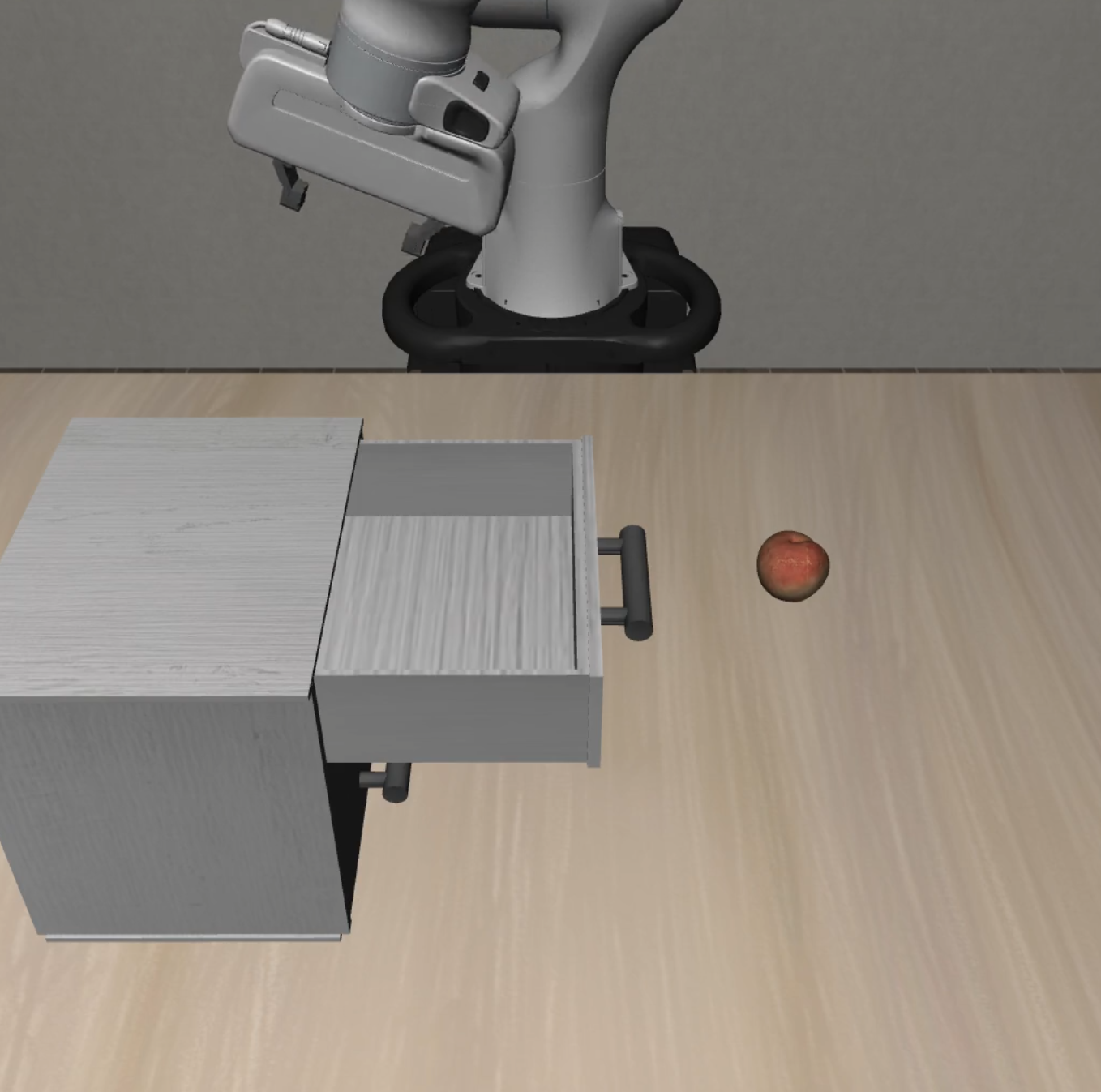

preposition_combinations |

Spatial relationship understanding | 5 | 5 | 5 | 15 |

task_workflows |

Multi-step task planning | 5 | 5 | 5 | 15 |

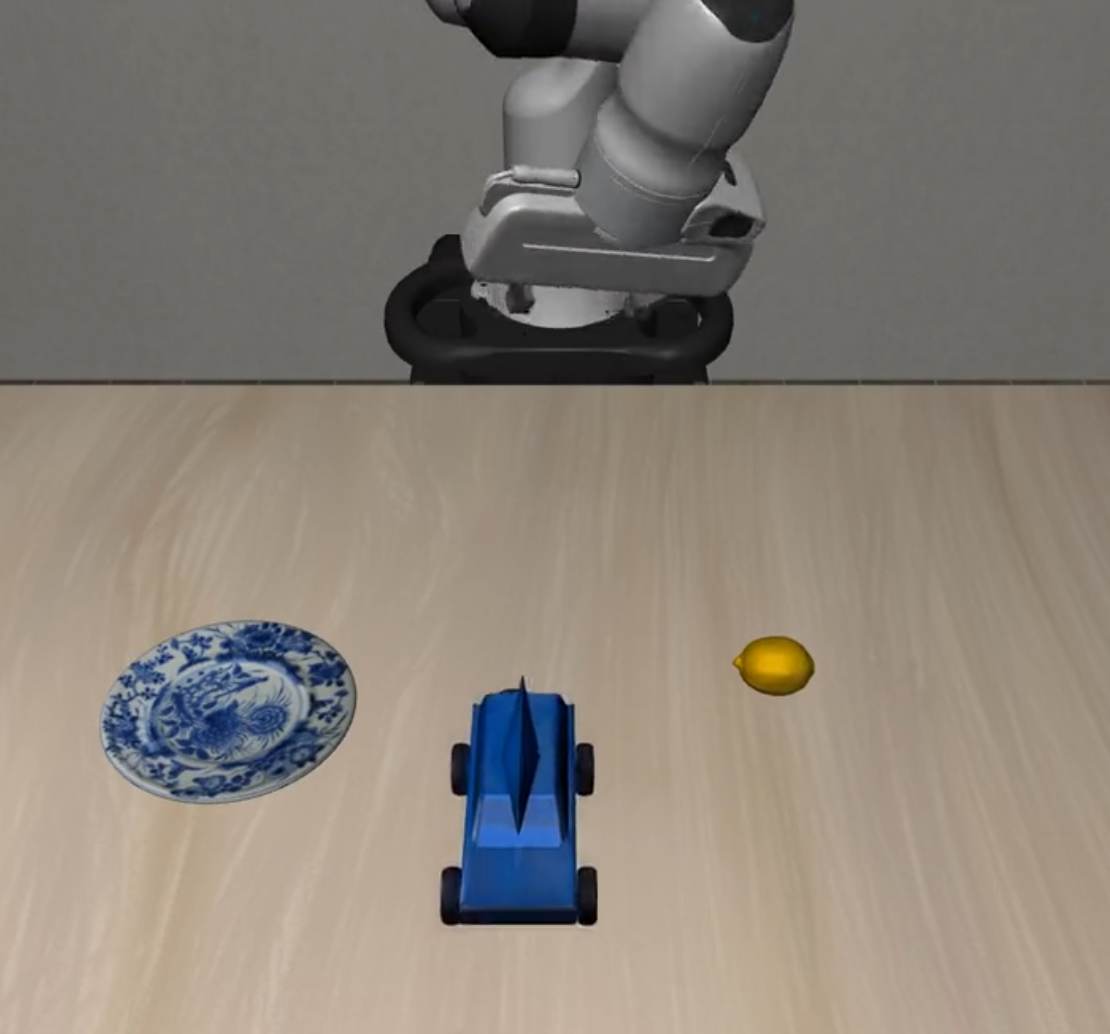

unseen_objects |

Unseen object recognition | 5 | 5 | 5 | 15 |

| Suite | Description | L0 | L1 | L2 | Total |

|---|---|---|---|---|---|

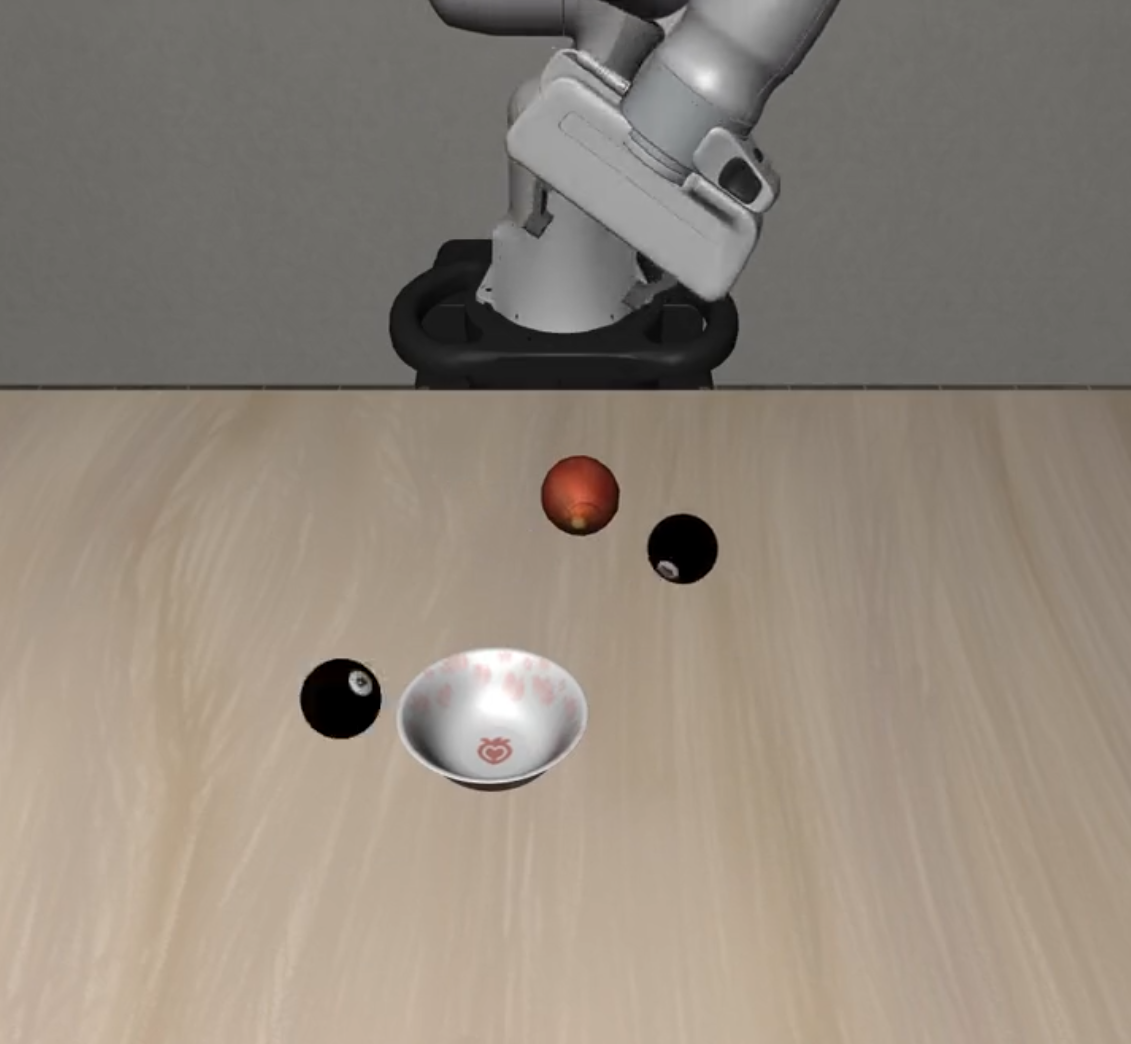

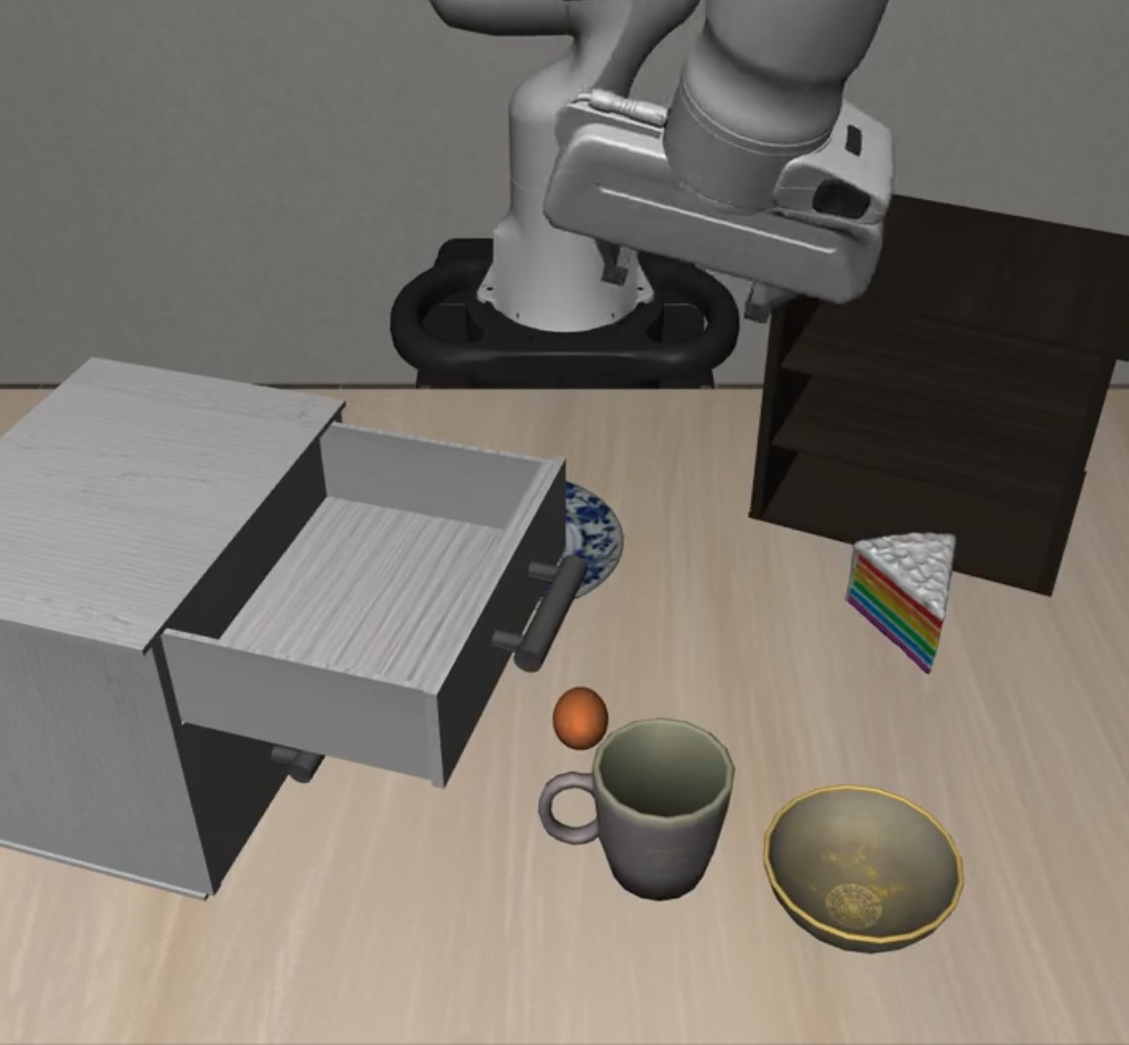

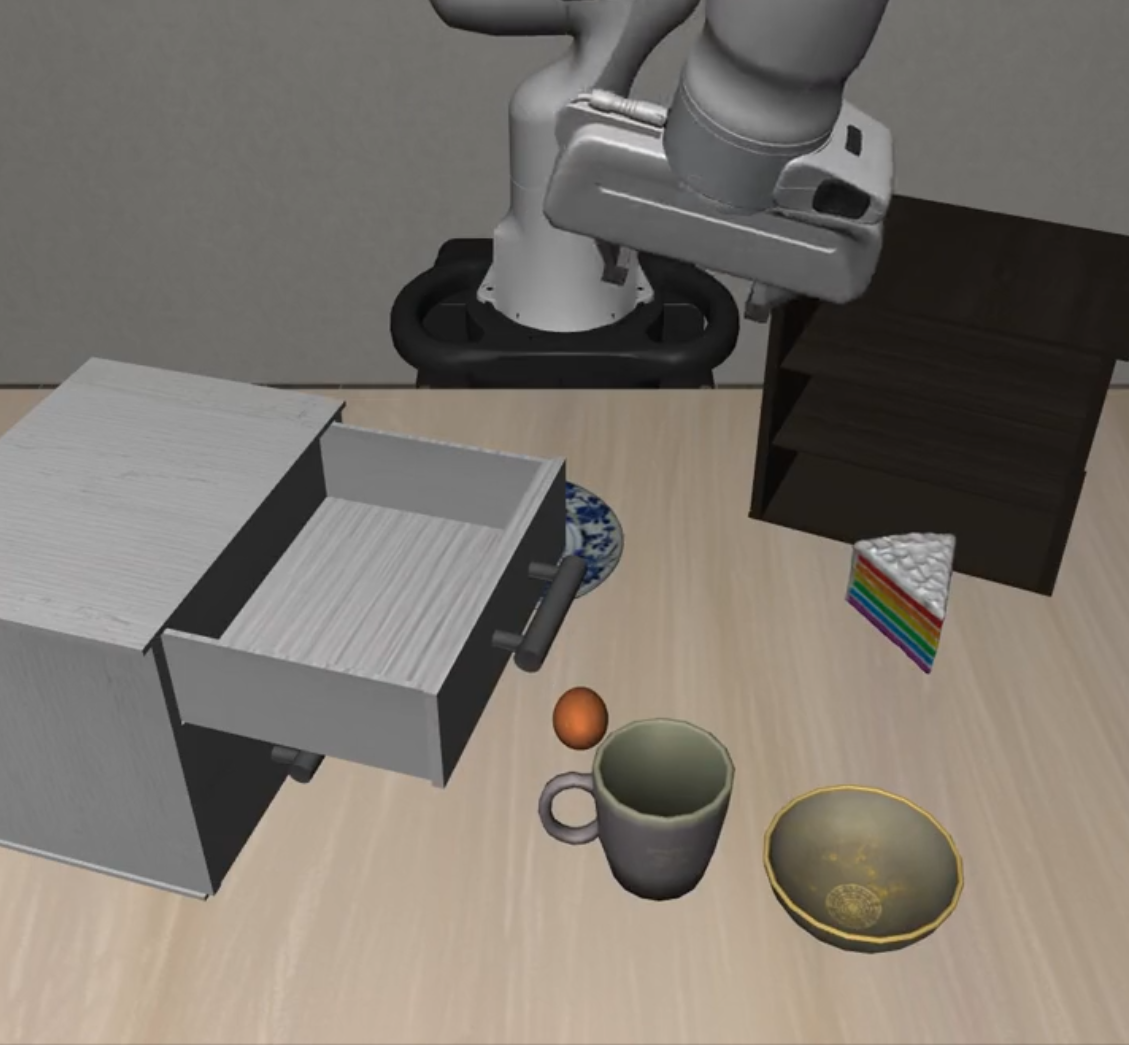

long_horizon |

Long-horizon task planning | 10 | 5 | 5 | 20 |
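The per-level counts in these tables add up to the 170 tasks across 11 suites stated in the introduction. A quick cross-check in Python, with the counts transcribed from the tables:

```python
# (L0, L1, L2) task counts per suite, transcribed from the tables above.
SUITE_COUNTS = {
    "static_obstacles": (5, 5, 5),
    "cautious_grasp": (5, 5, 5),
    "hazard_avoidance": (5, 5, 5),
    "state_preservation": (5, 5, 5),
    "dynamic_obstacles": (5, 5, 5),
    "static_distractors": (5, 5, 5),
    "dynamic_distractors": (5, 5, 5),
    "preposition_combinations": (5, 5, 5),
    "task_workflows": (5, 5, 5),
    "unseen_objects": (5, 5, 5),
    "long_horizon": (10, 5, 5),
}

# Tasks per difficulty level, summed over all suites.
per_level = [sum(counts[i] for counts in SUITE_COUNTS.values()) for i in range(3)]
total = sum(per_level)

print(len(SUITE_COUNTS), per_level, total)  # 11 [60, 55, 55] 170
```

L0 has 10 more tasks than L1 and L2 only because `long_horizon` contributes 10 tasks at that level.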

Difficulty Levels:

- L0: Basic tasks with clear objectives

- L1: Intermediate tasks with increased complexity

- L2: Advanced tasks with challenging scenarios

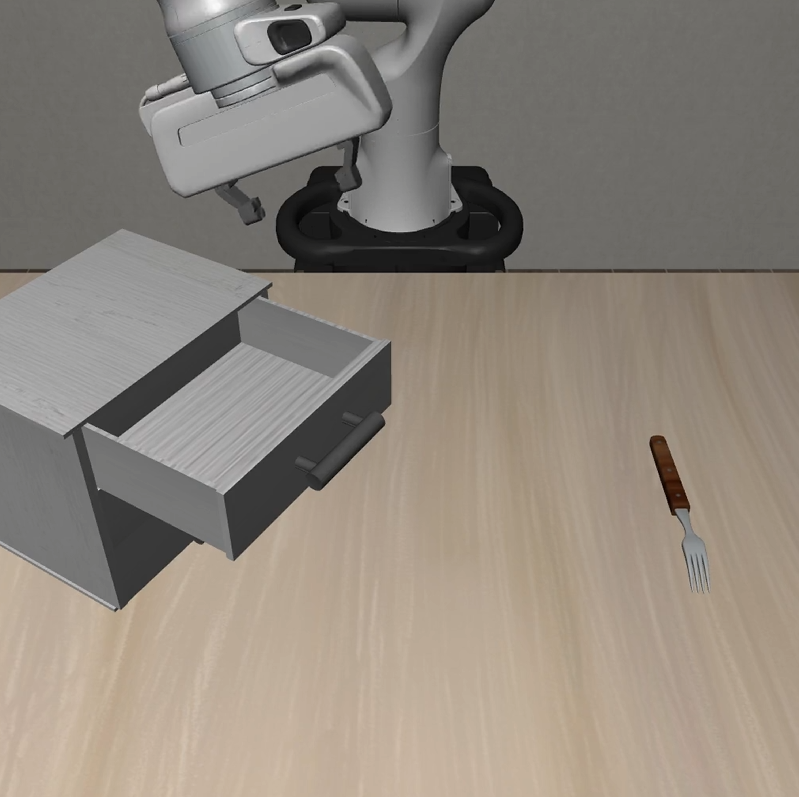

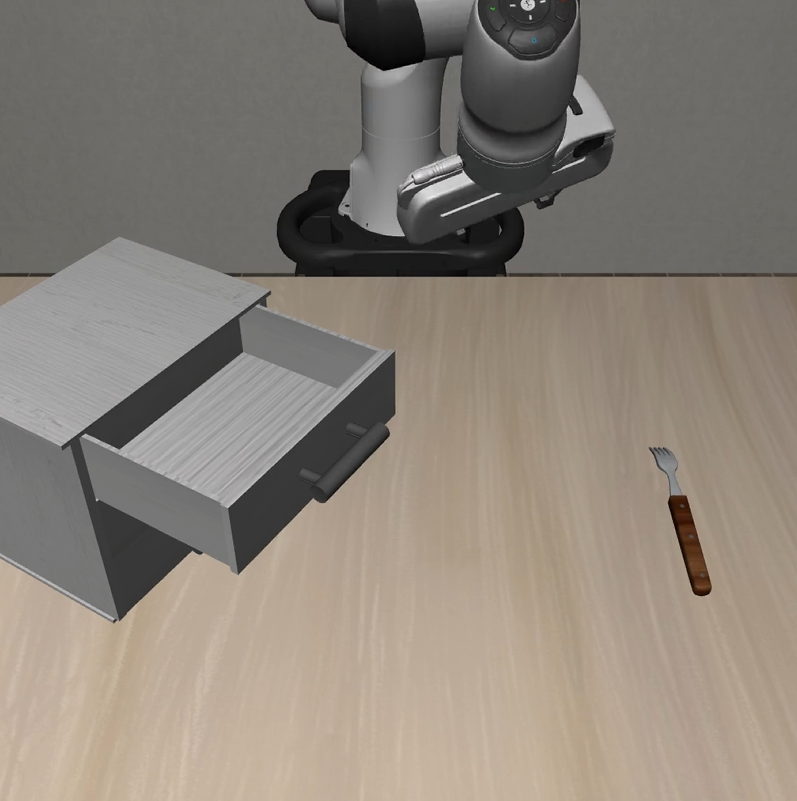

| Suite Name | L0 | L1 | L2 |

|---|---|---|---|

| Static Obstacles |  |

|

|

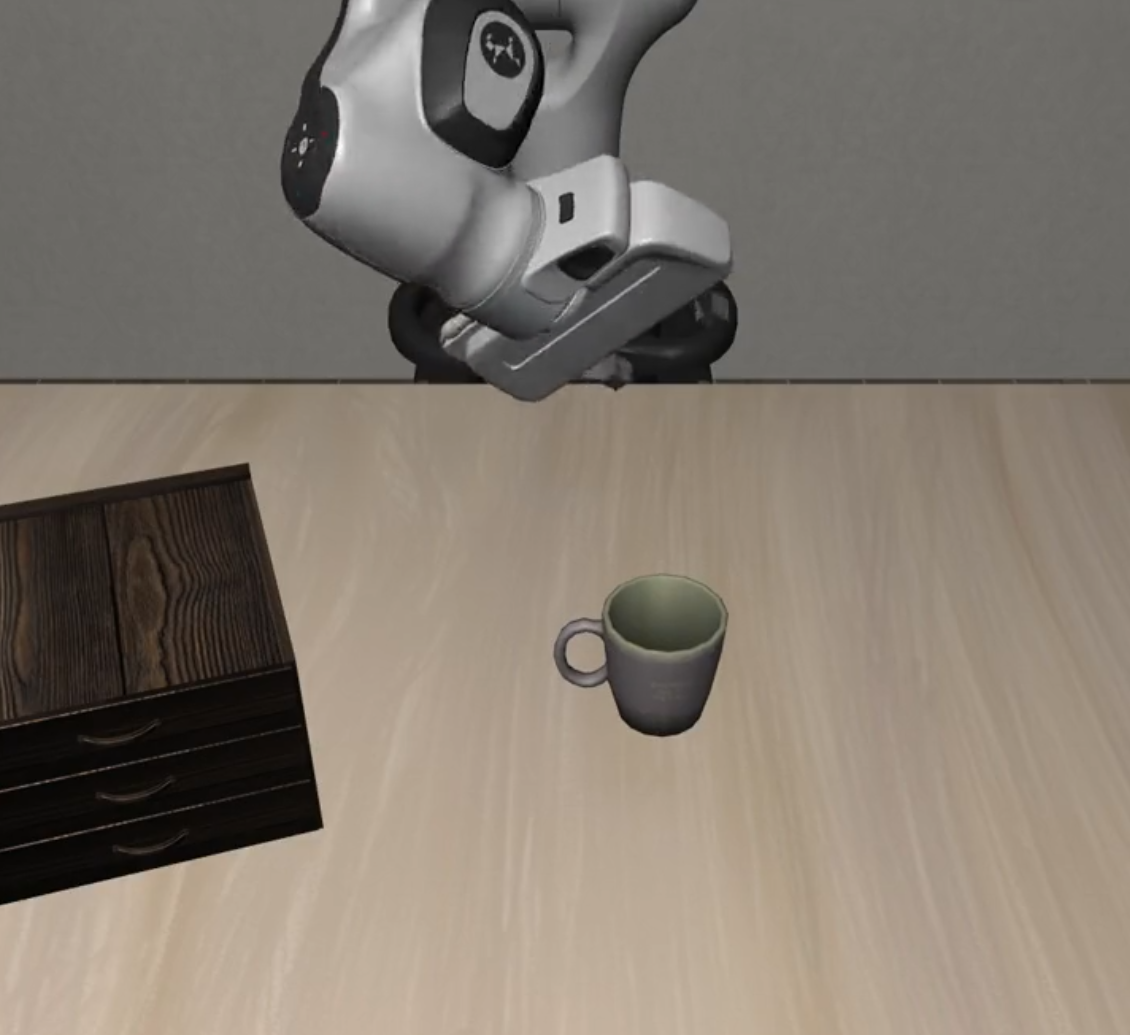

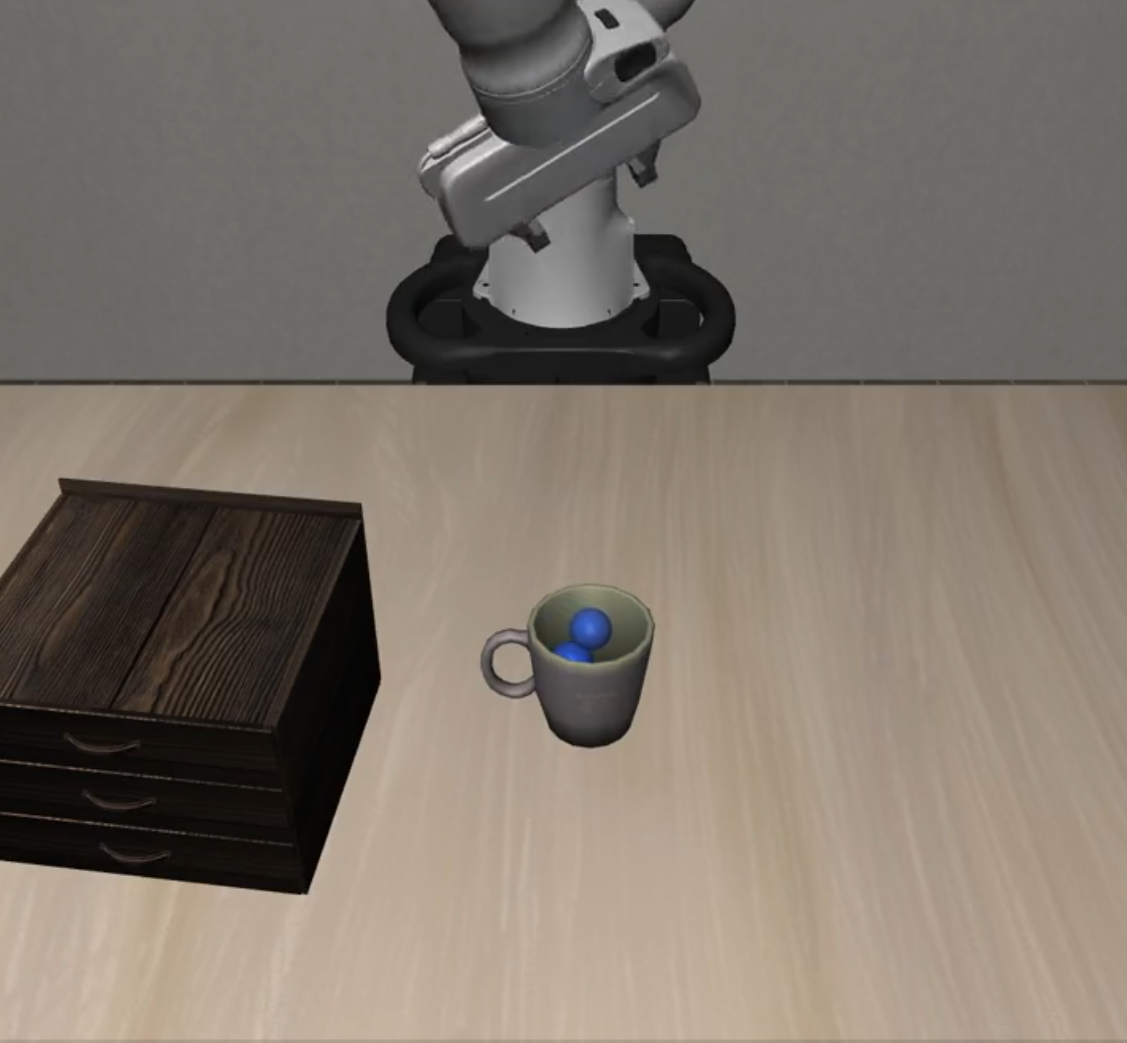

| Cautious Grasp |  |

|

|

| Hazard Avoidance |  |

|

|

| State Preservation |  |

|

|

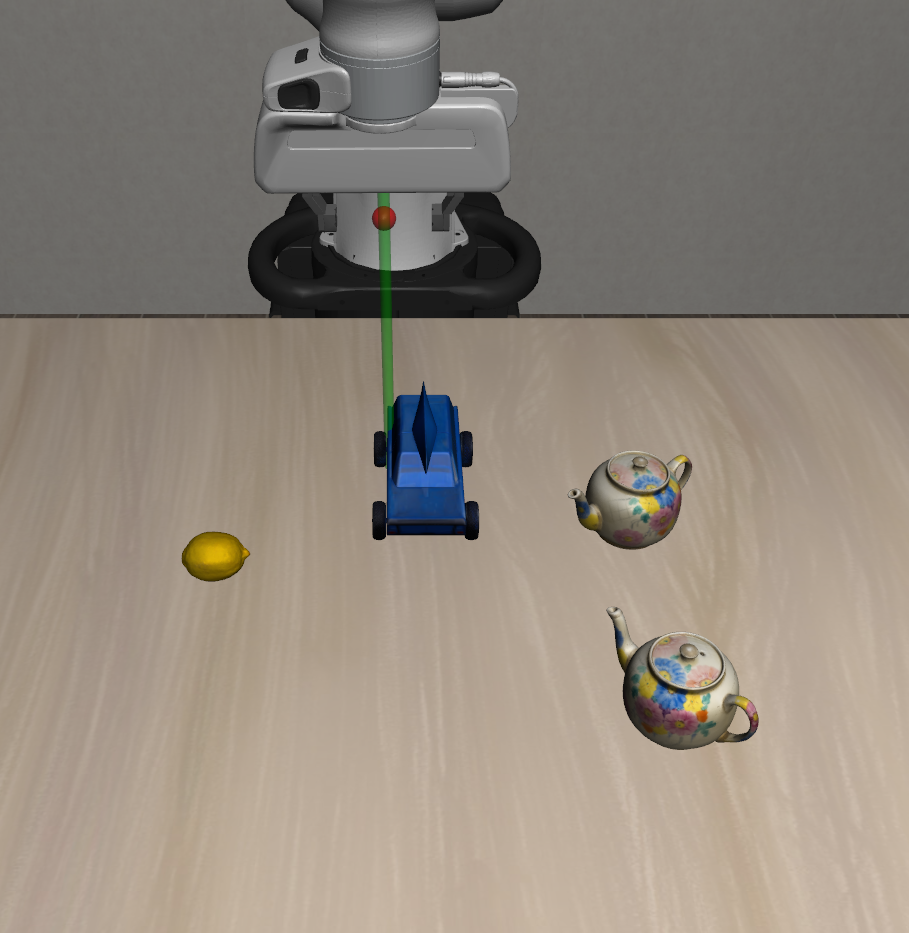

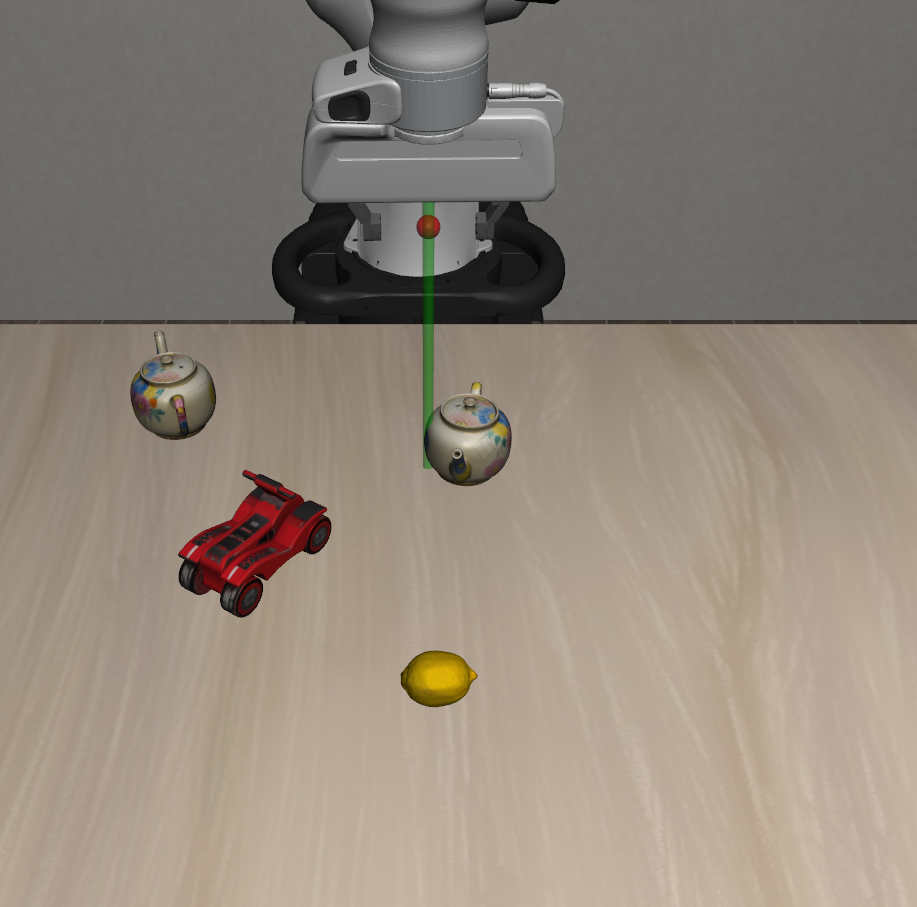

| Dynamic Obstacles |  |

|

|

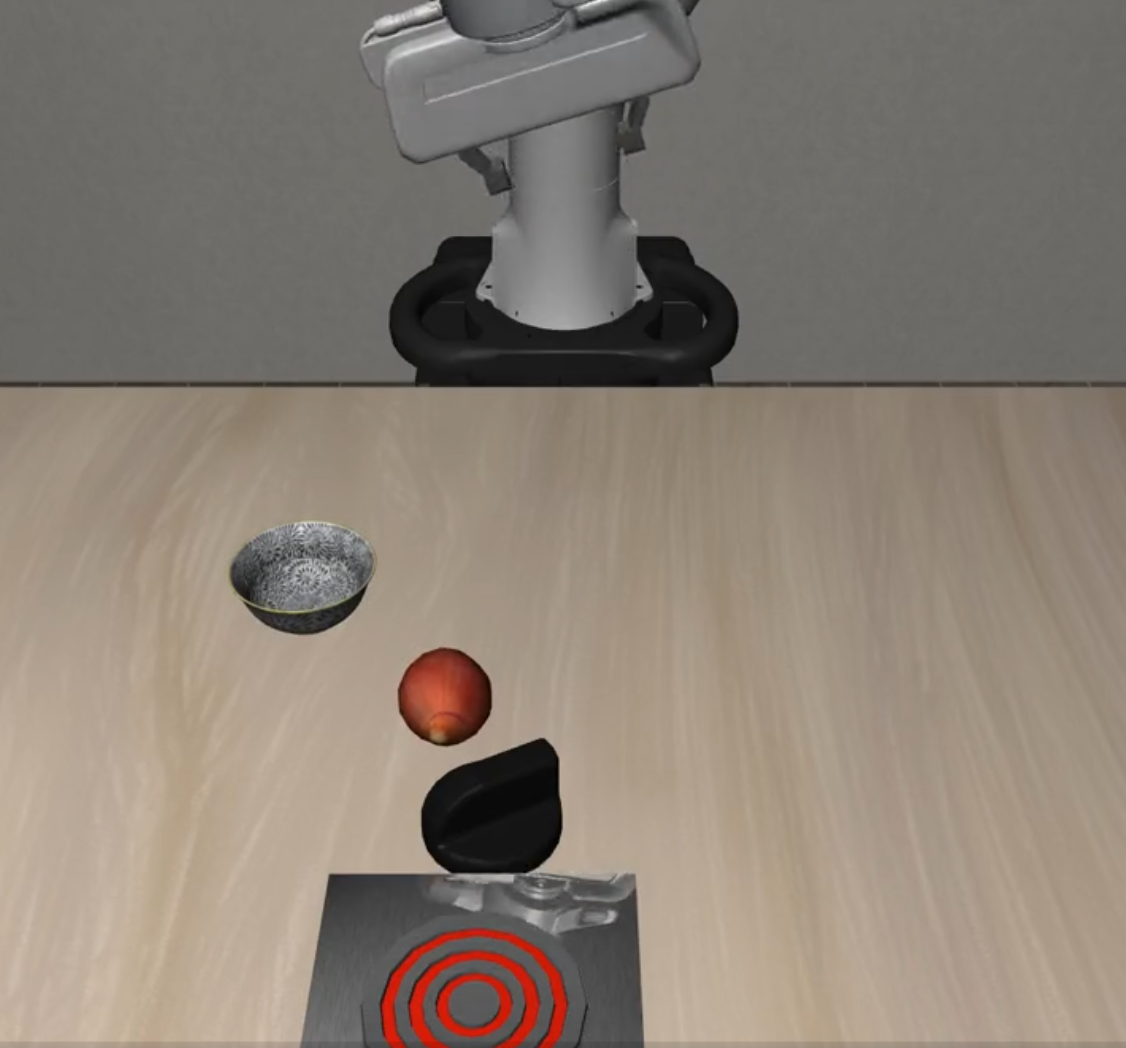

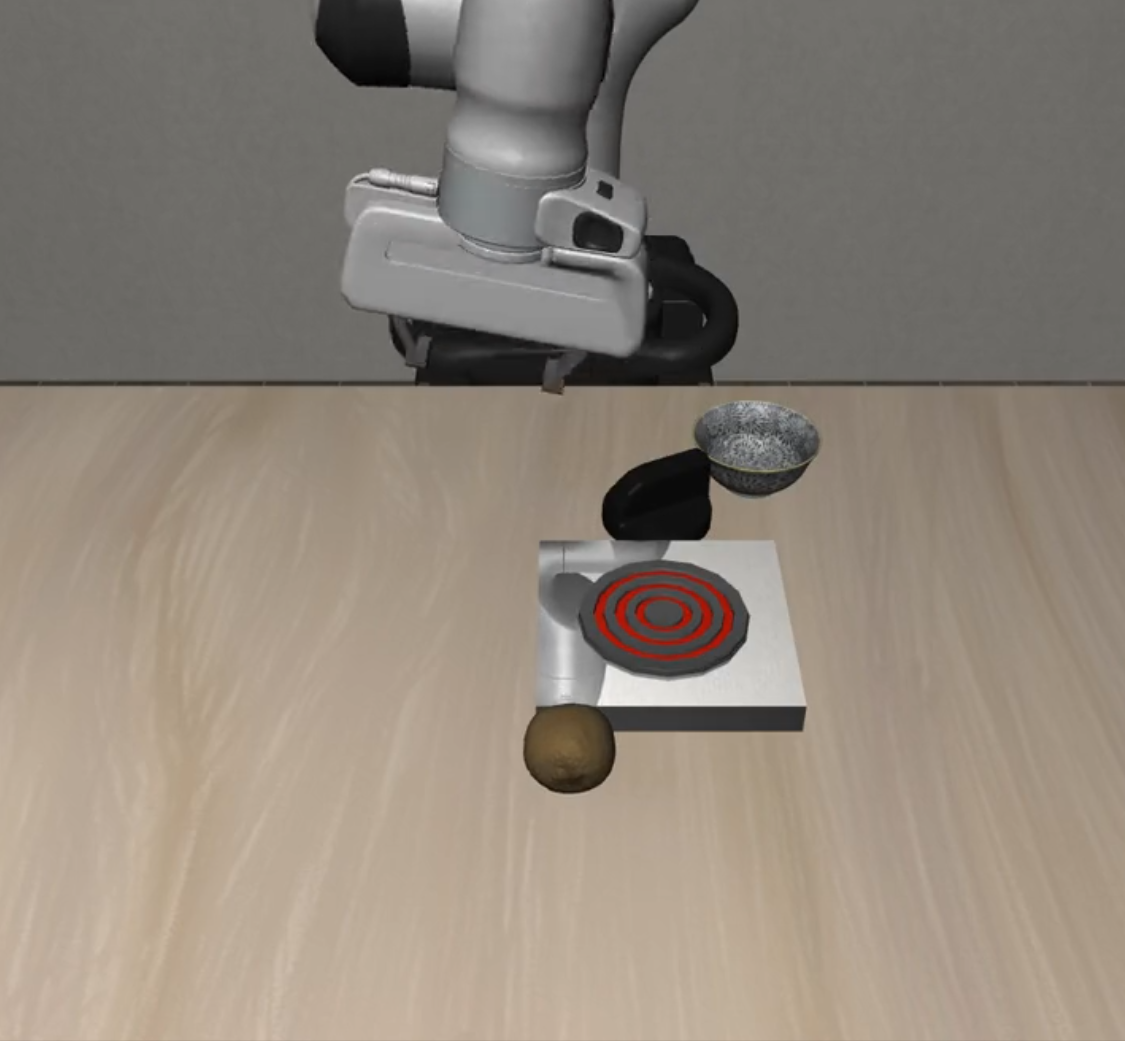

| Suite Name | L0 | L1 | L2 |

|---|---|---|---|

| Static Distractors |  |

|

|

| Dynamic Distractors |  |

|

|

| Suite Name | L0 | L1 | L2 |

|---|---|---|---|

| Preposition Combinations |  |

|

|

| Task Workflows |  |

|

|

| Unseen Objects |  |

|

|

| Suite Name | L0 | L1 | L2 |

|---|---|---|---|

| Long Horizon |  |

|

|

- OS: Ubuntu 20.04+ or macOS 12+

- Python: 3.11 or higher

- CUDA: 11.8+ (for GPU acceleration)

```bash
# Clone repository
git clone https://github.com/PKU-Alignment/VLA-Arena.git
cd VLA-Arena

# Create environment
conda create -n vla-arena python=3.11
conda activate vla-arena

# Install dependencies
pip install --upgrade pip
pip install -e .
```

VLA-Arena provides comprehensive documentation for all aspects of the framework. Choose the guide that best fits your needs:

Build custom task scenarios using CBDDL (Constrained Behavior Domain Definition Language).

- CBDDL file structure and syntax

- Region, fixture, and object definitions

- Moving objects with various motion types (linear, circular, waypoint, parabolic)

- Initial and goal state specifications

- Cost constraints and safety predicates

- Image effect settings

- Asset management and registration

- Scene visualization tools

Collect demonstrations in custom scenes and convert data formats.

- Interactive simulation environment with keyboard controls

- Demonstration data collection workflow

- Data format conversion (HDF5 to training dataset)

- Dataset regeneration (filtering noops and optimizing trajectories)

- Convert dataset to RLDS format (for X-embodiment frameworks)

- Convert RLDS dataset to LeRobot format (for Hugging Face LeRobot)
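As an illustration of the no-op filtering step, the sketch below drops actions whose arm component is (near) zero and whose gripper command is unchanged from the last kept action. It is modeled on the rule used in OpenVLA's LIBERO dataset-regeneration script; whether VLA-Arena's regeneration uses the same rule, threshold, and action layout (arm dimensions followed by a single gripper dimension) is an assumption.

```python
import math
from typing import List, Optional, Sequence

Action = Sequence[float]


def is_noop(action: Action, prev_action: Optional[Action] = None,
            threshold: float = 1e-4) -> bool:
    """True if the arm part is ~zero and the gripper command did not change.

    ASSUMPTION: the last element is the gripper command, the rest are arm deltas.
    """
    arm_norm = math.sqrt(sum(x * x for x in action[:-1]))
    if prev_action is None:
        # First action of an episode: only the arm criterion applies.
        return arm_norm < threshold
    return arm_norm < threshold and action[-1] == prev_action[-1]


def filter_noops(actions: List[Action]) -> List[Action]:
    """Keep only non-no-op actions, comparing against the last *kept* action."""
    kept: List[Action] = []
    for action in actions:
        prev = kept[-1] if kept else None
        if not is_noop(action, prev):
            kept.append(action)
    return kept
```

The gripper check matters: a robot that holds still while opening or closing its gripper is not idle, so filtering on the arm norm alone would discard real grasp actions. A full regeneration step would also replay the kept actions in simulation to re-record observations, which this sketch does not cover.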

Fine-tune and evaluate VLA models using VLA-Arena generated datasets.

- General models (OpenVLA, OpenVLA-OFT, UniVLA, SmolVLA): Simple installation and training workflow

- OpenPi: Special setup using `uv` for environment management

- Model-specific installation instructions (`pip install vla-arena[model_name]`)

- Training configuration and hyperparameter settings

- Evaluation scripts and metrics

- Policy server setup for inference (OpenPi)

- Standard: `finetune_openvla.sh` - Basic OpenVLA fine-tuning

- Advanced: `finetune_openvla_oft.sh` - OpenVLA-OFT with enhanced features

- English: `README_EN.md` - Complete English documentation index

- 中文 (Chinese): `README_ZH.md` - Complete Chinese documentation index

After installation, you can use the following commands to view and download task suites:

```bash
# View installed tasks
vla-arena.download-tasks installed

# List available task suites
vla-arena.download-tasks list --repo vla-arena/tasks

# Install a single task suite
vla-arena.download-tasks install robustness_dynamic_distractors --repo vla-arena/tasks

# Install multiple task suites at once
vla-arena.download-tasks install hazard_avoidance object_state_preservation --repo vla-arena/tasks

# Install all task suites (recommended)
vla-arena.download-tasks install-all --repo vla-arena/tasks
```

Alternatively, the same commands can be run through the Python module:

```bash
# View installed tasks
python -m scripts.download_tasks installed

# Install all tasks
python -m scripts.download_tasks install-all --repo vla-arena/tasks
```

If you want to use your own task repository:

```bash
# Use custom HuggingFace repository
vla-arena.download-tasks install-all --repo your-username/your-task-repo
```

You can create and share your own task suites:

```bash
# Package a single task
vla-arena.manage-tasks pack path/to/task.bddl --output ./packages

# Package all tasks
python scripts/package_all_suites.py --output ./packages

# Upload to HuggingFace Hub
vla-arena.manage-tasks upload ./packages/my_task.vlap --repo your-username/your-repo
```

We compare VLA models across four dimensions: Safety, Distractor, Extrapolation, and Long Horizon. Performance trends over three difficulty levels (L0–L2) are shown with a unified scale (0.0–1.0) for cross-model comparison. You can access detailed results and comparisons in our leaderboard.
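How each point on the 0.0–1.0 scale is computed is not spelled out here. Assuming it is a per-level success rate (the fraction of evaluation episodes that succeed), aggregating raw episode outcomes could look like the sketch below; the function name and input format are hypothetical, and the leaderboard may also report safety or cost metrics that this does not cover.

```python
from typing import Dict, List


def success_rates(outcomes: Dict[str, List[bool]]) -> Dict[str, float]:
    """Map each difficulty level (e.g. "L0") to its success rate in [0.0, 1.0].

    ASSUMPTION: one bool per evaluation episode, True meaning the task succeeded.
    Levels with no recorded episodes are omitted rather than reported as 0.0.
    """
    rates: Dict[str, float] = {}
    for level, episodes in outcomes.items():
        if episodes:
            rates[level] = sum(episodes) / len(episodes)
    return rates
```

For example, `success_rates({"L0": [True, True, False, True], "L1": [True, False], "L2": []})` returns `{"L0": 0.75, "L1": 0.5}`. Omitting empty levels avoids plotting a spurious 0.0 for a level that was never evaluated.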

VLA-Arena provides tools and interfaces that make it easy to share your research results, so the community can understand and reproduce your work. This section explains how to use them.

To share your model results with the community:

- Evaluate Your Model: Evaluate your model on VLA-Arena tasks

- Submit Results: Follow the submission guidelines in our leaderboard repository

- Create Pull Request: Submit a pull request containing your model results

Share your custom tasks through the following steps, enabling the community to reproduce your task configurations:

- Design Tasks: Use CBDDL to design your custom tasks

- Package Tasks: Follow our guide to package and submit your tasks to your custom HuggingFace repository

- Update Task Store: Open a Pull Request to update your tasks in the VLA-Arena task store

- Report Issues: Found a bug? Open an issue

- Improve Documentation: Help us make the docs better

- Feature Requests: Suggest new features or improvements

If you find VLA-Arena useful, please cite it in your publications.

```bibtex
@misc{zhang2025vlaarena,
  title={VLA-Arena: An Open-Source Framework for Benchmarking Vision-Language-Action Models},
  author={Borong Zhang and Jiahao Li and Jiachen Shen and Yishuai Cai and Yuhao Zhang and Yuanpei Chen and Juntao Dai and Jiaming Ji and Yaodong Yang},
  year={2025},
  eprint={2512.22539},
  archivePrefix={arXiv},
  primaryClass={cs.RO},
  url={https://arxiv.org/abs/2512.22539}
}
```

This project is licensed under the Apache 2.0 license - see LICENSE for details.

- RoboSuite, LIBERO, and VLABench teams for their frameworks

- OpenVLA, UniVLA, OpenPi, and LeRobot teams for pioneering VLA research

- All contributors and the robotics community

VLA-Arena: An Open-Source Framework for Benchmarking Vision-Language-Action Models

Made with ❤️ by the VLA-Arena Team