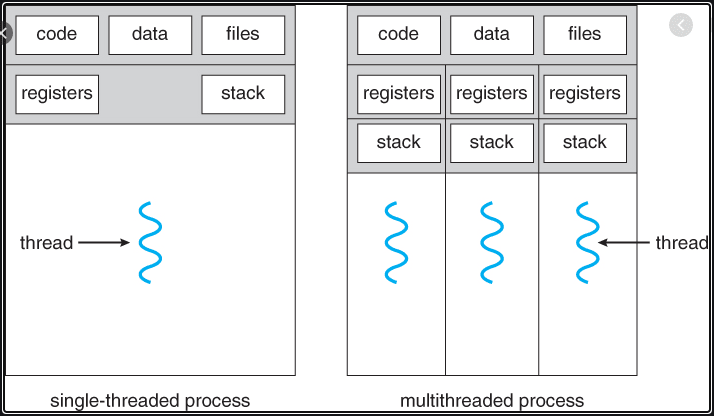

Put simply, multithreading is the ability of a single process to execute multiple threads concurrently.

From the diagram above, we can see that in a multithreaded process (right diagram), multiple threads share the same code, data, and files, but each thread has its own registers and stack. In the single-threaded process on the left, by contrast, one thread works on the code, data, and files with a single set of registers and a single stack.
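The sharing shown in the diagram can be illustrated with a minimal Python sketch (Python is an assumption here, and `worker`, `run_demo`, and `shared_results` are hypothetical names). Every thread appends to the same module-level list, which plays the role of the shared data, while each thread's running `total` is a local variable that exists separately in that thread's own call stack.

```python
import threading

# Shared by every thread in the process, like the "data" box in the diagram.
shared_results: list[str] = []
results_lock = threading.Lock()  # serializes appends to the shared list


def worker(name: str, n: int) -> None:
    # `total` is a local: each thread's call gets its own copy on that thread's stack.
    total = 0
    for i in range(n):
        total += i
    with results_lock:
        shared_results.append(f"{name}: {total}")


def run_demo() -> list[str]:
    """Start three threads that write into the same list, then wait for them."""
    shared_results.clear()
    threads = [
        threading.Thread(target=worker, args=(f"t{k}", 10 * (k + 1)))
        for k in range(3)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sorted(shared_results)


if __name__ == "__main__":
    print(run_demo())  # prints ['t0: 45', 't1: 190', 't2: 435']
```

The lock is needed precisely because the list is shared; the per-thread `total` values never collide, so they need no protection.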

Multiprocessing refers to the ability of a system to use multiple processors at the same time. Each processor can run one or more threads.
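For contrast with threads, here is a minimal Python sketch using the standard `multiprocessing` module (`counter` and `bump` are hypothetical names). Each pool worker is a separate process, which the operating system can schedule on a different processor, and each process has its own copy of the global `counter`, so increments made in a worker never reach the parent.

```python
import multiprocessing as mp
import os

counter = 0  # module-level global; every process holds its own independent copy


def bump(_: int) -> tuple[int, int]:
    """Increment this process's copy of `counter`; report (pid, new value)."""
    global counter
    counter += 1
    return os.getpid(), counter


if __name__ == "__main__":
    # The guard is required on platforms that start workers by re-importing this module.
    with mp.Pool(processes=2) as pool:
        results = pool.map(bump, range(4))
    print("worker results (pid, counter):", results)
    print("counter in parent:", counter)  # still 0: workers changed only their own copies
```

Sharing state between processes therefore needs explicit mechanisms (pipes, queues, shared memory), unlike the threads in the previous sketch.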

- Multithreading is useful when a thread is waiting for a response from another computer or piece of hardware. While one thread is blocked waiting for that response, other threads can make use of the otherwise idle CPU.

- Running time-consuming tasks on a parallel “worker” thread leaves the main UI thread free to continue processing keyboard and mouse events.

- Code that performs intensive calculations can execute faster on multicore or multiprocessor computers if the workload is shared among multiple threads in a “divide-and-conquer” strategy.

- Multithreading is useful for I/O-bound operations such as reading and writing files or database records, because each thread can wait on its own I/O operation while the others keep working. Multiprocessing, by contrast, pays off on systems with more than one processor: CPU-bound work needs multiple cores to run in parallel, and the speed of each CPU directly affects how much work can be processed.

- Multiprocessing allows the same job to be done in a shorter time.

- Multiprocessing means more processing capacity because more processors are available.
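The I/O-bound versus CPU-bound distinction in the list above can be sketched in Python with the standard `concurrent.futures` module. This is an illustration under stated assumptions, not a benchmark: `fake_io_request` is a hypothetical stand-in that simulates a blocking call with `time.sleep`, and the sum of squares is a toy CPU-bound workload. Note that in standard CPython the global interpreter lock (GIL) lets only one thread execute Python bytecode at a time, so the divide-and-conquer calculation uses processes rather than threads.

```python
import time
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor


def fake_io_request(delay: float) -> float:
    """Hypothetical stand-in for a blocking network, disk, or database call."""
    time.sleep(delay)  # the thread blocks here; CPython releases the GIL while sleeping
    return delay


def run_io_bound(n_tasks: int = 5, delay: float = 0.2) -> float:
    """Run n_tasks blocking calls on a thread pool; return elapsed wall-clock seconds."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=n_tasks) as pool:
        list(pool.map(fake_io_request, [delay] * n_tasks))
    return time.perf_counter() - start


def sum_of_squares(bounds: tuple[int, int]) -> int:
    """CPU-bound work: sum i*i for i in [lo, hi)."""
    lo, hi = bounds
    return sum(i * i for i in range(lo, hi))


def split(n: int, parts: int) -> list[tuple[int, int]]:
    """Divide [0, n) into at most `parts` contiguous chunks."""
    step = max(1, -(-n // parts))  # ceiling division, never zero
    return [(i, min(i + step, n)) for i in range(0, n, step)]


def run_cpu_bound(n: int = 2_000_000, parts: int = 4) -> int:
    """Divide and conquer: each chunk is summed in a separate worker process."""
    with ProcessPoolExecutor(max_workers=parts) as pool:
        return sum(pool.map(sum_of_squares, split(n, parts)))


if __name__ == "__main__":
    # The five 0.2 s waits overlap, so this takes roughly 0.2 s rather than 1 s.
    print(f"I/O-bound on threads: {run_io_bound():.2f} s")
    total = run_cpu_bound()
    assert total == sum_of_squares((0, 2_000_000))  # same answer as the serial version
    print(f"CPU-bound on processes: {total}")
```

Whether the process version actually finishes sooner depends on the number of cores and on the cost of starting workers; for small inputs the overhead can outweigh the gain.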

Threading has costs of its own: scheduling and switching between threads consumes resources and CPU time (when there are more active threads than CPU cores), and creating and tearing down threads also has a cost. Multithreading does not always speed up your application; it can even slow it down when used excessively or inappropriately. For example, with heavy disk I/O, having several worker threads run tasks in sequence can be faster than having 10 threads executing at once. (In Wait and Pulse Signaling, we describe how to implement a producer/consumer queue that provides just this functionality.)
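The referenced section builds its producer/consumer queue with Wait and Pulse signaling. As a rough Python analogue, not that implementation, the sketch below uses the standard library's thread-safe `queue.Queue` with a small, fixed number of consumer threads. `run_with_worker_pool` is a hypothetical name, and squaring a number is a placeholder for real work such as a disk write.

```python
import queue
import threading


def run_with_worker_pool(tasks, worker_count=2):
    """Process `tasks` with a fixed number of consumer threads.

    Returns (sorted results, number of distinct threads that did work).
    """
    work = queue.Queue()  # thread-safe FIFO shared by the producer and consumers
    results = []
    used_threads = set()
    lock = threading.Lock()

    def consumer() -> None:
        while True:
            item = work.get()  # blocks until an item is available
            if item is None:   # sentinel: the producer has no more work
                return
            value = item * item  # placeholder for real work, e.g. a disk write
            with lock:
                results.append(value)
                used_threads.add(threading.get_ident())

    workers = [threading.Thread(target=consumer) for _ in range(worker_count)]
    for w in workers:
        w.start()
    for task in tasks:   # producer: enqueue every task
        work.put(task)
    for _ in workers:    # one sentinel per consumer so each one exits
        work.put(None)
    for w in workers:
        w.join()
    return sorted(results), len(used_threads)


if __name__ == "__main__":
    print(run_with_worker_pool(range(10), worker_count=2))
```

However many tasks are queued, at most `worker_count` threads ever touch the disk at once, which is the behavior the paragraph above argues can beat launching one thread per task.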

When a large volume of data is being processed and the operations cannot be completed within each iteration, the unfinished work is carried over to the next iteration. This situation is called a bottleneck.
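The carry-over described above can be made concrete with a tiny simulation. This is a hypothetical model, not taken from the original: a fixed amount of work arrives each iteration, the system can finish at most `capacity` units per iteration, and whatever is left is added to the next iteration's load.

```python
def simulate_backlog(arrivals: list[int], capacity: int) -> list[int]:
    """Return the unfinished work left at the end of each iteration.

    arrivals: hypothetical units of work arriving in each iteration.
    capacity: hypothetical units of work the system can finish per iteration.
    """
    backlog = 0
    history = []
    for incoming in arrivals:
        pending = backlog + incoming   # carried-over work plus new work
        done = min(pending, capacity)  # cannot finish more than capacity allows
        backlog = pending - done       # whatever is left moves to the next iteration
        history.append(backlog)
    return history


if __name__ == "__main__":
    print(simulate_backlog([5, 5, 5, 5], capacity=4))  # prints [1, 2, 3, 4]
    print(simulate_backlog([3, 3, 3], capacity=4))     # prints [0, 0, 0]
```

When arrivals exceed capacity by even one unit per iteration, the backlog grows without bound; adding capacity at the bottleneck stage (more threads for I/O waits, more processes or cores for computation) is what stops that growth.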