This project applies computational intelligence techniques to computer vision in three phases: image classification, medical image analysis, and image captioning.

In the first phase, a deep neural network (DNN) is implemented to classify images from the CIFAR-10 dataset. The dataset consists of 60,000 32x32 color images across 10 categories; the low resolution and visual complexity of the images make the task challenging.

- Architecture: Custom, modular implementation of ResNet written from scratch.

- Optimization: Tuned the number of stages and the number of blocks in each stage to improve accuracy.

- Results: Achieved 94.29% accuracy on the test set.
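
The stage and block tuning described above can be sketched as a small configuration helper. This is only an illustration, not the project's actual code: the names `StageSpec`, `build_stages`, and `summarize` are hypothetical, and the layout (16 base channels, channels doubling and resolution halving at each new stage, two convolutions per block) is an assumption borrowed from the common CIFAR-style ResNet design.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class StageSpec:
    """One ResNet stage: a run of residual blocks at a fixed width."""
    blocks: int    # number of residual blocks in the stage
    channels: int  # output channels of every block in the stage
    stride: int    # stride of the first block (2 halves the resolution)


def build_stages(blocks_per_stage: List[int], base_channels: int = 16) -> List[StageSpec]:
    """Turn a list such as [3, 3, 3] into stage specs.

    Assumption: channels double at each stage, and every stage after the
    first downsamples by 2.
    """
    stages = []
    for index, blocks in enumerate(blocks_per_stage):
        if blocks < 1:
            raise ValueError("each stage needs at least one block")
        stages.append(
            StageSpec(
                blocks=blocks,
                channels=base_channels * 2 ** index,
                stride=1 if index == 0 else 2,
            )
        )
    return stages


def summarize(stages: List[StageSpec], input_size: int = 32,
              convs_per_block: int = 2) -> Tuple[int, int]:
    """Return (weighted depth, final feature-map size).

    Depth counts the stem convolution, the convolutions inside the blocks,
    and the final classifier layer; shortcut projections are not counted.
    """
    depth = 1  # stem convolution
    size = input_size
    for stage in stages:
        depth += stage.blocks * convs_per_block
        size = -(-size // stage.stride)  # ceiling division
    depth += 1  # final fully connected classifier
    return depth, size
```

With these assumptions, `build_stages([3, 3, 3])` describes a 20-layer network that ends on 8x8 feature maps, and changing the list is all it takes to try a deeper or wider-staged variant.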

The second phase uses convolutional neural networks (CNNs) to analyze breast histopathology images for cancer detection. The goal is to evaluate how well these models classify the images and to address challenges in the data, such as class imbalance.

- Data Analysis: Performed exploratory data analysis (EDA) to understand the data distribution and identify challenges.

- Architectures: Experimented with various ResNet architectures and implemented strong data augmentation to combat overfitting and address class imbalance.

- Results: Achieved an F1 score of 90.02% on the test set.
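
Because the data is imbalanced, F1 is a more informative metric here than plain accuracy. The sketch below shows how a binary F1 score can be computed from predictions. It is a minimal illustration: the function names are hypothetical, and it assumes the binary F1 of the positive (cancer) class, since the text above does not say which averaging was used.

```python
from typing import Sequence, Tuple


def binary_f1(y_true: Sequence[int], y_pred: Sequence[int],
              positive: int = 1) -> Tuple[float, float, float]:
    """Return (precision, recall, F1) for the chosen positive class."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    # Guard against empty denominators (no positive predictions or labels).
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    return precision, recall, 2 * precision * recall / (precision + recall)


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of predictions that match the labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
```

For example, a model that predicts "negative" for every image in a set with 2 positives and 8 negatives reaches 80% accuracy but an F1 of 0, which is why F1 is the better signal on skewed data.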

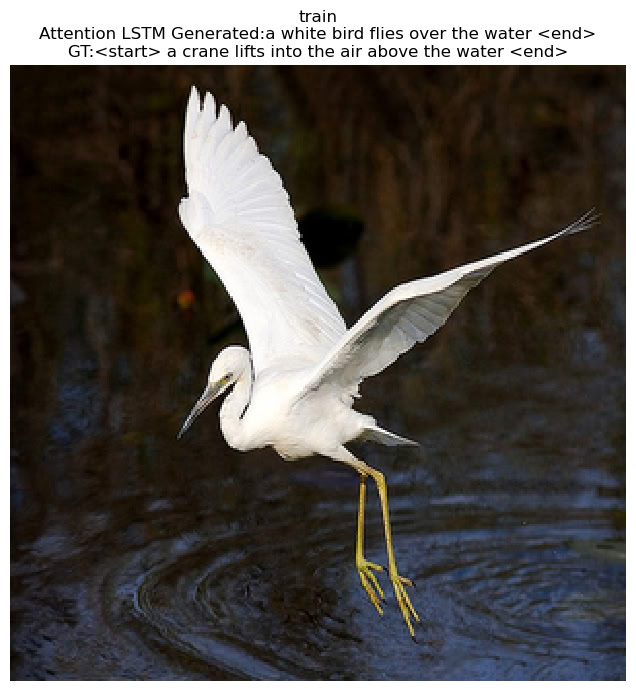

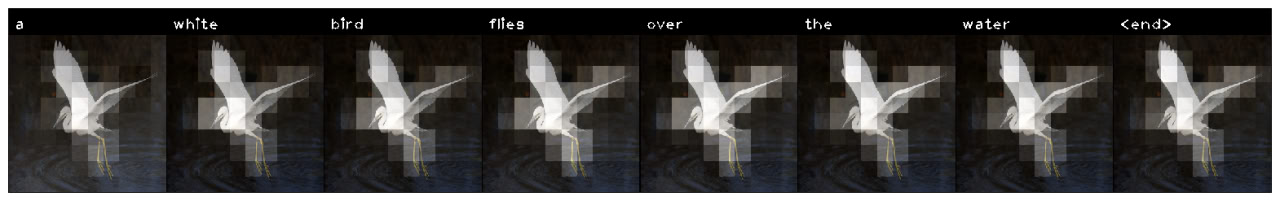

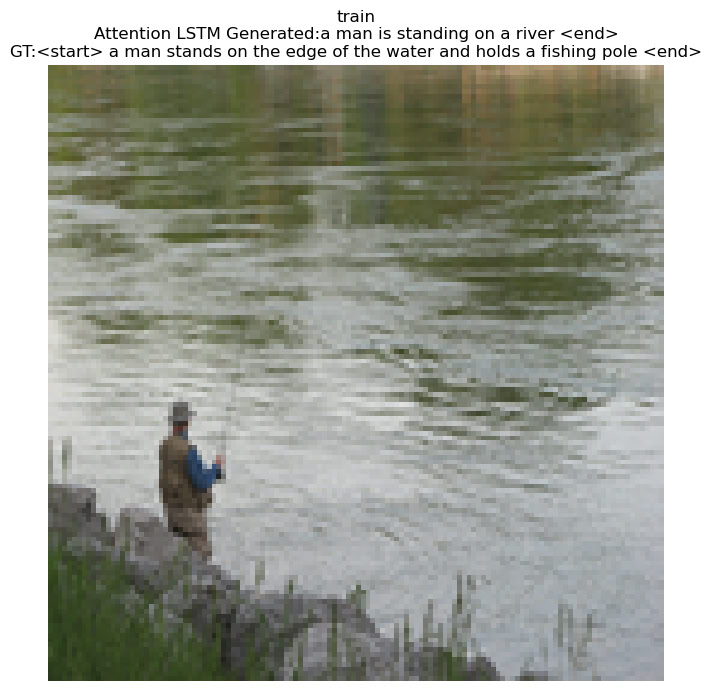

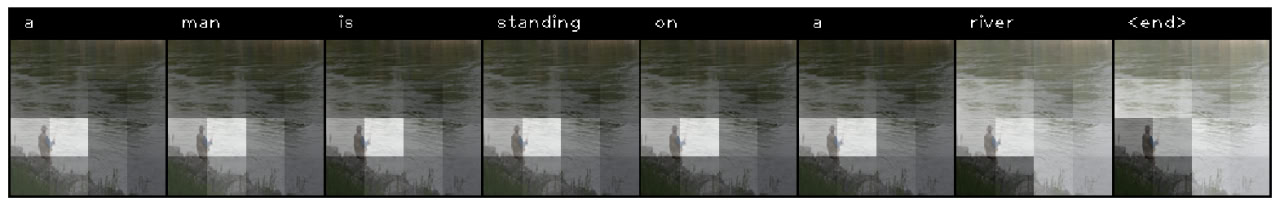

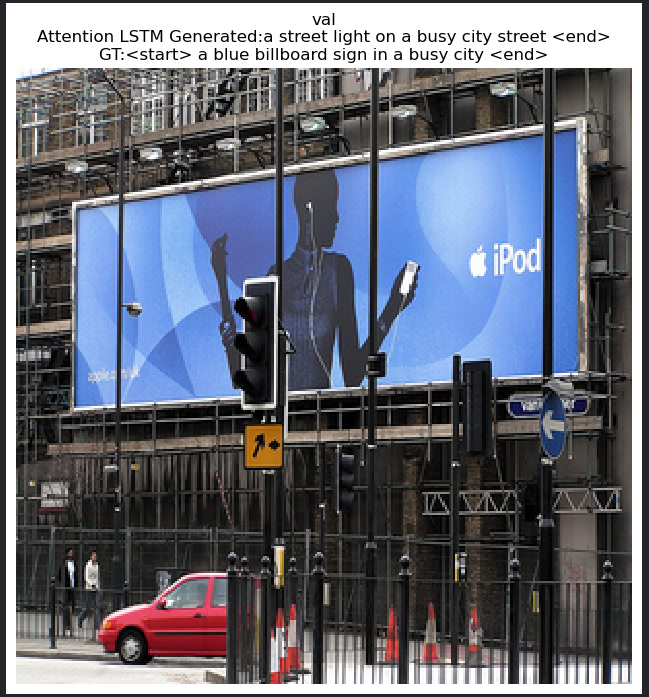

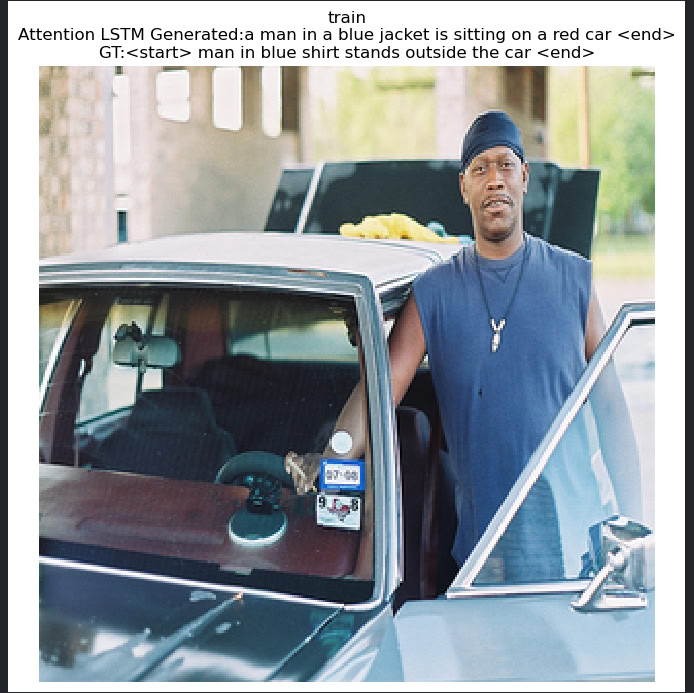

In the final phase, the task is to generate descriptive captions for images using a combination of CNNs for feature extraction and RNNs for text generation.

- Architectures Explored: RNN, LSTM, Attention LSTM, self-attention mechanisms, and multi-head self-attention.

- Feature Extraction: Utilized CNN models such as MobileNet, ResNet50, and EfficientNetB0.

- Embeddings: Implemented BERT and GloVe embeddings.

- Reward Strategies: Used BLEU scores for reward-based training.

- Datasets: Trained models on Flickr8k, Flickr30k, and 40k images from the MSCOCO dataset.

- Implementation: All modules, including attention mechanisms and LSTM models, were implemented from scratch.

- Results Tracking: The trial-and-error process behind these experiments is documented in the commit history.
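
To make the BLEU-based reward concrete, here is a simplified sentence-level BLEU in plain Python. It is a sketch under stated assumptions, not the project's implementation: it uses a single reference caption, n-grams up to `max_n=2`, and no smoothing. The `advantage` helper, which subtracts the score of a baseline caption from the score of a sampled caption, is one common way to turn BLEU into a training signal and is an assumption here, as are all function names.

```python
import math
from collections import Counter
from typing import List


def ngram_counts(tokens: List[str], n: int) -> Counter:
    """Count the n-grams of a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu(candidate: List[str], reference: List[str], max_n: int = 2) -> float:
    """Simplified BLEU: geometric mean of clipped n-gram precisions
    multiplied by the brevity penalty. Returns 0.0 if any precision is 0."""
    if not candidate:
        return 0.0
    log_precision = 0.0
    for n in range(1, max_n + 1):
        cand = ngram_counts(candidate, n)
        ref = ngram_counts(reference, n)
        total = sum(cand.values())
        # Clip each candidate n-gram count by its count in the reference.
        clipped = sum(min(count, ref[gram]) for gram, count in cand.items())
        if total == 0 or clipped == 0:
            return 0.0
        log_precision += math.log(clipped / total) / max_n
    # Penalize candidates that are shorter than the reference.
    if len(candidate) >= len(reference):
        brevity = 1.0
    else:
        brevity = math.exp(1 - len(reference) / len(candidate))
    return brevity * math.exp(log_precision)


def advantage(sampled: List[str], baseline: List[str], reference: List[str]) -> float:
    """Reward of a sampled caption relative to a baseline caption."""
    return bleu(sampled, reference) - bleu(baseline, reference)
```

A positive `advantage` means the sampled caption scored higher than the baseline, so a policy-gradient update would raise its probability; a negative value would lower it.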