-

Notifications

You must be signed in to change notification settings - Fork 2.2k

perf(trie): compute and sort trie inputs async #19894

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

base: main

Are you sure you want to change the base?

Conversation

…fy immediate availability of computed trie data

…d trie data handling

…e DeferredTrieData

add reth-trie-common dependency and update usages in flashblocks and rpc-eth-api

mattsse

left a comment

mattsse

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

seconding all of @mediocregopher comments

but functionally this approach lgtm

…Mutex and Condvar, replacing with OnceLock for improved concurrency

… and update tests for equality checks

…hance error handling

…ing in trie input computation

…xecutedBlock, simplify trie data access documentation

… and trie_input, add new constructors and methods for better handling

| // providers, proofs) block on the handle only when they actually need the sorted | ||

| // trie data. | ||

| let task = move || { | ||

| let result = panic::catch_unwind(AssertUnwindSafe(|| { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

having a panic here - the concern is that if something crashes the process, any panic within the blocking tasks leaves the underlying data unset which would cause the consumers of trie data to block forever.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

also side note - allows us to see what fails in e2e

…eInput struct for improved data handling

mediocregopher

left a comment

mediocregopher

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

mostly nits, looking good!

…plementation and updating ComputedTrieData usage

mediocregopher

left a comment

mediocregopher

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

One final nit, LGTM 🚀

…eliminate TrieInputSorted usage

|

@dhyaniarun1993 @itschaindev @sadiq1971 @meyer9 for visibility |

| let data = OnceLock::new(); | ||

| data.set(bundle).unwrap(); // Safe: newly created OnceLock | ||

| Self(Arc::new(data)) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I think this is just Self(Arc::new(bundle.into()))

| /// Multiple threads can wait concurrently. All waiters wake when the value is set, | ||

| /// and each receives a cloned `ComputedTrieData` (Arc clones are cheap). | ||

| pub fn wait_cloned(&self) -> ComputedTrieData { | ||

| self.0.wait().clone() | ||

| } |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

hmm, this assumes that there's always something that's already computing the triedata,

imo this could be a potential footgun in case this isnt the case?

| /// This blocks the calling thread until the background trie computation completes. | ||

| #[inline] | ||

| pub fn trie_data(&self) -> ComputedTrieData { | ||

| self.trie_data.wait_cloned() | ||

| } |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

this assumes that there is something that is doing this, otherwise this will deadlock, right?

| // Capture parent hash and ancestor overlays for deferred trie input construction. | ||

| let (parent_hash, overlay_blocks) = ctx | ||

| .state() | ||

| .tree_state | ||

| .blocks_by_hash(block.parent_hash()) | ||

| .unwrap_or_else(|| (block.parent_hash(), Vec::new())); | ||

|

|

||

| // Create a deferred handle to store the sorted trie data. | ||

| let deferred_trie_data = DeferredTrieData::pending(); | ||

| let deferred_handle_task = deferred_trie_data.clone(); | ||

| let deferred_compute_duration = | ||

| self.metrics.block_validation.deferred_trie_compute_duration.clone(); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

ca we make all of this a dedicated function, this existing fn is already quite large

| /// Multiple threads can wait concurrently. All waiters wake when the value is set, | ||

| /// and each receives a cloned `ComputedTrieData` (Arc clones are cheap). | ||

| pub fn wait_cloned(&self) -> ComputedTrieData { | ||

| self.0.wait().clone() |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

we don't have access to the internal oncestate and .get() does not block any ongoing initialization.

we could solve this with our own state tracking but this feels a bit redundant.

imo we can take the Hit here and always do get_or_init

imo that'd be better than relying on something else to init it

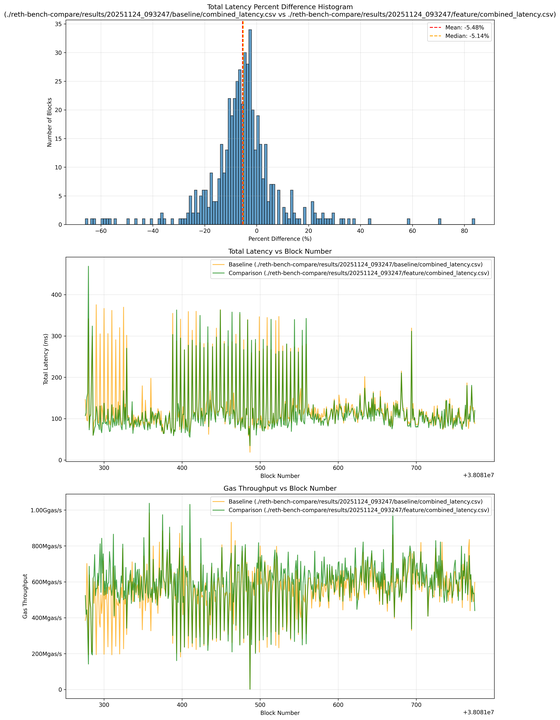

Addresses #19250 by moving both trie sorting and trie input construction out of the hot path; validation can return once the state root is checked.

Changes:

validate_block_with_statenow returns anExecutedBlockwith a pendingDeferredTrieData, and offloads the sorting and trie input building tospawn_blocking.Approach

Arc<OnceLock<ComputedTrieData>>to share the single result with multiple readers.OnceLock::waitblocks synchronously, needs no Tokio runtime, and allows cheap cloning of the handle.Flow now:

Impact

Other Approaches Considered?

Why not oneshot?

Why not Mutex + Condvar?

Mutex+Condvarrequires some scaffolding code to handle errors and conditions. With Onelock, we could simplify the handling with blocking wait.