📄 IEEE Access 2023 — DOI: 10.1109/ACCESS.2023.3339793

This repository introduces the core ideas and implementation of Task-Aware Dynamic Model Optimization (DMO), a memory-efficient multi-task learning (MTL) framework.

Multi-task learning (MTL) often suffers from task interference and inefficient resource usage.

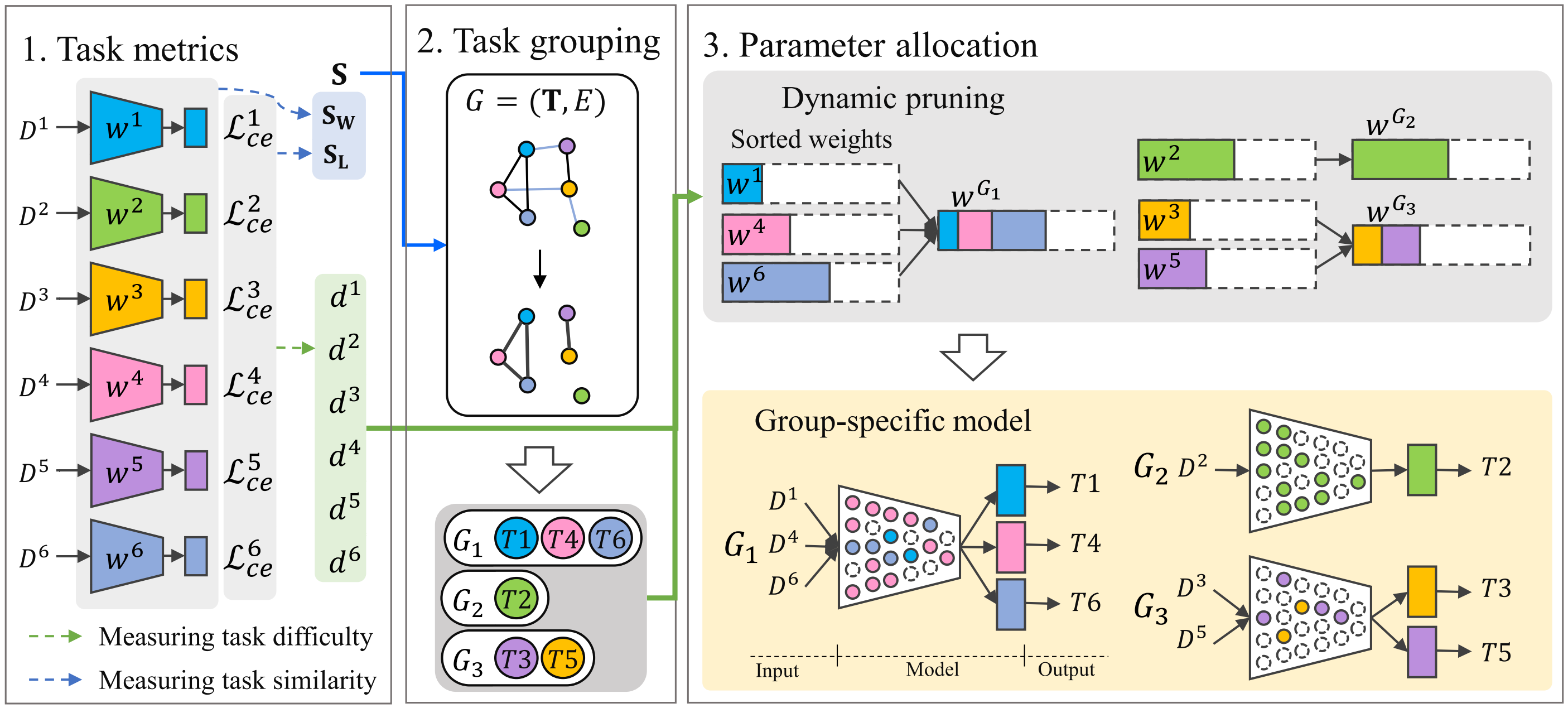

DMO (Dynamic Model Optimization) addresses these issues by:

- Grouping tasks based on inter-task similarity (weight & loss correlations)

- Dynamically allocating parameters based on task difficulty

- Producing lightweight subnetworks, reducing parameters by over 80% on average

- Task Similarity Measurement
  - Pearson correlation on weights ($S_W$)
  - Pearson correlation on losses ($S_L$)
- Task Grouping
  - Graph-based maximal clique detection (Bron–Kerbosch)
- Dynamic Parameter Allocation
  - Pruning by task difficulty
  - Merging into group-specific subnetworks
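The three components above can be sketched end to end in NumPy. This is a minimal, non-authoritative sketch, not the repository's implementation. It assumes each task is summarized by a flattened weight vector (for example, of a task-specific layer) and a loss curve logged at the same training steps. The averaging rule `S = (S_W + S_L) / 2`, the edge threshold `tau`, the greedy largest-clique-first assignment, the linear difficulty-to-keep-ratio mapping, magnitude pruning, and merging by mask union are all hypothetical choices, and every function name is illustrative. Bron–Kerbosch is shown in its standard pivoting form.

```python
import numpy as np


def pearson_similarity(vectors):
    """Pairwise Pearson correlation between rows (one row per task)."""
    return np.corrcoef(np.asarray(vectors, dtype=float))


def bron_kerbosch(r, p, x, adj, cliques):
    """Bron–Kerbosch with pivoting: appends every maximal clique to `cliques`."""
    if not p and not x:
        cliques.append(sorted(r))
        return
    # Pivot on the vertex with the most neighbours in p to prune branches.
    pivot = max(p | x, key=lambda u: len(adj[u] & p))
    for v in list(p - adj[pivot]):
        bron_kerbosch(r | {v}, p & adj[v], x & adj[v], adj, cliques)
        p = p - {v}
        x = x | {v}


def group_tasks(weights, losses, tau=0.5):
    """Hypothetical grouping: average S_W and S_L, connect task pairs with
    S >= tau, then greedily take the largest maximal cliques as disjoint groups."""
    s = 0.5 * (pearson_similarity(weights) + pearson_similarity(losses))
    n = s.shape[0]
    adj = {i: {j for j in range(n) if j != i and s[i, j] >= tau} for i in range(n)}
    cliques = []
    bron_kerbosch(set(), set(range(n)), set(), adj, cliques)
    groups, assigned = [], set()
    for clique in sorted(cliques, key=len, reverse=True):
        # Any subset of a clique is still a clique, so dropping assigned tasks is safe.
        members = [t for t in clique if t not in assigned]
        if members:
            groups.append(members)
            assigned.update(members)
    return groups


def keep_ratios(difficulty, min_keep=0.05, max_keep=0.3):
    """Hypothetical: map difficulty (e.g. final loss) linearly to the fraction
    of weights each task keeps, so harder tasks keep more parameters."""
    d = np.asarray(difficulty, dtype=float)
    span = d.max() - d.min()
    norm = (d - d.min()) / span if span > 0 else np.zeros_like(d)
    return min_keep + (max_keep - min_keep) * norm


def magnitude_mask(w, keep):
    """Keep roughly the largest-|w| fraction `keep` of entries (ties may keep more)."""
    k = max(1, int(round(keep * w.size)))
    threshold = np.sort(np.abs(w), axis=None)[-k]
    return np.abs(w) >= threshold


def group_mask(task_weights, ratios, group):
    """Hypothetical merge: a group's subnetwork is the union of its tasks' masks."""
    mask = np.zeros_like(task_weights[group[0]], dtype=bool)
    for t in group:
        mask |= magnitude_mask(task_weights[t], ratios[t])
    return mask


if __name__ == "__main__":
    # Toy data: tasks 0/1 and tasks 2/3 are perfectly correlated pairs.
    weights = [[1, 2, 3, 4], [2, 4, 6, 8], [4, 3, 2, 1], [8, 6, 4, 2]]
    losses = [[5, 4, 3, 2], [6, 5, 4, 3], [2, 3, 4, 5], [1, 2, 3, 4]]
    print(group_tasks(weights, losses))  # [[0, 1], [2, 3]]
```

Using an explicit similarity threshold keeps the task graph sparse, so maximal cliques correspond to sets of tasks that are all pairwise similar rather than merely chained together. How the paper resolves tasks that fall in several overlapping cliques is not stated here; the greedy rule above is only one option.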

Task-Aware-DMO/

├── models/ # Backbone network, task-specific heads, pruning utilities

├── train.py # Training logic

├── evaluate.py # Evaluation and metrics

├── config/ # Experimental settings

├── results/ # Graphs and tables from the paper

├── assets/ # Images and visual materials for README

└── paper/ # Original PDF paper for reference

- CIFAR-10, STL-10, MNIST, USPS

- CIFAR-100 (20-task version)

- Visual Decathlon Challenge (10 diverse tasks)

| Dataset Group | Param Reduction | Accuracy (Avg) |
|---|---|---|
| CIFAR-10 + MNIST | ~84% | ⭤ Comparable |
| CIFAR-100 (20 Tasks) | ~85% | ⬆️ Improved |
| Decathlon Challenge | ~88% | ⭤ Slight Drop |

🔹 Up to 97% parameter reduction on USPS with minimal performance loss

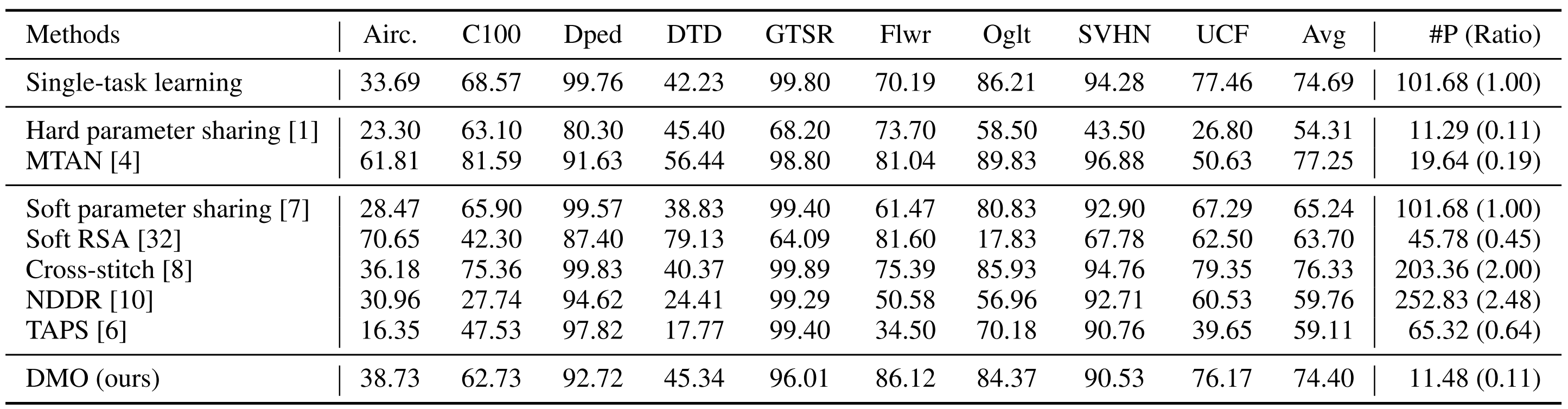

| Method | Avg Accuracy (%) | # Parameters (M) | Param Ratio (vs. Single-task) |
|---|---|---|---|
| Single-task | 74.69 | 101.68 | 1.00 |
| Soft Param Sharing [7] | 65.24 | 101.68 | 1.00 |
| Cross-stitch [8] | 76.33 | 203.36 | 2.00 |
| NDDR [10] | 59.76 | 252.86 | 2.49 |
| TAPS [6] | 59.11 | 130.50 | 1.28 |
| DMO (Ours) | 74.40 | 11.48 | 0.11 |

Figure: Visual Decathlon Challenge results table (from the IEEE Access paper)
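The Param Ratio column appears to be each method's parameter count divided by the single-task baseline (101.68 M). The short check below recomputes it from the table's own numbers; the dictionary and the `param_ratio` helper are illustrative only and are not part of the repository.

```python
# Parameter counts (millions), copied from the Visual Decathlon table.
params_m = {
    "Single-task": 101.68,
    "Soft Param Sharing": 101.68,
    "Cross-stitch": 203.36,
    "NDDR": 252.86,
    "TAPS": 130.50,
    "DMO (Ours)": 11.48,
}
baseline = params_m["Single-task"]


def param_ratio(name):
    """Parameter count relative to the single-task baseline."""
    return params_m[name] / baseline


for name in params_m:
    ratio = param_ratio(name)
    # Negative "saved" values mean more parameters than the baseline.
    print(f"{name:<20} ratio={ratio:.2f}  saved={1 - ratio:.1%}")
```

The recomputed ratios match the table to two decimals, and DMO's 11.48 / 101.68 ≈ 0.113 corresponds to about an 88.7% parameter reduction, in line with the ~88% reported for the Decathlon group.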

Su-jin Choi (최수진)

Master of AI, Chung-Ang University

📧 [email protected]

📄 IEEE Access Paper