This repository implements a deep learning model to solve the Image-to-LaTeX task: converting images of mathematical formulas into their corresponding LaTeX code. Inspired by the work of Guillaume Genthial (2017), this project explores various encoder-decoder architectures based on the Seq2Seq framework to improve the accuracy of LaTeX formula generation from images.

🧠 Motivation: Many students, researchers, and professionals encounter LaTeX-based documents but lack the ability to extract and reuse formulas quickly. This project aims to automate the conversion of math formula images into editable LaTeX.

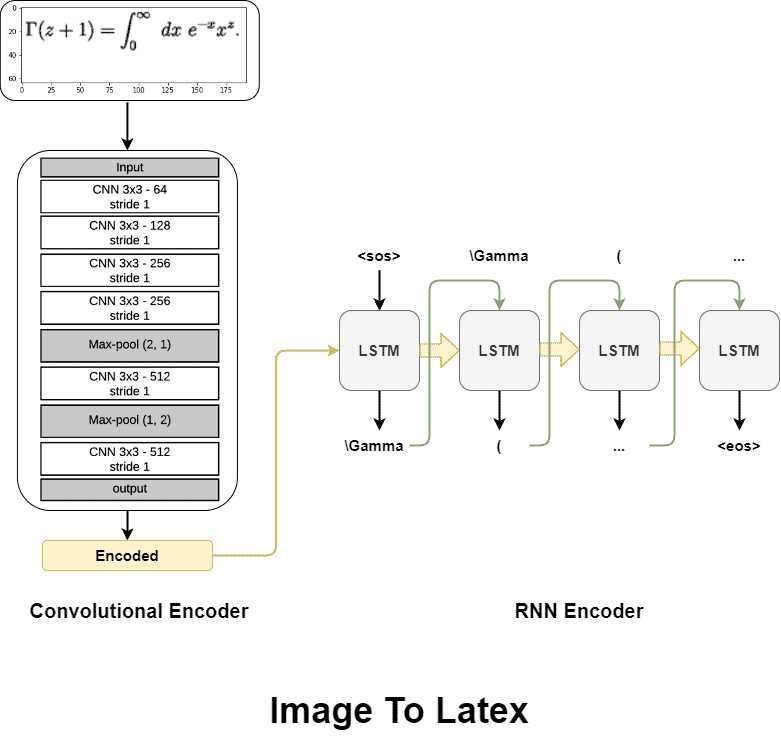

Our model follows an encoder–decoder architecture with attention:

- Encoder: Several configurations built on convolutional neural networks (CNNs), either a custom convolutional stack or a ResNet-18 backbone, optionally followed by a Row Encoder (BiLSTM).

- Decoder: A unidirectional LSTM network.

- Attention: Luong attention lets the decoder weight the relevant regions of the encoded image at each decoding step, improving decoding accuracy.
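
A minimal sketch of one decoding step with Luong-style global attention, using the "dot" score variant. Luong et al. also describe "general" and "concat" scores, and which one this repository uses is not stated here, so the dot score is an assumption. Plain Python lists stand in for tensors, and the function names and toy weights are hypothetical, not taken from the repository's code.

```python
import math
from typing import List, Tuple

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: Vector) -> Vector:
    # Subtract the maximum score before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def luong_dot_attention(h_t: Vector, encoder_states: List[Vector]) -> Tuple[Vector, Vector]:
    """Global Luong attention with the 'dot' score: score(h_t, h_s) = h_t . h_s."""
    weights = softmax([dot(h_t, h_s) for h_s in encoder_states])
    # Context vector c_t: attention-weighted sum of the encoder annotations.
    dim = len(encoder_states[0])
    context = [sum(w * h_s[i] for w, h_s in zip(weights, encoder_states)) for i in range(dim)]
    return weights, context


def attentional_hidden(context: Vector, h_t: Vector, W_c: List[Vector]) -> Vector:
    # h~_t = tanh(W_c [c_t; h_t]); each row of W_c has length len(c_t) + len(h_t).
    concat = context + h_t
    return [math.tanh(dot(row, concat)) for row in W_c]


# Toy example: three 2-d encoder annotations and one decoder state.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
h_t = [2.0, 0.0]
weights, context = luong_dot_attention(h_t, encoder_states)
W_c = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]  # hypothetical projection weights
h_tilde = attentional_hidden(context, h_t, W_c)
```

The decoder state `h_t` is scored against every encoder annotation, the softmax turns the scores into weights, and the weighted sum `context` is combined with `h_t` into the attentional state that would feed the output layer over LaTeX tokens.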

Supported Encoder Variants:

- 🧱 Pure Convolution

- 🧱 Convolution + Row Encoder (BiLSTM)

- 🧱 Convolution + Batch Normalization

- 🧱 ResNet-18

- 🧱 ResNet-18 + Row Encoder (BiLSTM)
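
A rough sketch of what the Row Encoder (BiLSTM) adds on top of the CNN: each row of the convolutional feature map is treated as a left-to-right sequence, encoded in both directions, and the per-position outputs are flattened into the annotation vectors that the attention reads. To stay short and dependency-free, it substitutes a simple tanh RNN cell for the LSTM cell and starts every row from a zero state; the function names and weights are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]
RNNParams = Tuple[Matrix, Matrix, Vector]  # (W_x, W_h, b)


def matvec(M: Matrix, v: Vector) -> Vector:
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def rnn_pass(seq: List[Vector], params: RNNParams) -> List[Vector]:
    """Run a simple tanh RNN over a sequence (a stand-in for an LSTM cell)."""
    W_x, W_h, b = params
    h = [0.0] * len(b)
    outputs = []
    for x in seq:
        pre = [px + ph + pb for px, ph, pb in zip(matvec(W_x, x), matvec(W_h, h), b)]
        h = [math.tanh(p) for p in pre]
        outputs.append(h)
    return outputs


def row_encode(feature_map: List[List[Vector]], fwd: RNNParams, bwd: RNNParams) -> List[Vector]:
    """Encode each row of an H x W x C feature map in both directions and
    flatten the result into H * W annotation vectors of size 2 * d."""
    annotations = []
    for row in feature_map:  # each row is a sequence of W feature vectors
        forward = rnn_pass(row, fwd)
        backward = rnn_pass(row[::-1], bwd)[::-1]  # restore left-to-right order
        for f, bk in zip(forward, backward):
            annotations.append(f + bk)  # concatenate both directions
    return annotations


# Toy 2 x 3 feature map with C = 2 channels; hidden size d = 2 per direction.
feature_map = [
    [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],
    [[0.5, 0.5], [0.2, 0.1], [0.0, 0.0]],
]
params = ([[0.5, -0.5], [0.1, 0.2]], [[0.1, 0.0], [0.0, 0.1]], [0.0, 0.0])  # hypothetical weights
annotations = row_encode(feature_map, fwd=params, bwd=params)
```

The usual motivation for this design is that each annotation then carries context from its whole row rather than only its local receptive field. Some row-encoder designs also learn a separate initial state per row to encode vertical position, which this sketch omits.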

Datasets:

- IM2LATEX-100k: a commonly used dataset for benchmarking Image-to-LaTeX models.

- Preprocessed version available: im2latex-sorted-by-size

- IM2LATEX-170k: a larger dataset with more complex LaTeX expressions.

- Extended version with metadata: im2latex-170k-meta-data
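
The name im2latex-sorted-by-size suggests that samples are ordered by image dimensions. A common reason for this is batching: if every image in a batch has the same width and height, the batch can be stacked without padding. The sketch below groups samples that way, assuming each sample is an `(image_name, width, height)` tuple; that record format and the function name are hypothetical, not the dataset's actual schema.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Sample = Tuple[str, int, int]  # hypothetical (image_name, width, height)


def bucket_by_size(samples: List[Sample], batch_size: int) -> List[List[Sample]]:
    """Group samples with identical (width, height) and cut each group into batches,
    so every batch can be stacked into one tensor without padding."""
    buckets: Dict[Tuple[int, int], List[Sample]] = defaultdict(list)
    for sample in samples:
        _, w, h = sample
        buckets[(w, h)].append(sample)
    batches = []
    for size in sorted(buckets):  # visit buckets in (width, height) order
        group = buckets[size]
        for i in range(0, len(group), batch_size):
            batches.append(group[i:i + batch_size])
    return batches


samples = [
    ("a.png", 200, 50),
    ("b.png", 160, 40),
    ("c.png", 200, 50),
    ("d.png", 200, 50),
    ("e.png", 160, 40),
]
batches = bucket_by_size(samples, batch_size=2)
```

Here the two 160x40 images form one batch and the three 200x50 images form a full batch plus a smaller trailing batch.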

Install the required packages, log in to Weights & Biases (wandb), and run `main.py`:

```bash
pip install -r requirements.txt
wandb login <your-wandb-key>
python main.py \
  --batch-size 2 \
  --data-path <path-to-dataset> \
  --img-path <path-to-dataset>/formula_images \
  --dataset 170k \
  --val \
  --decode-type beamsearch
```

From experiments using the IM2LATEX-100k dataset, the best-performing architecture was:

- Convolutional Feature Encoder + BiLSTM Row Encoder

- Achieved a BLEU-4 score of 77%
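
BLEU-4 is the geometric mean of the clipped 1- to 4-gram precisions, multiplied by a brevity penalty. Below is a minimal corpus-level sketch, assuming one reference per formula and whitespace-separated LaTeX tokens. The script behind the 77% figure may tokenize differently or apply smoothing (NLTK's `corpus_bleu` is a common off-the-shelf alternative), so this illustrates the metric rather than reproducing the reported number.

```python
import math
from collections import Counter
from typing import List, Sequence


def ngrams(tokens: Sequence[str], n: int) -> Counter:
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu4(references: List[List[str]], hypotheses: List[List[str]]) -> float:
    """Corpus-level BLEU-4: one reference per hypothesis, uniform weights, no smoothing."""
    matches = [0] * 4
    totals = [0] * 4
    ref_len = hyp_len = 0
    for ref, hyp in zip(references, hypotheses):
        ref_len += len(ref)
        hyp_len += len(hyp)
        for n in range(1, 5):
            hyp_counts = ngrams(hyp, n)
            ref_counts = ngrams(ref, n)
            # Clip each hypothesis n-gram count by its count in the reference.
            matches[n - 1] += sum(min(c, ref_counts[g]) for g, c in hyp_counts.items())
            totals[n - 1] += max(len(hyp) - n + 1, 0)
    if min(matches) == 0:
        return 0.0  # any zero precision makes the geometric mean zero
    log_precision = sum(math.log(m / t) for m, t in zip(matches, totals)) / 4
    # Brevity penalty: penalize hypotheses that are shorter than the references.
    bp = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / hyp_len)
    return bp * math.exp(log_precision)


reference = r"\frac { a } { b }".split()
perfect = corpus_bleu4([reference], [reference])
partial = corpus_bleu4([reference], [r"\frac { a } { c }".split()])
```

An exact match scores 1.0. A single wrong token in a short formula already removes several higher-order n-gram matches, so the second prediction scores noticeably lower.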

Pre-trained model performance and evaluation can be explored in Kaggle Notebooks.

Possible future directions:

- Integrating Transformer-based decoders

- Exploring pretrained vision encoders like ViT

- Improving performance on noisy or low-resolution images

Nguyễn Văn Anh Tuấn 📍 IUH - Industrial University of Ho Chi Minh City