Add support for stream output in Gradio demo #630

    @@ -143,24 +138,34 @@ def predict(
        with gr.Row():

line 135: The banner image seems too big; please consider resizing it to a smaller one if possible. Also, try to use an absolute path for this image, as someone may run gradio_web_demo.py from another directory.

line 136: missing "。" at the end of the sentence.

Hi, thanks again for your PR. I have checked that the script is functional on Colab.

Comments from GPT-4 Code Interpreter (it is up to you to follow its advice or not): The code seems well structured, but here are a few suggestions that might improve its overall quality:

Please note that these suggestions are based on general good practices for Python development. The specific needs and constraints of your project might mean that some of these suggestions are not applicable or need to be modified.

Thanks for your valuable advice! I will modify the code accordingly. I am really happy that you gave me so much advice on my code! 😃

I made some minor comments.

After these issues are resolved, I'll merge this PR into the main branch.

Thanks.

    with gr.Blocks() as demo:
        gr.HTML("""<h1 align="center">Chinese LLaMA & Alpaca LLM</h1>""")
        current_file_path = os.path.abspath(os.path.dirname(__file__))
        gr.Image(f'{current_file_path}/../../pics/banner.png', label = 'Chinese LLaMA & Alpaca LLM')

Should this be small_banner.png instead of banner.png?

scripts/inference/gradio_demo.py

Outdated

        value=128,
        step=1.0,
        label="Maximum New Token Length",
        interactive=True)
    top_p = gr.Slider(0, 1, value=0.8, step=0.01,

It would be better to set top_p=0.9 as default.

@ymcui

Description:

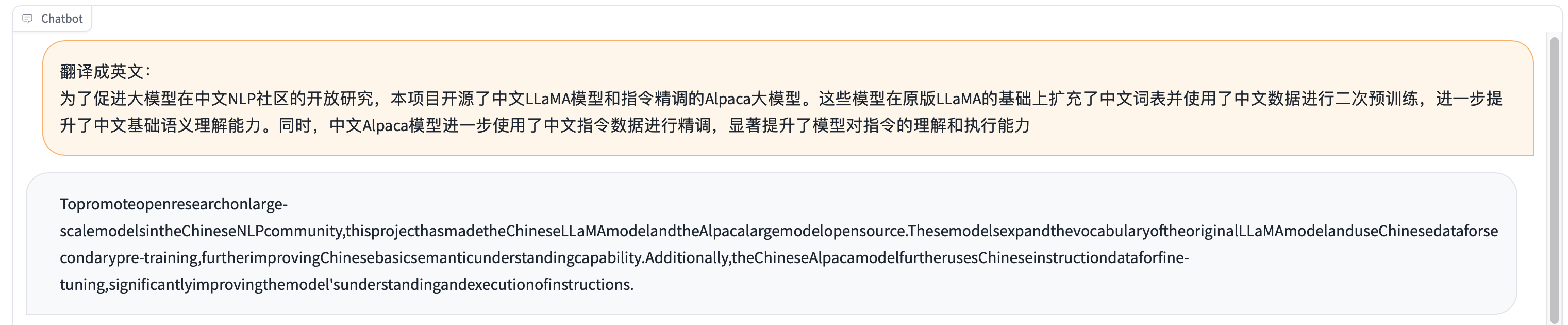

This pull request adds support for streaming output in the Gradio demo. With this change, the model's response appears in the UI incrementally as it is generated, rather than only after generation finishes, which makes the demo feel more interactive.
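For readers unfamiliar with how streaming works in Gradio, here is a minimal sketch of the general pattern. It is not the code from this PR. Gradio treats a generator function as a streaming handler and re-renders the output component on every `yield`. The names `stream_reply`, `build_demo`, and the inner `predict` are hypothetical, and the "model" simply echoes the prompt word by word.

```python
import time
from typing import Iterable, Iterator


def stream_reply(tokens: Iterable[str], delay: float = 0.0) -> Iterator[str]:
    """Yield the reply accumulated so far after each new token."""
    partial = ""
    for token in tokens:
        partial += token
        if delay:
            time.sleep(delay)  # simulate per-token generation latency
        yield partial


def build_demo():
    """Wire the generator into a Gradio UI (assumes gradio is installed)."""
    import gradio as gr  # imported lazily so stream_reply works without Gradio

    def predict(prompt: str) -> Iterator[str]:
        # Hypothetical stand-in for model generation: echo the prompt word by word.
        words = [word + " " for word in prompt.split()]
        yield from stream_reply(words, delay=0.05)

    with gr.Blocks() as demo:
        box_in = gr.Textbox(label="Prompt")
        box_out = gr.Textbox(label="Streamed output")
        gr.Button("Submit").click(predict, inputs=box_in, outputs=box_out)
    demo.queue()  # generator handlers need the queue enabled in Gradio 3.x
    return demo
```

Calling `build_demo().launch()` would start the UI. Each yielded string replaces the previous content of the output box, which is why the generator yields the accumulated text rather than individual tokens.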

Demo video: https://www.bilibili.com/video/BV1zW4y1S7tS/?vd_source=87997328b39dd6fa2449ef2da981cfcc

Changes Made: