Implementation of A3C (Asynchronous Advantage Actor-Critic) using TensorFlow v0.9. It is easy to modify so that it runs on later versions.

Clone the multi-thread-supported Arcade Learning Environment (ALE) from here, then build (make) and install it. These modifications to ALE are necessary to avoid multi-threading problems.
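A3C runs several learner threads concurrently, each stepping its own emulator instance, so the emulator has to be safe to use from multiple threads. The sketch below illustrates that pattern only. It assumes a hypothetical `DummyEnv` standing in for ALE and a shared global step counter guarded by a lock. It is not this repository's code.

```python
import threading


class SharedCounter:
    """Global step counter T shared by all learner threads."""

    def __init__(self):
        self._value = 0
        self._lock = threading.Lock()

    def add(self, n):
        # The lock prevents concurrent increments from losing updates.
        with self._lock:
            self._value += n
            return self._value

    @property
    def value(self):
        with self._lock:
            return self._value


class DummyEnv:
    """Hypothetical stand-in for one per-thread emulator (ALE) instance."""

    def __init__(self, seed):
        self.seed = seed
        self.steps = 0

    def step(self):
        self.steps += 1


def learner(thread_id, counter, global_t_max, local_t_max, results):
    env = DummyEnv(seed=thread_id)  # each thread owns its own environment
    while counter.value < global_t_max:
        for _ in range(local_t_max):
            env.step()
        # A real learner would compute gradients and update shared weights here.
        counter.add(local_t_max)
    results[thread_id] = env.steps


def run(threads_num=8, global_t_max=1000, local_t_max=5):
    counter = SharedCounter()
    results = {}
    threads = [
        threading.Thread(
            target=learner,
            args=(i, counter, global_t_max, local_t_max, results),
        )
        for i in range(threads_num)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value, results
```

Because threads check the counter before adding to it, the final count can overshoot `global_t_max` by up to `threads_num * local_t_max` steps, which is usually harmless for training.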

$ python main.py

There are several options to change learning parameters and behaviors:

- rom: Atari rom file to play. Defaults to breakout.bin.

- threads_num: Number of learner threads to run in parallel. Defaults to 8.

- local_t_max: Number of steps to look ahead. Defaults to 5.

- global_t_max: Number of iterations to train. Defaults to 8e7 (80 million). The learning rate decreases in proportion to this value.

- use_gpu: Whether to use the GPU or the CPU. Defaults to True. Set it to False to use the CPU.

- shrink_image: Whether to just shrink the state image, rather than trim and then shrink it. Defaults to False.

- life_lost_as_end: Treat losing a life in the game as a terminal state. Defaults to True.

- evaluate: Evaluate trained network. Defaults to False.
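To illustrate what `local_t_max`, `global_t_max` and `life_lost_as_end` control, here is a minimal sketch of the standard A3C n-step discounted return and a linear learning-rate decay. The discount `gamma`, the initial learning rate and the exact decay schedule (linear down to zero at `global_t_max`) are assumptions; the repository's own code may differ.

```python
def n_step_returns(rewards, bootstrap_value, terminal, gamma=0.99):
    """Discounted returns for one rollout of up to local_t_max steps.

    If the rollout ended in a terminal state (for example a lost life when
    life_lost_as_end is on), nothing is bootstrapped past that state.
    """
    R = 0.0 if terminal else bootstrap_value
    returns = []
    # Walk the rollout backwards: R_t = r_t + gamma * R_{t+1}.
    for r in reversed(rewards):
        R = r + gamma * R
        returns.append(R)
    returns.reverse()
    return returns


def annealed_learning_rate(initial_lr, global_t, global_t_max):
    """Linearly decay the learning rate to zero at global_t_max (assumed schedule)."""
    remaining = max(global_t_max - global_t, 0)
    return initial_lr * remaining / global_t_max


# Example: a 3-step rollout bootstrapped from a value estimate of 10.0.
print(n_step_returns([1.0, 0.0, 1.0], bootstrap_value=10.0, terminal=False, gamma=0.5))
# -> [2.5, 3.0, 6.0]
```

With `terminal=True` the value estimate is ignored, so the same rewards give `[1.25, 0.5, 1.0]`.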

Options can be used like follows

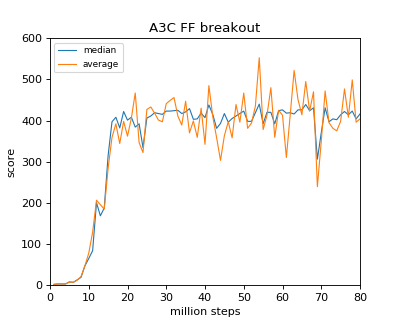

$ python main.py --rom="pong.bin" --threads_num=4The result trained for 80 Million steps with 8 threads. It took about 40 hours with 8 core Ryzen 1800X.
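For readers unfamiliar with `--name=value` style options, the sketch below shows a hypothetical `argparse` equivalent of the options listed above, using their documented defaults. The real `main.py` may define its flags differently; `--checkpoint_dir` and `--trained_file` (used for evaluation) are given a placeholder default of `None` because their real defaults are not stated.

```python
import argparse


def str2bool(value):
    """Accept True/False spellings such as --use_gpu=False."""
    if value.lower() in ("true", "1", "yes"):
        return True
    if value.lower() in ("false", "0", "no"):
        return False
    raise argparse.ArgumentTypeError("expected True or False, got %r" % value)


def int_from_float(value):
    """Allow scientific notation such as --global_t_max=8e7."""
    return int(float(value))


def build_parser():
    p = argparse.ArgumentParser(description="A3C options (hypothetical sketch)")
    p.add_argument("--rom", default="breakout.bin")
    p.add_argument("--threads_num", type=int, default=8)
    p.add_argument("--local_t_max", type=int, default=5)
    p.add_argument("--global_t_max", type=int_from_float, default=80_000_000)
    p.add_argument("--use_gpu", type=str2bool, default=True)
    p.add_argument("--shrink_image", type=str2bool, default=False)
    p.add_argument("--life_lost_as_end", type=str2bool, default=True)
    p.add_argument("--evaluate", type=str2bool, default=False)
    p.add_argument("--checkpoint_dir", default=None)  # placeholder default
    p.add_argument("--trained_file", default=None)    # placeholder default
    return p


# The shell strips the quotes in --rom="pong.bin", so argparse sees --rom=pong.bin.
args = build_parser().parse_args(["--rom=pong.bin", "--threads_num=4"])
print(args.rom, args.threads_num, args.use_gpu)
# -> pong.bin 4 True
```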

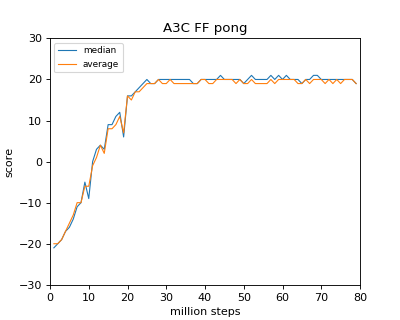

Result after training for 80 million steps with 8 threads. It took about 34 hours on an 8-core Ryzen 1800X.

To evaluate a trained network:

$ python main.py --evaluate=True --checkpoint_dir=trained_results/breakout/ --trained_file=network_parameters-80002500

License: MIT