
Problem Definition

The proposed idea approaches the demand-side management problem with a smart dynamic time-of-use (dToU) tariff policy to further improve user engagement. The goal is to design a tariff policy that matches the observed mean consumption to a target mean consumption. While refining the tariff policy, the algorithm also builds a probability distribution over users based on how strongly their participation influences overall consumption. The target outcome of the experiment is the smallest number of tariff signals, sent to specific users, needed to match the target mean consumption.

For this experimental setup, users are categorized by engagement as follows:

- Users with more than 95% engagement

- Users with more than 60% engagement

- Users with more than 30% engagement

- Users with no engagement

The above categories are proposed for initial conceptualization and may change over time.
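A minimal sketch of how users might be bucketed into these categories, assuming engagement is measured as the fraction of received tariff signals a user responded to (the source does not define the metric, so this is an assumption). Because the thresholds as listed overlap, the sketch assigns each user to the highest tier they exceed; users between 0% and 30% fall outside the proposed categories and are reported separately. The function names and user IDs are hypothetical.

```python
from typing import Dict, List

# Proposed tiers, checked from highest to lowest; engagement is a fraction in [0, 1].
# Treating engagement as "fraction of signals responded to" is an assumption.
TIERS = [
    (0.95, "more than 95% engagement"),
    (0.60, "more than 60% engagement"),
    (0.30, "more than 30% engagement"),
]


def engagement_category(rate: float) -> str:
    """Return the highest proposed tier whose threshold the rate exceeds."""
    if not 0.0 <= rate <= 1.0:
        raise ValueError("engagement rate must be in [0, 1]")
    if rate == 0.0:
        return "no engagement"
    for threshold, label in TIERS:
        if rate > threshold:
            return label
    # 0% < rate <= 30% is not covered by the proposed categories.
    return "uncategorized (0-30% engagement)"


def categorize_users(rates: Dict[str, float]) -> Dict[str, List[str]]:
    """Group (hypothetical) user IDs by engagement category."""
    groups: Dict[str, List[str]] = {}
    for user_id, rate in rates.items():
        groups.setdefault(engagement_category(rate), []).append(user_id)
    return groups


print(categorize_users({"u1": 0.97, "u2": 0.70, "u3": 0.40, "u4": 0.0}))
```

Since the categories may change, keeping the thresholds in a single table like `TIERS` means only that list needs editing.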

- Upper limit on the number of test signals:

  - For each experimental setup, only a limited number of test signals will be provided.

  - The algorithm can decide the number of recipients for each test signal.

- Available tariff policies:

  - The model chooses from a predefined set of tariff policies for each case. This restriction is imposed because tariff policy design is subject to other constraints, such as a minimum duration for each tariff level, predictable peak hours, and a limit on the maximum number of experiments per day (in other words, why reinvent the wheel!).

- Minimize the loss function:

  - Loss is calculated as a quadratic function of the difference between the expected mean consumption and the target mean consumption.
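As a minimal sketch, with L = (expected mean - target mean)², the loss can be computed as below. Here the expected mean is taken as the plain average of a set of consumption readings (for example, the simulator output for one trial); that choice and the function name are assumptions for illustration.

```python
import statistics
from typing import Sequence


def quadratic_loss(readings: Sequence[float], target_mean: float) -> float:
    """Squared difference between the mean of the readings and the target mean.

    `readings` stands in for the (hypothetical) consumption values observed or
    simulated for one trial; their average is used as the expected mean.
    """
    if not readings:
        raise ValueError("at least one reading is required")
    expected_mean = statistics.fmean(readings)
    return (expected_mean - target_mean) ** 2


# Mean of the readings is 8.0, so the loss against a 7.5 target is 0.5 ** 2.
print(quadratic_loss([9.0, 8.0, 7.0], target_mean=7.5))  # 0.25
```

Squaring penalizes overshooting and undershooting the target equally and weights large deviations more heavily than small ones.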

The problem is twofold. The first step is to identify the best tariff policy from the given set of tariff policies. The second, more interesting problem is to minimize the number of tariff signals sent in a particular trial.

Although designing the experiment for a dToU tariff policy is a simple problem (because peak hours are known and predictable), its complexity increases when we try to minimize the total number of signals sent. At this point, the problem can be analyzed from two different views:

- Frequentist formulation (the classical machine learning view)

- Bayesian formulation

Bayesian analysis is better suited to this problem than classical frequentist analysis because of the following advantages:

- Use of priors. Priors make it easier to compensate for a shift in the target variable. The idea of 'target shift' seems more important here than 'covariate shift', because user behaviour changes over time while the contextual variables remain more or less the same.

- Bayesian analysis tells us how likely it is that 'A' beats 'B', and by how much. This makes the decision-making process more transparent for an energy retailer in practice. Frequentist analysis, by contrast, depends entirely on a prespecified hypothesis.

- Bayesian analysis does not require the user to define many hyperparameters or thresholds to formulate a valid hypothesis, which makes the algorithm more robust and reliable. This is especially useful here because the entire hypothesis formulation depends on simulation data. Therefore, models based on Bayesian hypotheses should ideally perform better in the real world.
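To illustrate the "how likely A beats B, and by how much" point, here is a minimal sketch of a Beta-Bernoulli comparison between two tariff policies A and B. It assumes each signal outcome is binary (the recipient either reduced consumption or did not) and uses a uniform Beta(1, 1) prior; both are assumptions for illustration, and the counts are made up. Monte Carlo draws from the two posteriors give the probability that A beats B and the expected size of the difference.

```python
import random
from typing import Tuple


def prob_a_beats_b(
    successes_a: int, trials_a: int,
    successes_b: int, trials_b: int,
    prior: Tuple[float, float] = (1.0, 1.0),
    n_draws: int = 20_000,
    seed: int = 0,
) -> Tuple[float, float]:
    """Return (P(rate_A > rate_B), E[rate_A - rate_B]) under Beta posteriors.

    A "success" is assumed to mean that a signalled user reduced consumption.
    """
    rng = random.Random(seed)
    alpha0, beta0 = prior
    wins = 0
    lift_sum = 0.0
    for _ in range(n_draws):
        # Beta(prior + successes, prior + failures) is the conjugate posterior.
        rate_a = rng.betavariate(alpha0 + successes_a, beta0 + trials_a - successes_a)
        rate_b = rng.betavariate(alpha0 + successes_b, beta0 + trials_b - successes_b)
        wins += rate_a > rate_b
        lift_sum += rate_a - rate_b
    return wins / n_draws, lift_sum / n_draws


# Hypothetical counts: 45 of 100 users responded to A, 30 of 100 to B.
p_win, lift = prob_a_beats_b(45, 100, 30, 100)
print(f"P(A beats B) = {p_win:.3f}, expected lift = {lift:.3f}")
```

Unlike a p-value, the output is a direct statement of the form "A beats B with probability p, by an expected margin d", which is what a retailer can act on.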

Both problems will be solved with active learning. The first problem can be solved with frequentist analysis and hypothesis testing, since the solution converges easily when the set of tariff policies is preset. Hence, the tariff-policy selection problem can be solved with any available probabilistic method.
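A minimal frequentist sketch of the first problem: the test-signal budget is spread round-robin across the predefined tariff policies, the sample mean consumption is estimated for each, and the policy with the smallest quadratic loss against the target is chosen. The simulator, the policy names, and their mean values are hypothetical stand-ins. A true active-learning variant would reallocate the remaining budget toward the most promising policies instead of using a fixed round-robin.

```python
import random
import statistics
from typing import Callable, Dict, List, Tuple


def choose_tariff_policy(
    policies: List[str],
    simulate: Callable[[str], float],
    target_mean: float,
    budget: int,
) -> Tuple[str, Dict[str, float]]:
    """Pick the preset policy whose sample mean consumption is closest to the target."""
    if budget < len(policies):
        raise ValueError("budget must allow at least one trial per policy")
    observations: Dict[str, List[float]] = {p: [] for p in policies}
    for i in range(budget):
        policy = policies[i % len(policies)]  # fixed round-robin allocation
        observations[policy].append(simulate(policy))
    # Quadratic loss between each policy's sample mean and the target mean.
    losses = {
        p: (statistics.fmean(obs) - target_mean) ** 2
        for p, obs in observations.items()
    }
    best = min(losses, key=losses.get)
    return best, losses


# Hypothetical simulator: each preset policy yields noisy mean consumption (kW).
rng = random.Random(42)
TRUE_MEANS = {"flat": 10.0, "peak_2h": 8.5, "peak_4h": 7.2}


def simulate(policy: str) -> float:
    return rng.gauss(TRUE_MEANS[policy], 0.3)


best, losses = choose_tariff_policy(list(TRUE_MEANS), simulate, target_mean=7.0, budget=60)
print(best, losses)
```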

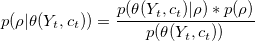

Bayesian analysis is used to choose the optimal number of tariff signals to send to specific users (prioritized by the algorithm) in order to meet the target consumption level. The Bayes rule for this particular problem can be formulated as below,

Where,

The above Bayesian approach directly uses the simulator-generated 'observation' as the expected mean consumption. A more practical approach would be to use contextual variables. The target mean consumption will be provided to the model from preset targets. ρ is the distribution of the contributions of the k users to the total change in energy consumption.
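The source defines ρ but not the model behind it, so the following is only one possible sketch. It assumes each user's probability of responding to a signal has a Beta posterior updated after every signal, that each user has a known typical reduction when responding, and that the observed and target values are aggregate mean demand in kW, so their gap is the total reduction the selected users must deliver. ρ is then taken as each user's share of the total expected reduction, and recipients are picked largest-first until the gap is covered; for this covering objective, largest-first yields the fewest recipients. All names and numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class UserBelief:
    """Beta posterior over one user's probability of responding to a signal."""
    user_id: str
    reduction_kw: float  # hypothetical typical reduction when the user responds
    alpha: float = 1.0   # prior + observed responses
    beta: float = 1.0    # prior + observed non-responses

    def update(self, responded: bool) -> None:
        if responded:
            self.alpha += 1.0
        else:
            self.beta += 1.0

    @property
    def p_response(self) -> float:
        return self.alpha / (self.alpha + self.beta)  # posterior mean

    @property
    def expected_reduction(self) -> float:
        return self.p_response * self.reduction_kw


def contribution_distribution(users: List[UserBelief]) -> Dict[str, float]:
    """rho: each user's share of the total expected consumption change."""
    if not users:
        return {}
    total = sum(u.expected_reduction for u in users)
    if total == 0:
        return {u.user_id: 1.0 / len(users) for u in users}
    return {u.user_id: u.expected_reduction / total for u in users}


def select_recipients(users: List[UserBelief], observed: float, target: float) -> List[str]:
    """Fewest users whose expected reductions cover the gap (largest first)."""
    gap = observed - target
    chosen: List[str] = []
    covered = 0.0
    for u in sorted(users, key=lambda x: x.expected_reduction, reverse=True):
        if covered >= gap:
            break
        chosen.append(u.user_id)
        covered += u.expected_reduction
    return chosen  # contains every user if the gap cannot be covered


users = [
    UserBelief("u1", reduction_kw=2.0, alpha=9.0, beta=1.0),
    UserBelief("u2", reduction_kw=3.0),
    UserBelief("u3", reduction_kw=2.0, alpha=1.0, beta=9.0),
]
print(contribution_distribution(users))
print(select_recipients(users, observed=10.0, target=7.0))
```

After each trial, calling `update` with each recipient's observed response shifts the posteriors, so users whose engagement drops over time (target shift) gradually move down the ranking.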

What this approach can do:

- design a dToU tariff policy with an active learning technique

- cope with changing user behaviour over time (target shift)

- organically solve the user selection problem, taking each user's interest in the dToU scheme into account

- make the selection of users who receive price signals transparent, through the Bayesian approach

The following tests can be carried out to assess the effectiveness of the approach:

- Analyze the effect of the number of training tariff signals on final model accuracy

- Analyze the effect of the number of tariff signals sent per experiment on accuracy

- Analyze target shift / covariate shift by changing the input data
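As a minimal sketch of the first test, the following uses a hypothetical single user with a known true response probability, estimates it from different numbers of training signals with a Beta-Bernoulli posterior mean, and reports the mean absolute error over repeated runs. The true probability, the prior, and the repeat count are assumptions; in the actual study, the simulator would replace the random draws.

```python
import random
import statistics
from typing import Dict, Iterable, Tuple


def posterior_mean_error(n_signals: int, true_p: float, rng: random.Random,
                         prior: Tuple[float, float] = (1.0, 1.0)) -> float:
    """Absolute error of the Beta posterior mean after n_signals Bernoulli trials."""
    responses = sum(rng.random() < true_p for _ in range(n_signals))
    alpha0, beta0 = prior
    post_mean = (alpha0 + responses) / (alpha0 + beta0 + n_signals)
    return abs(post_mean - true_p)


def sweep_training_signals(signal_counts: Iterable[int], true_p: float = 0.7,
                           repeats: int = 200, seed: int = 0) -> Dict[int, float]:
    """Mean absolute error for each number of training signals."""
    rng = random.Random(seed)
    return {
        n: statistics.fmean([posterior_mean_error(n, true_p, rng) for _ in range(repeats)])
        for n in signal_counts
    }


for n, mae in sweep_training_signals([5, 20, 100, 500]).items():
    print(f"{n:>4} signals: mean absolute error {mae:.3f}")
```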