-

Notifications

You must be signed in to change notification settings - Fork 497

Apache Iceberg: The Leading Table Format

Apache Iceberg has emerged as the leader in open table formats in 2025, culminating years of development and industry competition. This remarkable rise represents a significant shift in how organizations manage large-scale data lakes and lakehouses. What was once considered less performant and less promising just a few years ago has now become the industry standard, backed by major technology companies and enjoying widespread adoption. The following analysis explores the key factors behind Iceberg's dominance, from technical advantages to strategic industry moves that have solidified its position as the preferred open table format.

The ascendancy of Apache Iceberg can be largely attributed to strategic industry shifts and major corporate endorsements. By 2025, several pivotal developments have cemented Iceberg's position as the leading table format. Databricks' acquisition of Tabular, the company founded by Iceberg's original creators, represented a major endorsement of the technology's potential and signaled a strategic shift to promote Iceberg alongside, and perhaps even prioritizing it over, their own Delta Lake format[10]. This move was particularly significant as it came from a company that had previously been heavily invested in promoting a competing solution.

Simultaneously, Snowflake made a dual announcement regarding Polaris and their commitment to supporting Iceberg natively[10]. This strategic decision further legitimized Iceberg as a cross-platform standard rather than just another competing format. With prominent query engine vendors like Starburst and Dremio supporting Polaris, the industry has aligned around Iceberg as a common standard[10]. This alignment has created a powerful network effect, encouraging more organizations to adopt the format.

Major corporations including Apple, Netflix, and Tencent have implemented Iceberg in their production environments and play significant roles within the community[1]. Their public support and contributions have accelerated development and added credibility to the format. Additionally, AWS's declaration of Iceberg support for S3 has made the technology more accessible to the vast AWS user base[2]. These endorsements collectively demonstrate how industry leaders have coalesced around Iceberg, creating momentum that has been difficult for competing formats to overcome.

Apache Iceberg's technical capabilities have been fundamental to its growing popularity in 2025. At its core, Iceberg boasts a straightforward design with a genuinely open specification that lacks any concealed proprietary elements[1]. This transparency makes it the simplest option for third-party integrations, fostering a rich ecosystem of compatible tools and services.

One of Iceberg's most compelling advantages is its multi-engine interoperability, making it the preferred choice for teams utilizing Spark, Trino, Flink, and Snowflake simultaneously[7]. Unlike Delta Lake, which is optimized primarily for Spark workflows, Iceberg's distributed architecture scales seamlessly across different processing engines[7]. This flexibility allows organizations to select the optimal tool for each task rather than being constrained by format limitations.

In terms of schema evolution, Iceberg permits column modifications without the need to rewrite data, while Delta necessitates explicit merge operations[7]. This capability significantly reduces operational overhead when data structures need to change. Similarly, Iceberg's approach to partitioning stands out—it automatically adjusts partitions, whereas Delta requires predetermined partitions that often result in costly rewrites[7]. For performance optimization, Iceberg employs Puffin files to enhance query performance, a feature that competing formats like Delta Lake do not offer[7].

Iceberg's ACID compliance ensures data integrity even with concurrent writes, which is essential for any serious data operation[11]. The format also supports time travel capabilities, allowing users to access historical versions of data for debugging, auditing, and compliance purposes[9][11]. These technical capabilities collectively provide a robust foundation that addresses many of the shortcomings of traditional data lakes, making Iceberg particularly attractive for organizations dealing with large-scale data operations.

The surge in Apache Iceberg's popularity coincides with its strategic positioning at the intersection of emerging data trends, particularly those related to artificial intelligence. As enterprises increasingly focus on AI initiatives in 2025, Iceberg has established itself as a foundational technology for building and maintaining the high-quality datasets necessary for machine learning workloads[5][11].

Data quality is paramount when developing AI models, and Iceberg's ACID properties and time travel capabilities ensure that data used for training and testing is both consistent and reliable[11]. This consistency helps accelerate model development and debugging, ultimately reducing time-to-market for AI projects. The format's ability to handle schema evolution without disrupting existing data access patterns is particularly valuable in the rapidly evolving field of AI, where data requirements frequently change.

The shift from traditional data pipelines to more comprehensive knowledge pipelines has also played into Iceberg's strengths[5]. As organizations seek to derive more value from their data assets, the format's ability to support multiple engines with unified storage creates an environment where data can be processed and analyzed more efficiently across different platforms and tools[5]. This aligns perfectly with the industry trend toward consolidation in data solutions, where organizations are looking to simplify their data architectures while maintaining flexibility[5].

Cost efficiency has become increasingly important in cloud-driven environments, and Iceberg optimizes both storage formats and query execution, reducing unnecessary data scans[11]. This means lower compute costs and more efficient resource usage—an essential factor when working with large-scale deployments, particularly for AI workloads that can be computationally intensive[3][11]. By 2025, organizations have recognized these advantages, driving further adoption of Iceberg as a cost-effective foundation for their data and AI strategies.

By 2025, Apache Iceberg has proven its value across diverse real-world scenarios, addressing several critical pain points in data management. One of the primary motivations for adoption has been the desire for data ownership and prevention of vendor lock-in[3]. By utilizing storage solutions not tied to specific platforms, organizations maintain greater control over their data assets and retain the flexibility to switch between service providers as needed.

The ability to employ various compute engines such as Spark, Trino, and Flink enhances flexibility and allows teams to select the most appropriate tool for each specific task[3]. This capability is particularly valuable in heterogeneous environments where different workloads may benefit from different processing approaches. Additionally, Iceberg's approach to decoupling storage from compute facilitates independent scaling[3], enabling organizations to optimize resource allocation and control costs more effectively.

In the Japanese market, Iceberg has gained significant traction due to its compatibility with hybrid cloud architectures[12]. On-premises data remains very important in Japan, often due to security, compliance, or regulatory concerns. At the same time, cloud computing in Japan is growing. For this reason, many Japanese companies prefer a hybrid cloud approach, and Iceberg's ability to work consistently across on-premises and cloud environments makes it an ideal choice[12].

For organizations handling continuously growing datasets, Iceberg's scalability has proven to be a substantial advantage. Both read and write operations remain efficient even when dealing with petabytes of data[11]. This scalability represents a major improvement over traditional data lakes, which often experience performance degradation as data volumes increase. Organizations that have implemented Iceberg report simplified data management, with built-in schema and partition evolution features making life easier for data engineers and helping them avoid errors that can occur with manual interventions[11].

As we look ahead in 2025, several emerging developments are poised to further cement Apache Iceberg's dominance. The introduction of support for nanosecond-precision timestamps with time zones will open Iceberg to industries like finance and telecommunications, where high-precision data is critical[10]. Additionally, Spec V3's binary deletion vectors provide a scalable, efficient solution for handling deletions, which is especially valuable in regulatory environments or for GDPR compliance[10].

The ecosystem surrounding Iceberg continues to expand, with innovations like RBAC catalogs, enhanced streaming capabilities, materialized views, and geospatial data support on the horizon[8][10]. These advancements are making Iceberg more versatile and applicable to an even broader range of use cases. Meanwhile, the development of better support in Rust instead of JVM is unlocking new opportunities and potentially enhancing performance further[6].

Progress in tools that make Iceberg more accessible is also driving adoption. The pyiceberg library, along with Polaris and the DuckDB Iceberg extension, are expanding the format's reach beyond Java implementations[1]. While these alternatives don't yet have feature parity with Java Iceberg, their development signals growing interest in making the format more accessible to a wider range of developers and use cases[1].

Today, organizations can ingest data into Iceberg using Kafka or the PostgreSQL protocol (via RisingWave) and query that data using modern query engines like Trino, Snowflake, Databricks, and more[10]. This rich ecosystem is expected to grow even more robust in the coming years, further increasing Iceberg's appeal across different segments of the data engineering community.

By 2025, it has become clear that Apache Iceberg has won the format wars. What began as a competition between Delta Lake, Apache Hudi, and Iceberg has concluded with Iceberg emerging as the de facto open table format for data engineering[10]. This victory can be attributed to a combination of strategic industry endorsements, technical superiority, and alignment with key trends in data management and artificial intelligence.

The industry alignment around Iceberg, exemplified by Databricks' acquisition of Tabular and Snowflake's introduction of Polaris, has created an environment where choosing any other format increasingly means swimming against the tide. The technical advantages—from multi-engine interoperability to advanced schema evolution and partitioning capabilities—have made Iceberg the logical choice for organizations seeking to build modern, flexible data architectures.

Looking forward, Iceberg is on track to become the universal table format for data engineering[10]. With ongoing innovations in areas like new data types, binary deletion vectors, and integration with emerging technologies, Iceberg's position appears secure for the foreseeable future. Whether organizations are building real-time analytics pipelines, managing petabytes of historical data, or exploring cutting-edge data lakehouse architectures, Iceberg offers compelling capabilities that address their needs while providing a foundation for future growth and innovation.

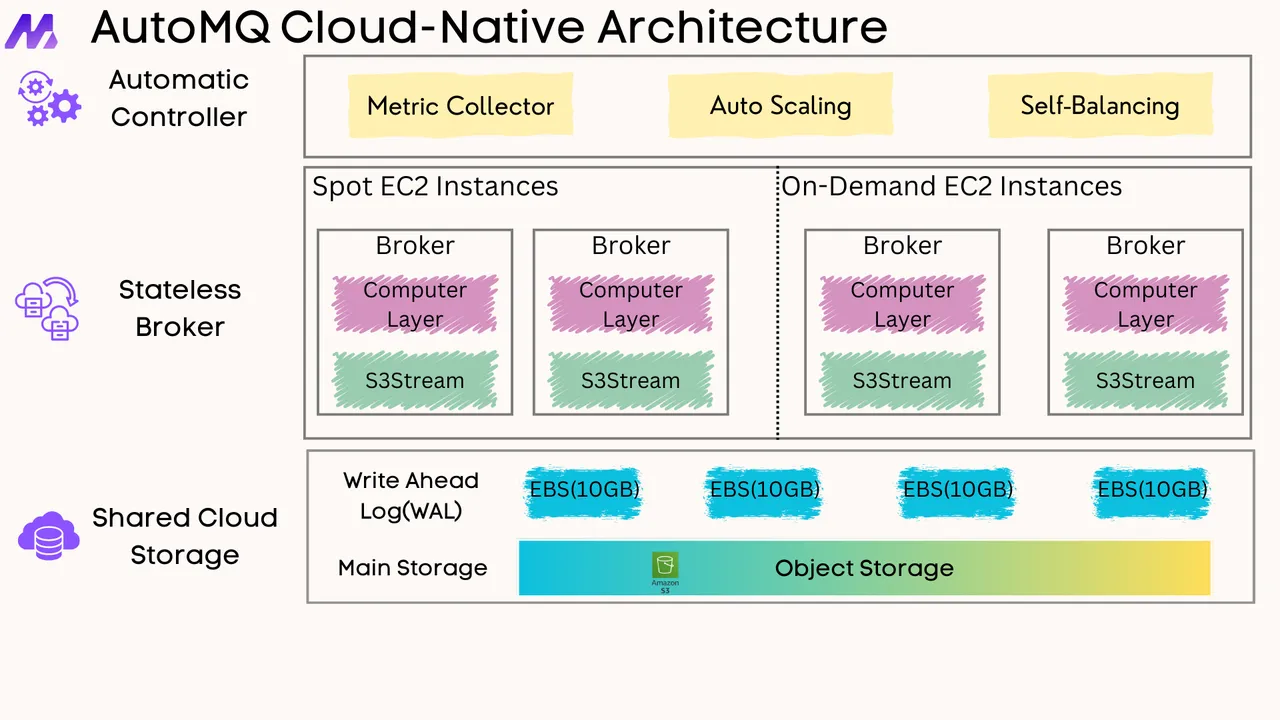

If you find this content helpful, you might also be interested in our product AutoMQ. AutoMQ is a cloud-native alternative to Kafka by decoupling durability to S3 and EBS. 10x Cost-Effective. No Cross-AZ Traffic Cost. Autoscale in seconds. Single-digit ms latency. AutoMQ now is source code available on github. Big Companies Worldwide are Using AutoMQ. Check the following case studies to learn more:

-

Grab: Driving Efficiency with AutoMQ in DataStreaming Platform

-

Palmpay Uses AutoMQ to Replace Kafka, Optimizing Costs by 50%+

-

How Asia’s Quora Zhihu uses AutoMQ to reduce Kafka cost and maintenance complexity

-

XPENG Motors Reduces Costs by 50%+ by Replacing Kafka with AutoMQ

-

Asia's GOAT, Poizon uses AutoMQ Kafka to build observability platform for massive data(30 GB/s)

-

AutoMQ Helps CaoCao Mobility Address Kafka Scalability During Holidays

-

JD.com x AutoMQ x CubeFS: A Cost-Effective Journey at Trillion-Scale Kafka Messaging

-

What are the Real Use Cases Being Solved Using Apache Iceberg?

-

Navigating the Data Lakehouse Revolution with Apache Iceberg

-

DuckDB Now Provides an End-to-End Solution for Apache Iceberg

-

10 Future Apache Iceberg Developments to Look Forward to in 2025

-

Beyond Cost Savings: The True Power of Apache Iceberg in Modern Data Architectures

-

10 Reasons Why Apache Iceberg Will Dominate Data Lakehouses in 2025

- What is automq: Overview

- Difference with Apache Kafka

- Difference with WarpStream

- Difference with Tiered Storage

- Compatibility with Apache Kafka

- Licensing

- Deploy Locally

- Cluster Deployment on Linux

- Cluster Deployment on Kubernetes

- Example: Produce & Consume Message

- Example: Simple Benchmark

- Example: Partition Reassignment in Seconds

- Example: Self Balancing when Cluster Nodes Change

- Example: Continuous Data Self Balancing

- Architecture: Overview

- S3stream shared streaming storage

- Technical advantage

- Deployment: Overview

- Runs on Cloud

- Runs on CEPH

- Runs on CubeFS

- Runs on MinIO

- Runs on HDFS

- Configuration

- Data analysis

- Object storage

- Kafka ui

- Observability

- Data integration