What is Kafka Exactly Once Semantics

Exactly Once Semantics (EOS) addresses one of the hardest problems in distributed messaging systems. Introduced in Apache Kafka 0.11 (released in 2017), EOS guarantees that each message is processed exactly once, eliminating both data loss and duplication. This feature fundamentally changed how stream processing applications handle data reliability and consistency. Kafka's implementation shows how a distributed system can work around constraints that seem impossible to overcome in theory.

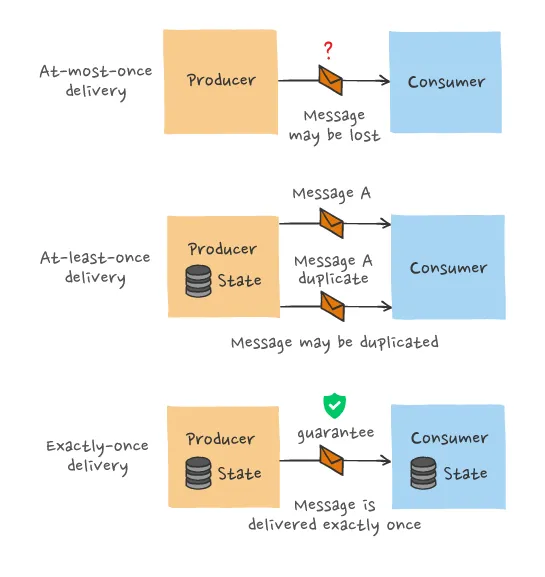

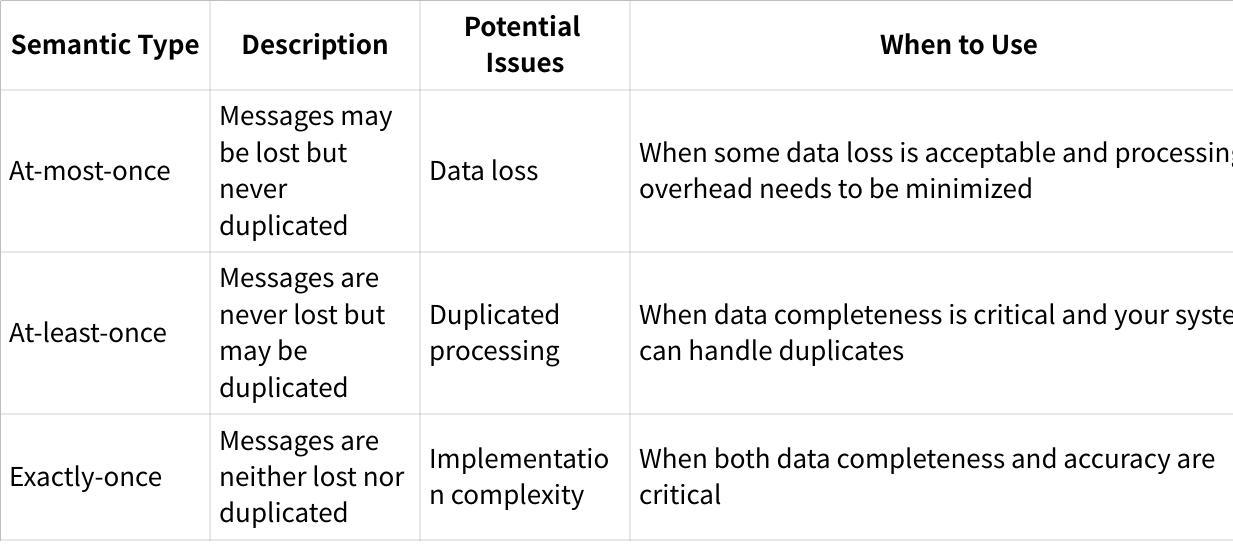

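Before diving into Kafka's specific implementation, it's important to understand the spectrum of delivery guarantees in messaging systems: at-most-once (a message may be lost but is never redelivered), at-least-once (a message is never lost but may be duplicated), and exactly-once (a message is neither lost nor duplicated).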

In distributed systems like Kafka, failures can occur at various points: a broker might crash, network partitions may happen, or clients could fail. These failures create significant challenges for maintaining exactly-once semantics[2][7].

As noted by experts like Mathias Verraes, the two hardest problems to solve in distributed systems are guaranteeing message order and achieving exactly-once delivery[2]. Prior to version 0.11, Kafka only provided at-least-once semantics with ordered delivery per partition, meaning producer retries could potentially cause duplicate messages[7].

Contrary to common misunderstanding, Kafka's EOS is not just about message delivery. It's a combination of two properties:

- Effectively Once Delivery: Ensuring each message appears in the destination topic exactly once
- Exactly Once Processing: Guaranteeing that processing a message produces deterministic state changes that occur exactly once[6]

For stream processing, EOS means that the read-process-write operation for each record happens effectively once, preventing both missing inputs and duplicate outputs[7].

Kafka achieves exactly-once semantics through several interconnected mechanisms:

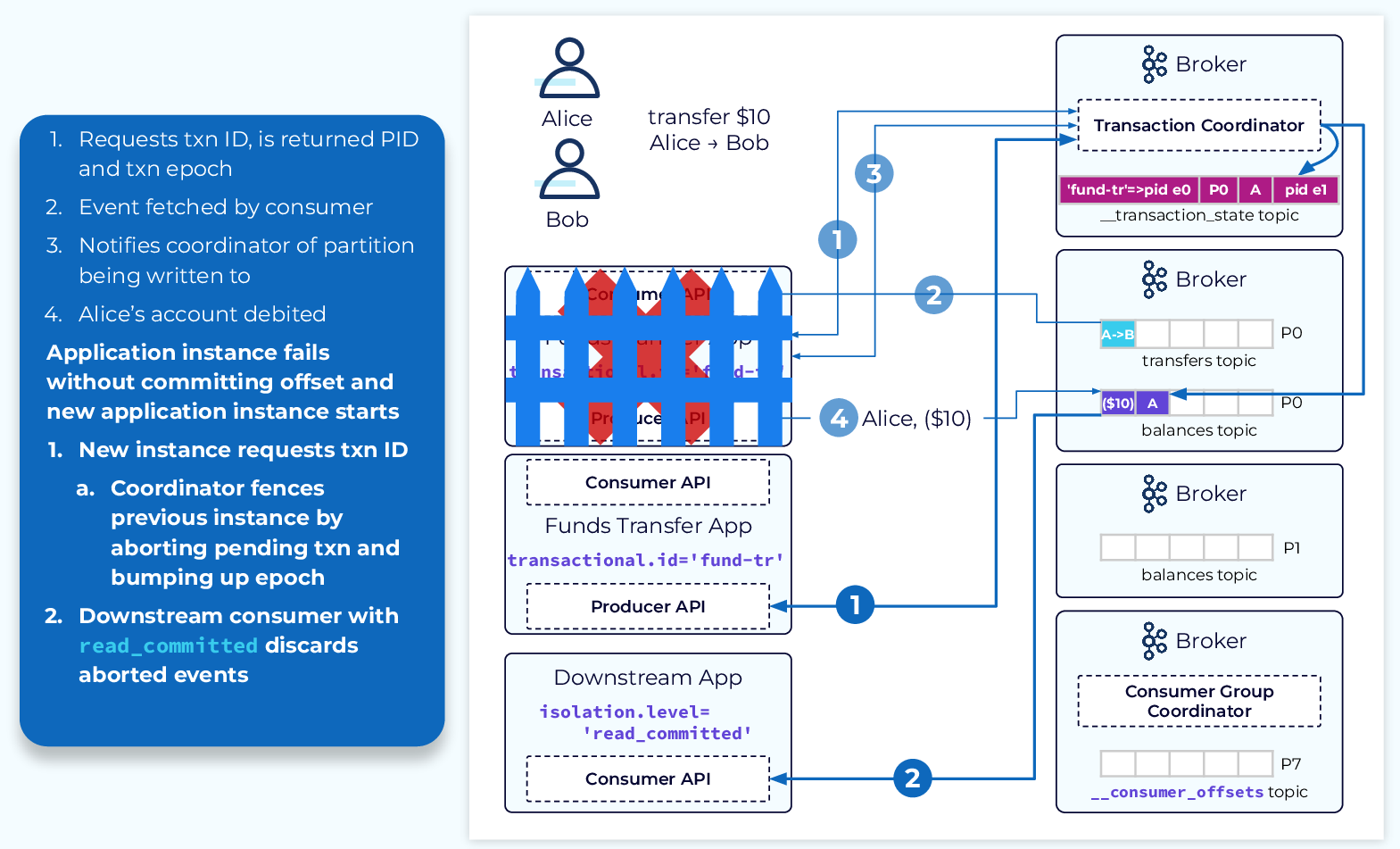

The idempotent producer is the foundation of EOS, upgrading Kafka's delivery guarantee from at-least-once to exactly-once between the producer and broker[7]. When idempotence is enabled, each producer is assigned a unique producer ID (PID), and each message it sends to a partition carries a sequence number. The broker uses these two identifiers to detect and discard duplicates that arrive when the producer retries a send[1][7].
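The deduplication idea can be sketched with a small in-memory model. This is a toy illustration under stated assumptions, not Kafka's broker code: `ToyPartitionLog` and its `append` method are hypothetical names, and the model tracks one sequence number per message rather than per batch.

```python
from dataclasses import dataclass, field


@dataclass
class ToyPartitionLog:
    """Toy model of one partition's log with per-producer deduplication."""

    records: list = field(default_factory=list)
    # Highest sequence number appended so far, keyed by producer ID (PID).
    last_seq: dict = field(default_factory=dict)

    def append(self, pid: int, seq: int, value: str) -> str:
        last = self.last_seq.get(pid, -1)
        if seq <= last:
            # Already written: this is a retry of a message whose ack was lost.
            return "duplicate"
        if seq != last + 1:
            # A gap means an earlier message never arrived; reject the write.
            return "out_of_order"
        self.records.append(value)
        self.last_seq[pid] = seq
        return "appended"


log = ToyPartitionLog()
results = [
    log.append(pid=7, seq=0, value="order-created"),
    log.append(pid=7, seq=1, value="order-paid"),
    # The ack for seq=1 is lost, so the producer retries the same message.
    log.append(pid=7, seq=1, value="order-paid"),
]
print(results)      # ['appended', 'appended', 'duplicate']
print(log.records)  # ['order-created', 'order-paid']
```

The retry is acknowledged as a duplicate instead of being appended a second time, so the log holds each message once even though it was sent twice.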

Kafka transactions allow multiple write operations across different topics and partitions to be executed atomically[7]. This is essential for stream processing applications that read from input topics, process data, and write to output topics.

A transaction in Kafka works as follows:

- The producer initiates a transaction using `beginTransaction()`
- Messages are produced to various topics/partitions
- The producer issues a commit or abort command
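The three steps above can be sketched as a toy state machine. The class and method names below are hypothetical Python, not the Kafka client API. The staging buffer is also a simplification: a real broker appends transactional records to the log immediately and relies on commit or abort markers to settle their fate.

```python
class ToyTransactionalProducer:
    """Toy model of the begin / produce / commit-or-abort lifecycle."""

    def __init__(self, cluster: dict):
        # cluster maps a (topic, partition) tuple to its list of visible records.
        self.cluster = cluster
        self.pending = None  # None means no transaction is open.

    def begin_transaction(self) -> None:
        if self.pending is not None:
            raise RuntimeError("a transaction is already open")
        self.pending = []

    def send(self, topic: str, partition: int, value: str) -> None:
        if self.pending is None:
            raise RuntimeError("call begin_transaction() first")
        self.pending.append(((topic, partition), value))

    def commit_transaction(self) -> None:
        if self.pending is None:
            raise RuntimeError("no open transaction to commit")
        # Every staged write becomes visible together.
        for key, value in self.pending:
            self.cluster.setdefault(key, []).append(value)
        self.pending = None

    def abort_transaction(self) -> None:
        if self.pending is None:
            raise RuntimeError("no open transaction to abort")
        # None of the staged writes become visible.
        self.pending = None


cluster = {}
producer = ToyTransactionalProducer(cluster)

producer.begin_transaction()
producer.send("orders", 0, "order-1")
producer.send("payments", 2, "payment-1")
producer.commit_transaction()

producer.begin_transaction()
producer.send("orders", 0, "order-2")
producer.send("payments", 2, "payment-2")
producer.abort_transaction()

print(cluster)  # {('orders', 0): ['order-1'], ('payments', 2): ['payment-1']}
```

Both writes of the committed transaction appear and both writes of the aborted one are absent, which is the all-or-nothing outcome across partitions that the text describes.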

The transaction coordinator is a module running inside each Kafka broker that maintains transaction state. For each transactional ID, it tracks:

- Producer ID: A unique identifier for the producer
- Producer epoch: A monotonically increasing number that helps identify the most recent producer instance[11]

This mechanism ensures that only one producer instance with a given transactional ID can be active at any time, enabling the "single-writer guarantee" required for exactly-once semantics[11].
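A minimal sketch of this fencing logic, assuming a coordinator that keeps the same producer ID and bumps the epoch each time an instance registers with an existing transactional ID. `ToyTransactionCoordinator`, `init_producer`, and `accept_write` are hypothetical names chosen for illustration.

```python
class ToyTransactionCoordinator:
    """Toy model of the per-transactional-ID state described above."""

    def __init__(self):
        # transactional_id -> (producer_id, producer_epoch)
        self.state = {}
        self.next_pid = 1000

    def init_producer(self, transactional_id: str) -> tuple:
        """Register a producer instance and fence any earlier instance."""
        if transactional_id in self.state:
            pid, epoch = self.state[transactional_id]
            epoch += 1  # Same producer ID, newer epoch.
        else:
            pid, epoch = self.next_pid, 0
            self.next_pid += 1
        self.state[transactional_id] = (pid, epoch)
        return pid, epoch

    def accept_write(self, transactional_id: str, pid: int, epoch: int) -> bool:
        """Accept a write only from the instance holding the current epoch."""
        return self.state.get(transactional_id) == (pid, epoch)


coordinator = ToyTransactionCoordinator()
old = coordinator.init_producer("payments-app-1")
new = coordinator.init_producer("payments-app-1")  # a restarted instance

print(old, new)                                          # (1000, 0) (1000, 1)
print(coordinator.accept_write("payments-app-1", *old))  # False
print(coordinator.accept_write("payments-app-1", *new))  # True
```

Once the restarted instance registers, the older instance still holds epoch 0 and its writes are rejected, so a "zombie" producer cannot interleave output with its replacement.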

On the consumer side, Kafka provides isolation levels that control how consumers interact with transactional messages:

- read_uncommitted: Consumers see all messages regardless of transaction status
- read_committed: Consumers only see messages from committed transactions[7]

When configured for exactly-once semantics, consumers use the read_committed isolation level to ensure they only process data from successful transactions[7].
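The difference between the two isolation levels can be illustrated with a toy filter over an in-memory log. The `Record` type and `poll` function are hypothetical, and the sketch assumes every transaction has already finished. A real read_committed consumer also stops at the first still-open transaction, which is not modeled here.

```python
from typing import NamedTuple, Optional


class Record(NamedTuple):
    value: str
    txn: Optional[str]  # None marks a non-transactional record.


def poll(log: list, txn_status: dict, isolation_level: str) -> list:
    """Return the record values a consumer would see at this isolation level."""
    if isolation_level == "read_uncommitted":
        return [record.value for record in log]
    if isolation_level == "read_committed":
        return [
            record.value
            for record in log
            if record.txn is None or txn_status.get(record.txn) == "committed"
        ]
    raise ValueError(f"unknown isolation level: {isolation_level}")


log = [
    Record("a", None),
    Record("b", "t1"),
    Record("c", "t2"),
    Record("d", "t1"),
]
txn_status = {"t1": "committed", "t2": "aborted"}

print(poll(log, txn_status, "read_uncommitted"))  # ['a', 'b', 'c', 'd']
print(poll(log, txn_status, "read_committed"))    # ['a', 'b', 'd']
```

The record written by the aborted transaction `t2` reaches only the read_uncommitted consumer, while non-transactional records are visible at both levels.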

Kafka transactions provide robust guarantees for atomicity and exactly-once semantics in stream processing applications. By understanding their underlying concepts, configuration options, common issues, and best practices, developers can leverage Kafka's transactional capabilities effectively. While they introduce additional complexity and overhead, their benefits in ensuring data consistency make them indispensable for critical applications.

This comprehensive exploration highlights the importance of careful planning and monitoring when using Kafka transactions, ensuring that they align with application requirements and system constraints.

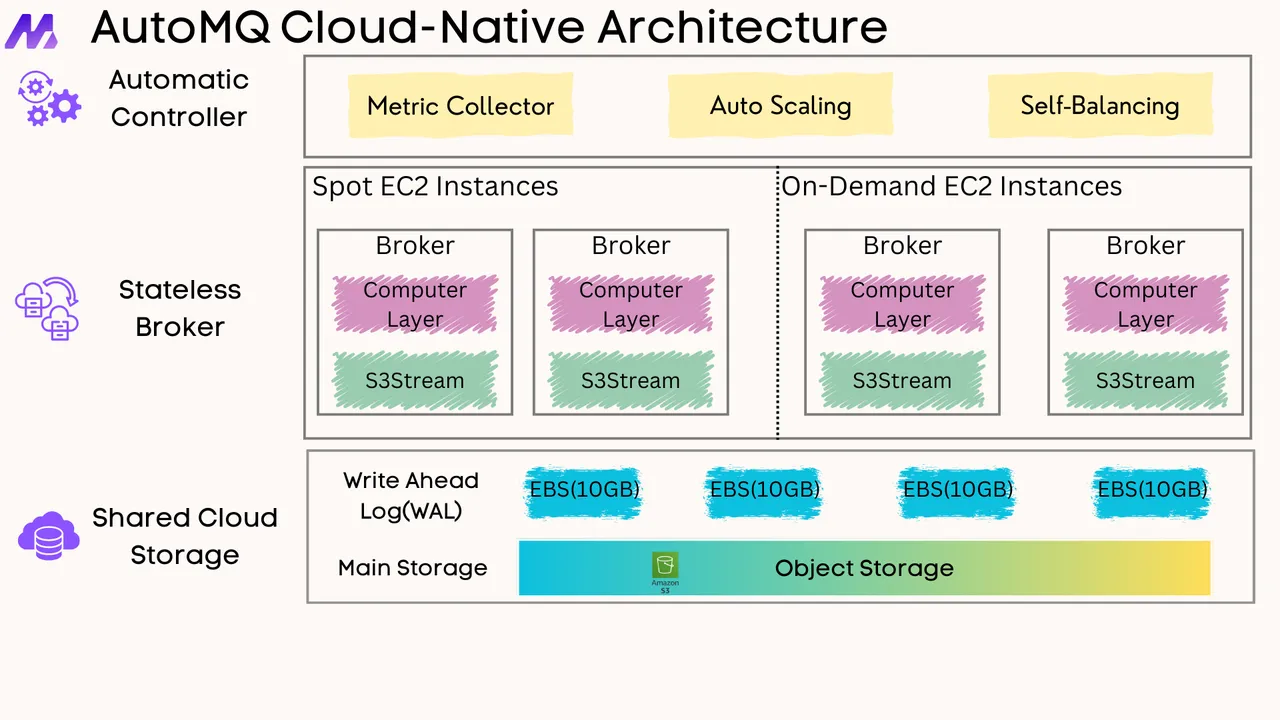
