
Apache Kafka vs. Azure Event Hubs

In today's data-driven landscape, event streaming platforms have become essential for building real-time applications and data pipelines. Apache Kafka and Microsoft Azure Event Hubs stand out as two prominent solutions in this space. This comprehensive comparison examines their architectures, features, performance characteristics, security models, and ideal use cases to help you make an informed decision for your streaming needs.

The key finding up front: Apache Kafka, as an open-source platform, offers maximum flexibility and extensive customization options, while Azure Event Hubs provides a fully managed service with native Kafka protocol support, reducing operational overhead while staying compatible with the Kafka ecosystem.

Apache Kafka is a distributed event streaming platform that you install and operate yourself, either on your own infrastructure or on a cloud provider's. A Kafka cluster consists of brokers that store and serve data organized into topics[1]. Each topic is divided into partitions, and each partition has a leader broker and, when replicated, one or more follower brokers that provide fault tolerance and high availability[1][2].

Clients interact with Kafka through the producer and consumer APIs: producers write records to topics, and consumers read from them.
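
To make the topic/partition model concrete, here is a minimal sketch of how a keyed record could be routed to a partition. It assumes a simple CRC32 hash purely for illustration (Kafka's default partitioner uses a different hash function), and the names `partition_for` and `route` are hypothetical helpers, not part of any Kafka client.

```python
import zlib


def partition_for(key: bytes, num_partitions: int) -> int:
    """Map a record key to a partition index.

    Illustrative only: CRC32 stands in for the hash a real client would use.
    """
    if num_partitions <= 0:
        raise ValueError("num_partitions must be positive")
    return zlib.crc32(key) % num_partitions


def route(records, num_partitions):
    """Group (key, value) records by the partition their key hashes to."""
    partitions = {p: [] for p in range(num_partitions)}
    for key, value in records:
        partitions[partition_for(key, num_partitions)].append(value)
    return partitions


if __name__ == "__main__":
    records = [(b"user-1", "login"), (b"user-2", "login"), (b"user-1", "logout")]
    for partition, values in route(records, 3).items():
        print(partition, values)
```

Because the partition depends only on the key, all records for `user-1` land in the same partition and keep their relative order, which is the property consumers typically rely on.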

Azure Event Hubs is a fully managed, cloud-native service that provides a unified event streaming platform with native Apache Kafka protocol support[6][8]. It consists of namespaces (equivalent to Kafka clusters) containing event hubs (equivalent to Kafka topics)[8]. Like Kafka topics, event hubs are divided into partitions that store and distribute data[1].

The key architectural difference is that Event Hubs abstracts away the underlying infrastructure. You don't need to manage brokers, disks, or networks; you create a namespace, which has a fully qualified domain name, and then create event hubs within it[1][8]. Event Hubs exposes a single virtual IP address as the endpoint, which simplifies network configuration compared to Kafka, where clients must be able to reach every broker in the cluster[1].

| Apache Kafka Concept | Azure Event Hubs Equivalent |
|---|---|
| Cluster | Namespace |
| Topic | Event Hub |
| Partition | Partition |
| Consumer Group | Consumer Group |
| Offset | Offset |

Key features of Apache Kafka:

- Open-source platform with a large and active community[2]
- Distributed architecture ensuring fault tolerance and scalability[2]
- High throughput with low latency for real-time data processing[2]
- Extensive ecosystem with connectors, stream processing libraries (Kafka Streams), and monitoring tools[2]
- Data durability through replication and disk storage[16]

Key features of Azure Event Hubs:

- Fully managed service with high availability and disaster recovery options[2][6]
- Native Kafka protocol support, allowing existing Kafka applications to connect without code changes[6][8]
- Seamless Azure integration with services like Azure Functions, Stream Analytics, and Data Explorer[2][6]
- Schema Registry for managing schemas in event streaming applications[6]
- Auto-scaling through the Auto-inflate feature, which automatically increases throughput units as load grows[1][8]
- Multi-protocol support, including AMQP, HTTP, and Kafka protocols[2][9]
- Event Hubs Capture for automatic batching and archiving of streaming data[9]

Kafka is designed for high throughput and can handle millions of events per second with proper configuration. Performance depends on:

- Number and size of partitions
- Replication factor
- Hardware resources allocated
- Network configuration

Scaling Kafka requires adding more brokers to the cluster and carefully rebalancing partitions, which can be operationally complex[1].
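
A toy model helps show why adding a broker calls for careful rebalancing. The sketch below assumes a naive round-robin placement rule, and both function names are hypothetical. Kafka's own reassignment tooling is more involved, so treat this only as an illustration of how much data can move.

```python
def assign_round_robin(num_partitions, brokers):
    """Place partition p on brokers[p % len(brokers)] (naive, for illustration)."""
    if not brokers:
        raise ValueError("at least one broker is required")
    return {p: brokers[p % len(brokers)] for p in range(num_partitions)}


def moved_partitions(before, after):
    """Return the partitions whose owning broker differs between two assignments."""
    return sorted(p for p in before if before[p] != after[p])


if __name__ == "__main__":
    before = assign_round_robin(6, [1, 2, 3])
    after = assign_round_robin(6, [1, 2, 3, 4])  # one broker added
    print("partitions that must move:", moved_partitions(before, after))
```

With six partitions and a fourth broker added, this naive rule relocates half of the partitions, and each move means copying that partition's data across the network.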

Azure Event Hubs can handle millions of events per second with low latency[6]. Its performance scaling is controlled through:

- Throughput units (TUs) in the Standard tier or processing units (PUs) in the Premium tier[7][8]
- Each TU provides up to 1 MB/s or 1,000 events per second of ingress (whichever limit is reached first) and up to 2 MB/s or 4,096 events per second of egress[7]
- The Auto-inflate feature automatically scales up throughput units when limits are reached[8]
- A single capacity unit (CU) in a dedicated cluster can achieve 100-250 MB/s, depending on workload patterns[11]
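
The per-TU ingress limits above translate into a simple sizing calculation. This is a rough sketch that looks only at ingress, using the 1 MB/s and 1,000 events per second figures quoted above. The function name is hypothetical, and real sizing should also account for egress and tier-specific caps.

```python
import math

# Per-TU ingress limits as quoted in the text above.
TU_INGRESS_MB_PER_S = 1.0
TU_INGRESS_EVENTS_PER_S = 1000


def throughput_units_needed(ingress_mb_per_s: float, ingress_events_per_s: float) -> int:
    """Estimate the TUs needed so that neither ingress limit is exceeded."""
    if ingress_mb_per_s < 0 or ingress_events_per_s < 0:
        raise ValueError("rates must be non-negative")
    by_bandwidth = math.ceil(ingress_mb_per_s / TU_INGRESS_MB_PER_S)
    by_events = math.ceil(ingress_events_per_s / TU_INGRESS_EVENTS_PER_S)
    # Whichever limit is hit first determines the requirement; keep at least 1 TU.
    return max(1, by_bandwidth, by_events)


if __name__ == "__main__":
    # Many small events: the event-count limit dominates.
    print(throughput_units_needed(0.5, 4500))
    # Fewer, larger events: the bandwidth limit dominates.
    print(throughput_units_needed(3.5, 2000))
```

The calculation shows that a workload of many small events can need more TUs than its bandwidth alone would suggest.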

Event Hubs can accommodate events up to 20 MB with self-serve scalable dedicated clusters[6], which is significantly larger than standard message sizes in many streaming platforms.

Kafka's security features must be configured manually, and securing a cluster properly requires significant expertise. They include:

- TLS/SSL encryption for data in transit
- SASL authentication mechanisms (PLAIN, SCRAM, Kerberos)
- ACL-based authorization for access control

Azure Event Hubs provides comprehensive security features[7][8]:

- OAuth 2.0 token-based authentication integrated with Microsoft Entra ID[8]
- Shared Access Signatures (SAS) for delegated access[7][8]
- Role-Based Access Control (RBAC) for fine-grained permissions[8]
- TLS encryption required for all data in transit[7]
- Network security features, including Private Endpoints and VNet service endpoints
- Application groups for resource access policies like throttling[9]

When using Kafka clients with Event Hubs, authentication is configured through SASL mechanisms. For example[7][8]:

    bootstrap.servers=NAMESPACENAME.servicebus.windows.net:9093
    security.protocol=SASL_SSL
    sasl.mechanism=PLAIN
    sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="$ConnectionString" password="{CONNECTION STRING}";
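
Since the bootstrap server is just the namespace host on port 9093, these settings can be derived from the connection string itself. The sketch below assumes the connection string has the usual `Endpoint=sb://<namespace>.servicebus.windows.net/;SharedAccessKeyName=...;SharedAccessKey=...` shape. The helper names are hypothetical and the example key is a placeholder.

```python
from urllib.parse import urlparse


def parse_connection_string(conn_str: str) -> dict:
    """Split 'Key=Value;Key=Value' pairs; values may themselves contain '='."""
    parts = {}
    for segment in conn_str.strip().split(";"):
        if not segment:
            continue
        key, _, value = segment.partition("=")  # split on the first '=' only
        parts[key] = value
    return parts


def kafka_client_config(conn_str: str) -> dict:
    """Build the Kafka client settings shown above from a connection string."""
    endpoint = parse_connection_string(conn_str).get("Endpoint")
    if not endpoint:
        raise ValueError("connection string has no Endpoint entry")
    host = urlparse(endpoint).hostname
    return {
        "bootstrap.servers": f"{host}:9093",
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": "PLAIN",
        # The username is the literal text $ConnectionString, not a variable.
        "sasl.jaas.config": (
            "org.apache.kafka.common.security.plain.PlainLoginModule required "
            f'username="$ConnectionString" password="{conn_str}";'
        ),
    }


if __name__ == "__main__":
    example = (
        "Endpoint=sb://demo-ns.servicebus.windows.net/;"
        "SharedAccessKeyName=send-only;SharedAccessKey=PLACEHOLDER=="
    )
    for name, value in kafka_client_config(example).items():
        print(f"{name}={value}")
```

Deriving the host this way keeps the namespace name in one place, so the endpoint and the credential cannot drift apart.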

Apache Kafka requires significant operational effort:

- Installation and cluster setup
- Broker configuration and maintenance
- Partition management and rebalancing
- Monitoring and alerting setup
- Scaling operations and cluster upgrades

Several management tools are available, including Conduktor, which provides features like[4]:

- UI for managing Kafka resources
- Authentication and authorization options (LDAP, SAML, OpenID Connect)
- Schema registry support
- Multi-cluster management capabilities

Azure Event Hubs minimizes operational overhead[1][8]:

- No servers, disks, or networks to manage
- Automatic scaling with the Auto-inflate feature
- Built-in monitoring through Azure Monitor
- Point-and-click disaster recovery configuration
- Simplified updates and patching handled by Microsoft

Best practices for Event Hubs operations include[15]:

- Creating SendOnly and ListenOnly policies for publishers and consumers
- Using batched events in high-throughput scenarios
- Implementing proper exception handling in client applications
- Considering geo-disaster recovery for business continuity
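
The batching recommendation can be illustrated with a small, SDK-independent sketch: group serialized events so that each batch stays under a size limit. The function name and the limit passed in are assumptions for illustration. A real client library would normally provide its own batch type and enforce the service's actual limits.

```python
def batch_by_size(events, max_batch_bytes):
    """Yield lists of byte payloads whose combined size stays within max_batch_bytes."""
    batch, batch_size = [], 0
    for event in events:
        size = len(event)
        if size > max_batch_bytes:
            raise ValueError("a single event exceeds the batch size limit")
        # Flush the current batch before it would overflow.
        if batch and batch_size + size > max_batch_bytes:
            yield batch
            batch, batch_size = [], 0
        batch.append(event)
        batch_size += size
    if batch:
        yield batch


if __name__ == "__main__":
    payloads = [b"a" * 400, b"b" * 400, b"c" * 400, b"d" * 100]
    for i, batch in enumerate(batch_by_size(payloads, max_batch_bytes=1000)):
        print(f"batch {i}: {len(batch)} events, {sum(map(len, batch))} bytes")
```

Sending one request per batch rather than one per event reduces per-request overhead, which is why batching matters most in high-throughput scenarios.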

Kafka has a rich ecosystem of integrations:

- Kafka Connect framework for data import/export
- Kafka Streams for stream processing
- Integration with Hadoop, Spark, and other big data technologies
- Third-party monitoring and management tools

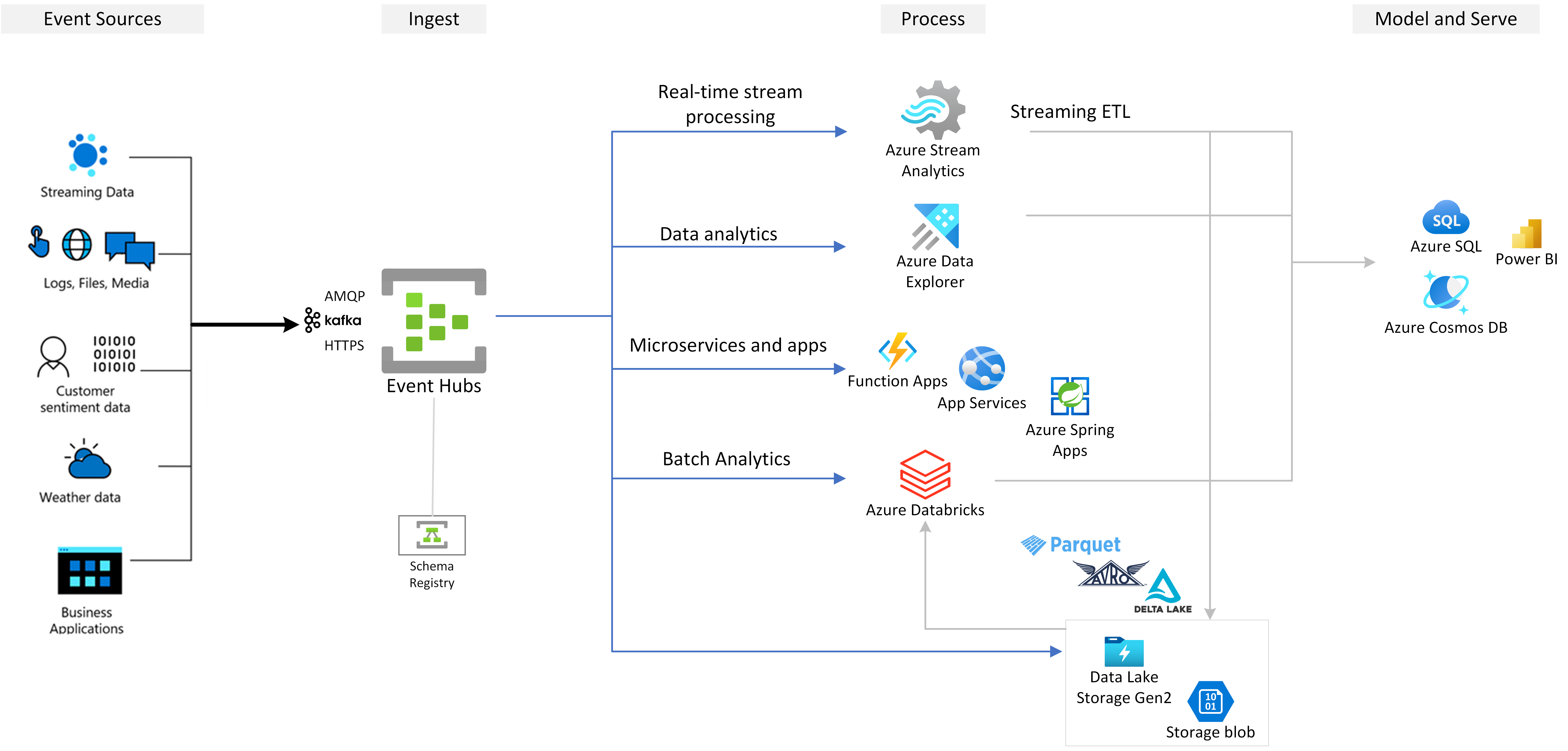

Azure Event Hubs offers seamless integration with[2][6]:

- Azure Stream Analytics for real-time analytics
- Azure Functions for serverless processing
- Azure Data Explorer for data exploration and analytics
- Azure Logic Apps for workflow automation
- Microsoft Fabric for end-to-end analytics

Apache Kafka is ideal for[2][16]:

- Organizations requiring complete control over their infrastructure
- Complex event-driven architectures with extensive customization needs
- Scenarios demanding maximum flexibility in configuration
- Large enterprises with dedicated Kafka expertise
- Use cases requiring specific Kafka features not yet supported in Event Hubs

Azure Event Hubs is best suited for[14]:

- Organizations already invested in the Azure ecosystem
- Teams seeking to minimize operational overhead
- Scenarios requiring seamless integration with Azure services
- Projects needing quick setup and reduced time-to-market
- Enterprises with strict security and compliance requirements
- Existing Kafka workloads that want to reduce operational burden

While Apache Kafka is open-source, total cost of ownership includes:

- Infrastructure costs (servers, storage, networking)
- Operational costs (administration, monitoring, maintenance)
- Potential costs for enterprise support or managed Kafka services

Azure Event Hubs costs depend on[11][14]:

- Selected tier (Standard, Premium, or Dedicated)
- Number of throughput units or processing units
- Ingress volume (Event Hubs charges for both reserved bandwidth and ingress events)
- Additional features like Schema Registry usage

For throughput above 50 MB/s, dedicated clusters can be more cost-effective[11].

For organizations considering migrating from Kafka to Azure Event Hubs, Microsoft provides a straightforward path[13]:

1. Create an Event Hubs namespace and obtain the connection string.
2. Update the Kafka client configuration to point to the Event Hubs endpoint:

       bootstrap.servers={NAMESPACE}.servicebus.windows.net:9093
       request.timeout.ms=60000
       security.protocol=SASL_SSL
       sasl.mechanism=PLAIN
       sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="$ConnectionString" password="{CONNECTION STRING}";

3. Run your Kafka application and verify event reception through the Azure portal[13].
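
The configuration step amounts to overlaying a handful of settings on an existing client configuration while leaving everything else untouched. A minimal sketch of that overlay follows. The function names and the sample "before" configuration are hypothetical, and the overridden keys are exactly those listed in the steps above.

```python
def migrate_to_event_hubs(existing: dict, namespace: str, connection_string: str) -> dict:
    """Return a copy of an existing Kafka client config pointed at Event Hubs."""
    overrides = {
        "bootstrap.servers": f"{namespace}.servicebus.windows.net:9093",
        "request.timeout.ms": "60000",
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": "PLAIN",
        "sasl.jaas.config": (
            "org.apache.kafka.common.security.plain.PlainLoginModule required "
            f'username="$ConnectionString" password="{connection_string}";'
        ),
    }
    migrated = dict(existing)  # leave the caller's config unmodified
    migrated.update(overrides)
    return migrated


def to_properties(config: dict) -> str:
    """Render a config dict as key=value lines for a .properties file."""
    return "\n".join(f"{key}={value}" for key, value in config.items())


if __name__ == "__main__":
    current = {
        "bootstrap.servers": "broker1:9092,broker2:9092",
        "group.id": "orders-consumer",
        "security.protocol": "PLAINTEXT",
    }
    print(to_properties(migrate_to_event_hubs(current, "demo-ns", "{CONNECTION STRING}")))
```

Application-level settings such as `group.id` carry over unchanged, which is what allows existing producers and consumers to keep running with only a configuration change.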

Advantages of Apache Kafka:

- Complete control over infrastructure and configuration
- Extensive customization options for specific requirements
- Rich ecosystem with a wide range of tools and extensions
- Open-source with no vendor lock-in concerns
- Strong community support and continuous development

Advantages of Azure Event Hubs:

- Operational simplicity with no infrastructure management
- Native Azure integration for comprehensive cloud solutions
- Auto-scaling with minimal configuration
- Enterprise security features built-in
- Kafka compatibility without the operational overhead

The choice between Apache Kafka and Azure Event Hubs depends on your specific requirements, existing investments, and operational preferences.

Choose Apache Kafka if you need maximum control, have specific customization requirements, or have dedicated teams capable of managing Kafka infrastructure.

Choose Azure Event Hubs if you prefer a fully managed service with minimal operational overhead, need seamless Azure integration, or want to maintain Kafka compatibility while reducing management complexity.

For organizations already using Azure services, Event Hubs offers a compelling option with its native Kafka protocol support, allowing you to leverage Kafka clients and applications while benefiting from Azure's managed service capabilities[6][8].

As event-driven architectures continue to evolve, both platforms remain strong choices for building scalable, reliable, and high-performance streaming data solutions.


References:

- Comparing Azure Event Hub and Kafka: Which is Right for Your Project?
- Redpanda Expands Multi-Cloud Offerings with Microsoft Azure Integration
- Azure Event Hubs Limits and Comparison to Pure Kafka Cluster
- Choosing Between Apache Kafka, Azure Event Hubs, and Confluent Cloud for Microsoft Fabric Lakehouse
